Getting Started with RSelenium

The value of unstructured data has never been more prominent than with the recent breakthrough of large language models such as ChatGPT and Google Bard. Your organization can also capitalize on this success by building your own expert models. And what better way to collect droves of unstructured data than by scraping it?

This article outlines how to scrape the web using R and a package known as RSelenium. RSelenium is a binding for the Selenium WebDriver, a popular web scraping tool with unmatched versatility. Selenium's interaction capabilities let you manipulate a web page before scraping its contents. This makes it one of the most popular web scraping frameworks.

You'll learn how to get started and scrape both a simple page and a dynamic page.

🤖 Check out how Selenium performs in headless mode vs other headless browsers when trying to go undetected by browser fingerprinting technology in our How to Bypass CreepJS and Spoof Browser Fingerprinting face-off.

Installing RSelenium and Prerequisites

Since the RSelenium package is simply an interface to a Selenium server, you need to have a working Selenium server to use it. Luckily, spinning up a Selenium server on your local machine is very easy, but it requires some preparation.

First, make sure you have created a new R project, which is where you'll keep all the required files outlined below.

Since Selenium runs on Java, make sure you have it installed. You can download it here. Next, download a standalone version of Selenium, and put it in your R project folder. Then, download ChromeDriver and also put it in the R project folder.

Finally, install the RSelenium library in your R terminal:

install.packages('RSelenium')

Open up a command prompt (Windows) or terminal (Linux/Max) and navigate to your project folder. Run the following command, making sure to replace the xs with the version of Selenium you downloaded:

java -jar selenium-server-standalone-x.x.x.jar -port 4444

Scraping Static Pages

Now that all the prerequisites are behind you and your Selenium server is running, it's time for some web scraping examples.

First, let's try scraping some static pages, which are pages that require no interaction like clicking certain DOM elements, scrolling, logging in, or the presence or absence of specific cookies.

Start your Selenium client in R with the following code:

rem_driver <- remoteDriver(remoteServerAddr = 'localhost',

browserName = 'chrome',

port=4444L)

rem_driver$open()

rem_driver$navigate("https://www.scrapingbee.com/blog/")

The code connects to your local server via port 4444 and spins up a Chrome browser. If all goes well, a Chrome window will launch on your machine, and it will navigate to our web scraping blog, which will be used for all the examples in this section.

Accessing Elements

There are various approaches to accessing and selecting elements on a web page. If you're familiar with frontend development, you'll likely get déjà vu because RSelenium's functions map perfectly to JavaScript's element-selection methods. Three approaches are discussed below: selection via ID, class, and CSS selectors.

Selecting a single element by ID is the most straightforward approach. It's done by using the findElement method and specifying two arguments—using and value. The snippet below selects an element that contains a lot of content and stores it in elem_content:

elem_content <- rem_driver$findElement(using = 'id', value='content')

In the sections that follow, you'll learn how to extract an element's attributes and inner text. For now, let's see how you can select a single element by class, which is similar.

The code snippet below selects a single blog post by referring to the p-10 class:

elem_blog_posts <- rem_driver$findElement(using = 'class', value='p-10')

However, a class isn't unique to a single element, so you need an extra method to select multiple elements: the findElements method. It operates in exactly the same way as the findElement method, but it will return all found elements in a list. Accessing a single element within this list requires you specifying an index:

elem_blog_posts <- rem_driver$findElements(using = 'class', value='p-10')

elem_third_blog_post <- elem_blog_posts[[3]]

Finally, the most flexible way for selecting elements is by specifying a CSS selector. Once again, you'll use the findElements method. However, by specifying css to the using argument, you can be really creative. The code snippet below selects the links to the most popular articles:

elem_popular <- rem_driver$findElements(using = "css", "div.max-w-full > a[href^='/blog/']")

Extracting Attributes and Inner Text

Now that you know about the three approaches to selecting elements, let's switch to extracting information: attributes and inner text.

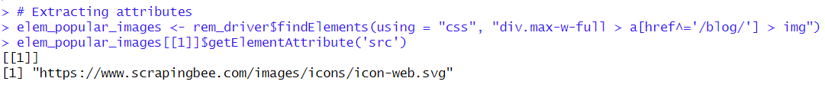

The first piece of information you'll extract is an image link.

The code snippet below creates a list that contains elements that hold all the images and icons of the most popular blog posts. Selecting the first element via its index lets you use the getElementAttribute method and specify the attribute you'd like to extract. Specifying the src attribute lets you get the image link.

elem_popular_images <- rem_driver$findElements(using = "css", "div.max-w-full > a[href^='/blog/'] > img")

elem_popular_images[[1]]$getElementAttribute('src')

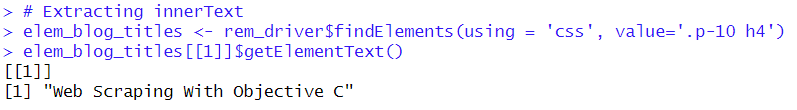

Another piece of information that is often scraped is the inner text of an element. In the code snippet below, all blog titles are put in a list. It extracts the title, which is the text within each element, by using the getElementText method:

elem_blog_titles <- rem_driver$findElements(using = 'css', value='.p-10 h4')

elem_blog_titles[[1]]$getElementText()

Scraping Dynamic Pages

Scraping dynamic pages usually requires extra effort, such as clicking a specific element or scrolling down to a section of the page. In the example below, we'll scrape the top five Substack blogs in the business category.

First, navigate to Substack using the same method as before:

rem_driver$navigate("https://substack.com")

Clicking an Element

Since this is a dynamic website, you need to render the business blogs first by clicking on the corresponding menu item. The following snippet selects the menu item and uses the clickElement method to click on it:

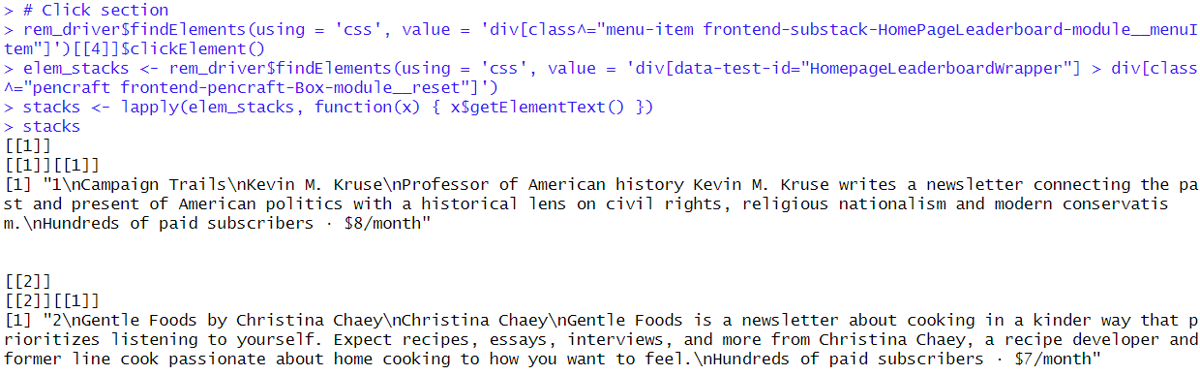

rem_driver$findElements(using = 'css', value = 'div[class^="menu-item frontend-substack-HomePageLeaderboard-module__menuItem"]')[[4]]$clickElement()

Now that the top five business blogs are visible, you can select and scrape them from the page.

The code snippet below once again uses the findElements to find the elements with CSS selectors. It then uses R's lapply to apply the getElementText method on the five selected elements:

elem_stacks <- rem_driver$findElements(using = 'css', value = 'div[data-test-id="HomepageLeaderboardWrapper"] > div[class^="pencraft frontend-pencraft-Box-module__reset"]')

stacks <- lapply(elem_stacks, function(x) { x$getElementText() })

Entering Text

Clicking an element is only a single interaction. Sometimes, you would also need to input some text. Luckily, that's also something RSelenium can handle.

The code snippet below enters the text data science in Substack's search bar and then presses the enter button:

rem_driver$findElement(using = 'css', value = 'input[name="query"]')$sendKeysToElement(list("data science", key = "enter"))

It renders the search results page for the search term data science.

When entering text and clicking does not suffice, you can always inject JavaScript into a website, which gives you the full flexibility to manipulate the DOM before scraping a page.

However, keep in mind that scraping enormous amounts of data can be a challenge since a website's server typically blocks IP addresses or asks for CAPTCHA confirmation when it receives too many requests. For these use cases, you'll need to turn to more professional tools.

Conclusion

This article explained how you can use RSelenium for scraping websites by selecting elements and extracting their attributes and inner text. Since Selenium simulates a full browser experience, it's useful for interacting with and extracting information from a dynamic website, as you saw.

However, RSelenium also has its limits. If you prefer not to have to deal with rate limits, proxies, user agents, and browser fingerprints, check out Scrapingbee's no-code web scraping API. The first 1,000 calls are free!