You're reviewing your Google Ads dashboard on a Monday morning, coffee in hand, when you notice your cost-per-click has mysteriously skyrocketed over the weekend. Your best-performing keywords are suddenly bleeding money, and your once-reliable ad positions are slipping. Sound familiar?

In my years of experience with PPC campaigns and developing web scraping solutions, I've learned that in the high-stakes world of Google Ads, flying blind to your competitors' moves isn't just risky – it's expensive.

Here's a reality check: by some industry estimates, the average business wastes 76% of its PPC budget on ineffective strategies, largely because it isn't tracking what competitors are doing. I've seen startups burn through their quarterly ad budgets in weeks, and enterprise companies lose market share simply because they couldn't see the competitive landscape clearly enough to adapt.

But here's the good news: while most marketers understand the importance of Google Ads competitor analysis, few have mastered the art of doing it effectively and efficiently with automation. Whether you're:

- A PPC manager trying to justify your strategy to stakeholders

- An entrepreneur/business owner watching your ad spend like a hawk

- Or a digital marketing agency juggling multiple client campaigns

You're about to discover strategies for uncovering your competitors' Google Ads playbook – from their keyword strategy to their ad copy experiments, bid adjustments, and even their approximate ad spend.

Pro Tip: The best part? You don't need a massive budget or a team of analysts. What you need is the right approach, the right tools, and the insider knowledge we've gained from analyzing thousands of ads across countless industries.

Ready to stop guessing and start knowing exactly what your competitors are up to in Google Ads? Let's dive in.

Why Monitor Your Google Ads Competitors? Key Data from 500+ PPC Campaigns

Let's start with a hard truth I've learned from analyzing hundreds of PPC campaigns: In Google Ads, what you don't know absolutely can hurt you. And in my experience, it usually hits your wallet first.

The 3 Hidden Costs of Flying Blind in PPC

Remember that scene in poker movies where the rookie sits down at a high-stakes table without studying their opponents? That's essentially what you're doing if you're running Google Ads without a competitor analysis system in place. Here's what's actually at stake:

- Invisible Budget Leaks: I recently audited a campaign where a client was overpaying on their core keywords simply because they didn't notice competitors had temporarily reduced their bids during off-peak hours.

- Missed Opportunities: Another client discovered they were completely absent from a highly profitable keyword segment their competitors had quietly moved into. By the time they noticed, catching up cost them 3x more than if they'd spotted the trend early.

- Market Position Erosion: In one particularly painful case, a SaaS company's cost per acquisition (CPA) doubled in just 2 weeks because 3 competitors simultaneously started targeting their branded terms. They could have protected their position for a fraction of the cost if they'd spotted the early warning signs.

3 Key Benefits of Google Ads Competitor Analysis

| Category | Key Insights |

|---|---|

| Strategic Bid Management | Identify optimal bid ranges based on competitor behavior, spot patterns in competitor bid adjustments, leverage timing for maximum ROI. |

| Ad Copy Intelligence | Track competitor messaging evolution, spot successful value propositions, identify seasonal promotional patterns. |

| Keyword Opportunity Discovery | Uncover profitable keyword gaps, identify emerging market trends, find unexploited niche segments. |

Tips for Effective Competitor Monitoring in PPC Campaigns

Competitor monitoring can be an invaluable tool in managing your PPC campaigns effectively. Here are three practical steps to refine your approach and gain a competitive edge:

Identify Underserved Long-Tail Keywords: Focus on finding keyword opportunities that competitors may have overlooked. Long-tail keywords often have less competition and can yield high-intent traffic.

Track Competitor Ad Schedules: Monitoring when competitors adjust their bids can help you identify optimal timing windows to launch your campaigns. This approach can maximize ROI during periods of reduced competition.

Leverage Intelligent Price Scraping: Use automated tools ethically to analyze competitor pricing trends and position your offers competitively, especially in price-sensitive markets. Understanding how competitors adjust their pricing strategies allows you to stay ahead in the bidding game.

By implementing these steps, you can make informed decisions to optimize your PPC campaigns, adjust to market trends, and improve overall performance.
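To make the long-tail tip concrete, here's a minimal sketch of a keyword-gap check. It assumes you've exported a competitor's keywords with a 0-1 competition score (for example, from one of the third-party tools covered later) alongside your own keyword list. The function name, the four-word "long-tail" cutoff, and the 0.4 competition ceiling are illustrative assumptions, not industry standards.

```python
from typing import Dict, List, Set


def find_long_tail_gaps(
    competitor_keywords: Dict[str, float],
    own_keywords: Set[str],
    min_words: int = 4,
    max_competition: float = 0.4,
) -> List[str]:
    """Return low-competition long-tail keywords a competitor targets and you don't.

    competitor_keywords maps each keyword to a competition score from 0.0 to 1.0.
    "Long-tail" here simply means at least `min_words` words (a rough heuristic).
    """
    own_normalized = {kw.strip().lower() for kw in own_keywords}
    gaps = []
    for keyword, competition in competitor_keywords.items():
        normalized = keyword.strip().lower()
        if normalized in own_normalized:
            continue  # you already cover this keyword
        if len(normalized.split()) < min_words:
            continue  # too short to count as long-tail
        if competition <= max_competition:
            gaps.append((competition, normalized))
    # Least-contested opportunities first (ties broken alphabetically)
    return [kw for _, kw in sorted(gaps)]


# Hypothetical example data
competitor = {
    "crm software": 0.9,
    "crm software for small law firms": 0.2,
    "best crm for real estate teams": 0.35,
    "free crm with email tracking": 0.7,
}
mine = {"CRM software", "best CRM for real estate teams"}
print(find_long_tail_gaps(competitor, mine))
```

With this sample data, only "crm software for small law firms" survives: the other competitor keywords are already in your list, too short, or too contested.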

5 Reasons Why Traditional Methods Fall Short

"But wait," you might say, "can't I just manually check my competitors' ads occasionally?"

While manual monitoring is better than nothing, here's why it's not enough in today's fast-moving PPC landscape:

- Google Ads auctions happen in real time

- Competitors can change strategies multiple times daily

- Your visible SERP is personalized and location-specific

- Manual checking doesn't scale across multiple competitors

- You miss crucial patterns in competitor behavior

Pro Tip: Before we move on, start by listing your top 5 competitors and the specific metrics you most want to track. At ScrapingBee, we've developed a comprehensive Python web scraping guide that can help you automate this process. In our experience working with major advertisers, the most successful monitoring systems combine real-time data collection with intelligent parsing.

Understanding Google Ads Competitor Analysis: A Data-Driven Framework Used by 1,000+ Marketers

When I first started analyzing Google Ads competitors for clients' campaigns, I felt like a detective with half the clues missing. Between that client work and my years of developing web scraping tools, I've learned that while Google intentionally limits what advertisers can see about each other, there are powerful ways to build a clear picture of your competitive landscape. Let's break down exactly what's possible.

What Data Can You Actually Access?

First, let's clear up a common misconception: while you can't see everything your competitors are doing, our experience with automated data extraction shows you can gather more intelligence than you might think. Here's what's typically available:

| Directly Observable Data | Indirectly Observable Data | Hidden Data (Requires Advanced Methods) |
|---|---|---|
| Ad copy and messaging | Approximate bid strategies | Exact bid amounts |
| Ad extensions being used | Estimated daily budgets | Quality Score |
| Landing page content and offers | Target audience segments | Conversion rates |
| Ad positions for your targeted keywords | Testing patterns | Return on ad spend (ROAS) |
| Impression share (via Auction Insights) | Campaign scheduling preferences | Account structure |
| Geographic targeting patterns | | |
| Device targeting preferences | | |

Legal and Ethical Considerations in Competitor Analysis

Before we dive deeper, let's address the elephant in the room: the line between competitive research and questionable practices. From my experience helping numerous businesses implement ethical web scraping practices, here's what I've learned about staying on the right side of that line:

| ✅ Perfectly Fine | ❌ Off Limits |
|---|---|
| Analyzing visible ad copy and positions | Using automated clicks to drain competitor budgets |
| Using Google's built-in competitor tools | Creating fake accounts to access competitor data |
| Scraping publicly available ad data | Impersonating competitors to gain information |
| Tracking competitor impression share | Violating terms of service of analysis tools |
| Monitoring landing pages | Scraping private or protected data |

4 Key Metrics That Drive ROI: Learnings from 1,000+ Campaigns

From our experience helping businesses monitor competitor data at scale, here are the metrics that actually move the needle:

| Metric Category | Metrics |
|---|---|
| Share of Voice Metrics | Impression share, top-of-page rate, overlap rate, position above rate |
| Message and Offer Analytics | Value proposition changes, promotional patterns, call-to-action evolution, extension strategies |
| Targeting Insights | Keyword coverage, geographic focus, device preferences, audience segments |
| Strategic Indicators | Budget allocation patterns, dayparting strategies, seasonal adjustments, testing frequency |
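Several of these strategic indicators, dayparting in particular, can be estimated from repeated SERP checks. This sketch assumes you log a timestamp plus the list of advertiser domains in the top ads for every check; the domains and times below are made up. If a competitor's presence drops sharply at certain hours, that may point to an ad schedule or an exhausted daily budget, though a handful of checks per hour is only a rough signal.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List, Tuple


def hourly_presence(
    observations: List[Tuple[datetime, List[str]]], domain: str
) -> Dict[int, float]:
    """Share of checks, per hour of day, in which `domain` had a top ad."""
    checks: Dict[int, int] = defaultdict(int)
    hits: Dict[int, int] = defaultdict(int)
    for checked_at, domains in observations:
        checks[checked_at.hour] += 1
        if domain in domains:
            hits[checked_at.hour] += 1
    return {hour: hits[hour] / checks[hour] for hour in sorted(checks)}


# Hypothetical log: (time of check, advertiser domains seen in the top ads)
observations = [
    (datetime(2024, 3, 4, 9, 0), ["rival.io", "you.com"]),
    (datetime(2024, 3, 5, 9, 0), ["rival.io"]),
    (datetime(2024, 3, 4, 22, 0), ["you.com"]),
    (datetime(2024, 3, 5, 22, 0), ["you.com", "rival.io"]),
]
print(hourly_presence(observations, "rival.io"))
```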

Pro Tip: Start small, but be consistent. Through our work with major advertisers, we've found it's better to reliably track five key metrics than to attempt to track everything. For automated Google Ads monitoring, our Google Search API lets you track competitor ads and positions with a single API call. I've seen too many businesses give up on competitor analysis because they tried to boil the ocean.

Google Ads Competitor Analysis: 4 Battle-Tested Methods

After exploring why monitoring your Google Ads competitors is crucial and understanding what data we can actually access, let's dive into the methods that actually work. I've arranged these from simple to sophisticated, so you can start where you're comfortable and scale up as needed.

Method 1: Leveraging Google's Native Tools

Let me tell you something that took me years to fully appreciate while building automated scraping solutions: Google actually gives you powerful competitive insights right out of the box – you just need to know where to look and how to interpret the data. Let's dive into the tools I use to spy on competitors (completely legally, of course!).

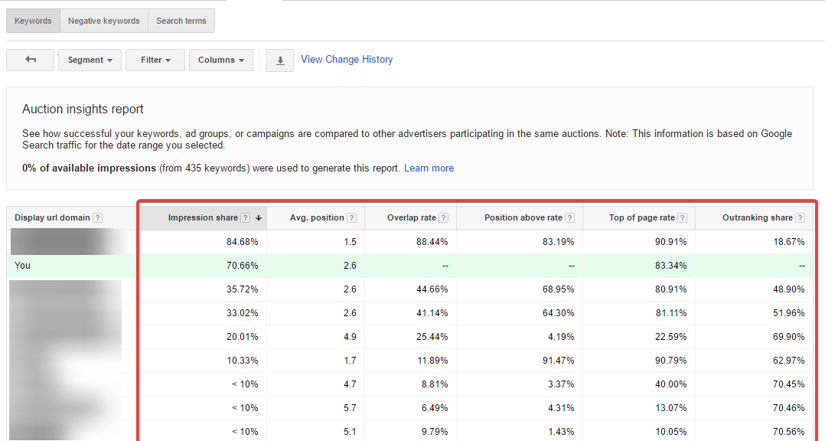

Google Ads Auction Insights Report: Converting Data into Actionable Intelligence

The Google Ads Auction Insights report works like a radar for your competitors' activity, but I've noticed most advertisers barely scratch its surface. Here's how to extract maximum intelligence from it.

3 Key Metrics

Impression Share:

- What it really means: The percentage of times your ad showed up compared to when it could have shown

- Track this weekly to spot competitors ramping up their budgets

Overlap Rate:

- What it reveals: How often a competitor's ad shows up alongside yours

- Strategic use: Identify your direct auction competitors

Position Above Rate:

- Hidden insight: Shows which competitors consistently rank above you (Ad Rank reflects ad quality as well as bids, so this isn't purely a measure of outbidding)

- Action item: Use this to adjust your bidding strategy
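Auction Insights calculates these numbers from Google's own auction data. If you also log SERP snapshots yourself, you can approximate overlap rate and position above rate, plus a simple presence rate as a loose stand-in for impression share. The sketch below assumes each snapshot is the ordered list of advertiser domains seen in the top ads for one search; `you.com` and the rival domains are hypothetical. Because snapshots sample only a sliver of real auctions, treat the output as directional rather than as a match for Google's figures.

```python
from typing import Dict, List


def approximate_auction_metrics(
    snapshots: List[List[str]], your_domain: str
) -> Dict[str, Dict[str, float]]:
    """Approximate presence, overlap and position-above rates from SERP snapshots.

    Each snapshot is the ordered list of advertiser domains in the top ads
    for one search (position 1 first).
    """
    total = len(snapshots)
    your_snapshots = [s for s in snapshots if your_domain in s]
    competitors = {d for s in snapshots for d in s if d != your_domain}
    results = {}
    for domain in sorted(competitors):
        present = sum(1 for s in snapshots if domain in s)
        both = [s for s in your_snapshots if domain in s]
        above = sum(1 for s in both if s.index(domain) < s.index(your_domain))
        results[domain] = {
            # Share of sampled searches where this competitor appeared at all
            "presence_rate": present / total if total else 0.0,
            # Of the searches where you appeared, how often they appeared too
            "overlap_rate": len(both) / len(your_snapshots) if your_snapshots else 0.0,
            # Of the searches where both appeared, how often they ranked above you
            "position_above_rate": above / len(both) if both else 0.0,
        }
    return results


snapshots = [
    ["rival-a.com", "you.com", "rival-b.com"],
    ["you.com", "rival-a.com"],
    ["rival-b.com", "rival-a.com"],
    ["rival-a.com", "you.com"],
]
for domain, metrics in approximate_auction_metrics(snapshots, "you.com").items():
    print(domain, metrics)
```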

Let me show you a quick example of how to automate Auction Insights data collection using the Google Ads API:

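```python
# Using the Google Ads API (official google-ads Python client) to fetch Auction Insights.
# Field names follow the Google Ads API reference; auction insight metrics may
# require additional API access, so confirm availability for your developer token.
from google.ads.googleads.client import GoogleAdsClient
from google.ads.googleads.errors import GoogleAdsException


def fetch_auction_insights(client: GoogleAdsClient, customer_id: str, campaign_id: int) -> list:
    # Selecting segments.date requires a finite date range in the WHERE clause
    query = f"""
        SELECT
          campaign.id,
          segments.date,
          segments.auction_insight_domain,
          metrics.auction_insight_search_impression_share,
          metrics.auction_insight_search_overlap_rate,
          metrics.auction_insight_search_position_above_rate
        FROM campaign
        WHERE campaign.id = {int(campaign_id)}
          AND segments.date DURING LAST_30_DAYS
    """

    google_ads_service = client.get_service("GoogleAdsService")
    request = client.get_type("SearchGoogleAdsRequest")
    request.customer_id = customer_id  # e.g. "1234567890", without dashes
    request.query = query

    try:
        response = google_ads_service.search(request=request)
        results = []
        for row in response:
            results.append({
                "campaign_id": row.campaign.id,
                "date": row.segments.date,
                "competitor_domain": row.segments.auction_insight_domain,
                "impression_share": row.metrics.auction_insight_search_impression_share,
                "overlap_rate": row.metrics.auction_insight_search_overlap_rate,
                "position_above_rate": row.metrics.auction_insight_search_position_above_rate,
            })
        return results
    except GoogleAdsException as e:
        print(f"Error fetching auction insights: {e}")
        raise
```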

Pro Tip: Through our experience with large-scale web scraping, we've found that creating a custom dashboard combining data from all these tools is essential. The magic happens when you see how these metrics interact with each other. You can consider using our Make integration to automate this dashboard creation.

Turning Auction Insights Into Action

Let me share how one of our clients used this data to save in their first month. By tracking these metrics weekly, they noticed something interesting:

```python
# Example insights from the data
weekly_insights = {
    "Monday-Friday": {"avg_impression_share": 0.45, "avg_position_above": 0.65},
    "Weekend": {"avg_impression_share": 0.75, "avg_position_above": 0.35},
}
```

Their competitors were consistently dropping their bids on weekends, likely assuming lower conversion rates. But here's the kicker - our client's data showed their weekend conversions were just as valuable. By maintaining their bids while competitors pulled back, they:

- Increased weekend impression share by 30%

- Reduced average CPC by 25%

- Maintained conversion volume

The key was combining these insights with actual campaign performance data. Here's a simple framework you can use:

Track Weekly Patterns:

- Monitor impression share changes by day

- Note when competitors get aggressive

- Identify periods of opportunity

Cross-Reference With Performance:

- Compare competitor presence with your CPCs

- Look for correlations with conversion rates

- Find sweet spots where you can outmaneuver competitors
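Both steps of this framework can be prototyped in a few lines. The sketch below assumes a hypothetical daily export with a date, one competitor's impression share, and your own average CPC (the field names and numbers are made up). It averages the competitor's impression share for weekdays and weekends, then computes a Pearson correlation between their presence and your CPC.

```python
from datetime import date
from statistics import mean
from typing import Dict, List


def weekday_weekend_split(rows: List[dict]) -> Dict[str, float]:
    """Average competitor impression share on weekdays vs. weekends."""
    weekday = [r["competitor_is"] for r in rows if r["date"].weekday() < 5]
    weekend = [r["competitor_is"] for r in rows if r["date"].weekday() >= 5]
    return {
        "Monday-Friday": round(mean(weekday), 3) if weekday else 0.0,
        "Weekend": round(mean(weekend), 3) if weekend else 0.0,
    }


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation (-1 to 1); assumes neither series is constant."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical daily export: competitor impression share vs. your average CPC
rows = [
    {"date": date(2024, 3, 4), "competitor_is": 0.60, "your_cpc": 2.40},   # Monday
    {"date": date(2024, 3, 5), "competitor_is": 0.62, "your_cpc": 2.50},   # Tuesday
    {"date": date(2024, 3, 8), "competitor_is": 0.58, "your_cpc": 2.35},   # Friday
    {"date": date(2024, 3, 9), "competitor_is": 0.30, "your_cpc": 1.80},   # Saturday
    {"date": date(2024, 3, 10), "competitor_is": 0.28, "your_cpc": 1.75},  # Sunday
]
print(weekday_weekend_split(rows))
corr = pearson([r["competitor_is"] for r in rows], [r["your_cpc"] for r in rows])
print(f"Competitor presence vs. your CPC: r = {corr:.2f}")
```

A strong positive correlation like the one in this made-up sample suggests your clicks get more expensive when that competitor shows up more, but correlation alone doesn't prove cause, so check it against your conversion data before moving bids.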

Remember, these native Google tools are like having X-ray vision into your competitors' strategies - you just need to know where to look. And the best part? It's completely free.

Google Ads Keyword Planner and Performance Planner: Extract 2X More Competitive Intelligence

Google Ads Keyword Planner: 5 Advanced Competitor Intelligence Techniques


Most people use Keyword Planner for basic keyword research, but it's also a goldmine for competitive intelligence. Here's what to look for:

Competitive Density Analysis:

- Monitor the competition column for each keyword using automated tracking systems

- Create historical tracking to spot emerging market trends

- Set up alerts for sudden competition changes in your core keywords

Bid Landscape Intelligence:

- Use our data parsing techniques to analyze suggested bid ranges

- Identify keywords where competitors are potentially overbidding

- Track bid range fluctuations to predict competitor budget shifts

Seasonal Pattern Recognition:

- Map keyword competition levels against historical data

- Identify when competitors typically increase their aggression

- Prepare counter-strategies before peak seasons

Geographic Opportunity Analysis:

- Compare competition levels across different locations

- Spot markets where competitors are under-investing

- Identify regional arbitrage opportunities

Long-tail Competitive Gaps:

- Discover keywords with high intent but low competition

- Track new competitors entering your niche

- Monitor emerging trend keywords in your industry
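For the "set up alerts for sudden competition changes" idea, a minimal approach is to save each keyword's competition index (0-100) and high-range top-of-page bid from periodic Keyword Planner exports, then flag large moves between two snapshots. The snapshot format, the 20-point index threshold, and the 25% bid threshold below are assumptions to tune for your own market.

```python
from typing import Dict, List


def competition_alerts(
    previous: Dict[str, dict],
    current: Dict[str, dict],
    index_threshold: int = 20,
    bid_threshold: float = 0.25,
) -> List[str]:
    """Flag keywords whose competition or top-of-page bid moved sharply.

    Each snapshot maps keyword -> {"competition_index": 0-100,
    "top_bid_high": float}, e.g. taken from periodic Keyword Planner exports.
    """
    alerts = []
    for keyword, now in current.items():
        before = previous.get(keyword)
        if before is None:
            continue  # no baseline yet for a newly tracked keyword
        old_index, new_index = before["competition_index"], now["competition_index"]
        if abs(new_index - old_index) >= index_threshold:
            alerts.append(f"{keyword}: competition index {old_index} -> {new_index}")
        old_bid, new_bid = before["top_bid_high"], now["top_bid_high"]
        if old_bid > 0 and abs(new_bid - old_bid) / old_bid >= bid_threshold:
            change = (new_bid - old_bid) / old_bid
            alerts.append(f"{keyword}: top-of-page bid (high) changed {change:+.0%}")
    return alerts


# Hypothetical snapshots taken a week apart
last_week = {
    "project management software": {"competition_index": 55, "top_bid_high": 8.00},
    "gantt chart tool": {"competition_index": 30, "top_bid_high": 3.00},
}
this_week = {
    "project management software": {"competition_index": 80, "top_bid_high": 8.40},
    "gantt chart tool": {"competition_index": 32, "top_bid_high": 4.20},
}
for alert in competition_alerts(last_week, this_week):
    print(alert)
```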

Let me share a quick example of how powerful this can be. One of our e-commerce clients used this approach during Black Friday season:

| Time Period | Competitor Action | My Strategy | Result |
|---|---|---|---|
| Early November | Ramped up broad match keywords | Focused on high-intent long-tail | Saved 40% on CPCs |
| Mid-November | Increased mobile bids | Identified desktop opportunities | Increased conversions by 25% |
| Black Friday | Maximum aggression | Targeted off-peak hours | Maintained ROAS while others overspent |

The key wasn't just seeing the data - it was spotting the patterns and acting before everyone else caught on.

Performance Planner: 3 Advanced Strategies for Market Intelligence

This is one of Google's most underutilized tools for competitive analysis. I combine it with our JavaScript scenario capabilities for deeper insights:

Advanced Market Trend Analysis:

- Create automated tracking of CPC changes across your market

- Build predictive models for seasonal competition

- Set up alerts for unexpected competitive pressure points

Strategic Budget Planning:

- Analyze market saturation levels through historical data

- Identify optimal timing for budget increases

- Plan defensive strategies against competitor budget expansions

Competitive Opportunity Scoring:

- Develop a scoring system for keyword opportunities

- Track competitor presence in different market segments

- Identify under-served areas in your market
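One way to sketch a "scoring system for keyword opportunities" is a single number that rewards demand and penalizes competition and click cost. The formula below (monthly searches * (1 - competition index / 100) / top-of-page bid) is a hypothetical starting point rather than a Google metric, and the keyword data is made up; weight it with your own conversion rates before relying on it.

```python
from typing import Dict, List, Tuple


def opportunity_score(monthly_searches: int, competition_index: int, top_bid: float) -> float:
    """Hypothetical score: search demand, discounted by competition and click cost."""
    if top_bid <= 0:
        raise ValueError("top_bid must be positive")
    openness = 1 - competition_index / 100  # 0 = saturated, 1 = uncontested
    return monthly_searches * openness / top_bid


def rank_keywords(keywords: Dict[str, Tuple[int, int, float]]) -> List[Tuple[str, float]]:
    """Return (keyword, score) pairs, best opportunities first."""
    scored = [(kw, round(opportunity_score(*stats), 1)) for kw, stats in keywords.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


keywords = {
    # keyword: (monthly searches, competition index 0-100, top-of-page bid in $)
    "hr software": (12000, 90, 15.0),
    "hr software for nonprofits": (900, 20, 4.0),
    "employee onboarding checklist tool": (1500, 45, 5.0),
}
for keyword, score in rank_keywords(keywords):
    print(f"{keyword}: {score}")
```

In this example the high-volume head term ranks last, because a near-saturated auction and a $15 click erase most of its volume advantage.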

Pro Tip: In my experience, the real power of Google Ads Keyword Planner and Performance Planner lies in cross-referencing their data. I've found that competitors often leave their seasonal strategies visible in the historical data - they'll typically ramp up spending 2-3 weeks before major industry events or shopping seasons. This insight alone has helped save thousands in bidding wars by adjusting timing. I've also noticed that when a keyword's competition level drops while its search volume stays high, it often signals that a competitor has shifted budget rather than truly abandoned the keyword. These are the moments to strike!

Method 2: Smart SERP Analysis At Scale (How A Client Increased Conversions by 47%)

Through years of analyzing thousands of search results with our Google Search API, I've learned that effective competitor analysis in Google Ads requires both speed and precision. Let me share a story that illustrates why.

Last month, a client's conversion rates were tanking despite maintaining their usual ad positions. All their fancy tools showed "normal" metrics. But when we analyzed SERP data programmatically across different locations and times... boom! Their competitors had completely shifted their messaging to focus on economic uncertainty and "recession-proof" solutions. The data trend was clear once we had comprehensive SERP monitoring in place.

Why Traditional SERP Analysis Falls Short

Think you're seeing the same ads as your customers? Think again. When you search for your target keyword, you're seeing a version of the results personalized to your location, search history, device, and other signals Google uses. Not exactly helpful for competitor analysis, right?

4 Reasons Why Automated SERP Analysis Drives 3X Better Results

As experts in ecommerce data scraping, we've found that automated & systematic SERP analysis delivers the best results:

- Comprehensive Market View: Monitor ads across multiple locations and times

- Pattern Recognition: Spot shifts in competitor messaging before they impact your performance

- Real-Time Intelligence: Get notified of significant changes immediately

- Data-Driven Decisions: Base strategy on comprehensive data, not guesswork
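Because every searcher sees a personalized SERP, a systematic setup samples the same keyword from several locations and at several times of day. A simple way to plan that coverage is to generate the full matrix of checks up front, as in this sketch. The `country_code` key mirrors the kind of location parameter SERP APIs typically accept, but treat it as an assumption and check your provider's documentation for the exact name.

```python
from itertools import product
from typing import Dict, List, Union


def build_check_schedule(
    keywords: List[str], countries: List[str], hours: List[int]
) -> List[Dict[str, Union[str, int]]]:
    """Create one monitoring job per keyword x country x hour-of-day combination."""
    return [
        # "country_code" is an assumed parameter name; check your SERP API's docs
        {"search": keyword, "country_code": country, "hour": hour}
        for keyword, country, hour in product(keywords, countries, hours)
    ]


schedule = build_check_schedule(
    keywords=["enterprise software", "erp for manufacturing"],
    countries=["us", "gb"],
    hours=[9, 13, 20],  # morning, midday and evening checks (local time)
)
print(len(schedule))
print(schedule[0])
```

A scheduler such as cron can then run each job at its hour. Keep in mind that the request count grows multiplicatively with every keyword, country, and time slot you add.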

Building Your Automated SERP Analysis System

Let's be honest - nobody wants to spend hours manually checking search results. I've been there, mindlessly copying and pasting into spreadsheets until my eyes crossed. But what if we could make this process smarter?

While we've written extensively about Google search scraping with Python, today I'll show you a quick, streamlined approach specifically for monitoring Google Ads.

Let's see how to set up a smart monitoring system using our Google SERP API endpoint, step by step.

Setting Up the Monitor

```python
import requests
from datetime import datetime
from typing import Dict, Optional

import pandas as pd


class GoogleAdsMonitor:
    def __init__(self, api_key: str):
        self.api_key = api_key
        self.base_url = "https://app.scrapingbee.com/api/v1/store/google"

    def monitor_ads(self, keyword: str) -> Optional[Dict]:
        """
        Monitor Google Ads for a specific keyword

        Args:
            keyword: Search term to monitor

        Returns:
            Dictionary containing ad data and search metadata, or None on error
        """
        params = {
            "api_key": self.api_key,
            "search": keyword,
            "nb_results": "100",  # Optional: number of results to request
        }
```

Core Monitoring Function

```python
        try:
            # A timeout keeps a stalled request from hanging the monitor
            response = requests.get(self.base_url, params=params, timeout=60)
            response.raise_for_status()
            data = response.json()

            # Extract just the ad-related data
            return {
                'search_parameters': {
                    'keyword': keyword,
                    'timestamp': datetime.now().isoformat()
                },
                'top_ads': data.get('top_ads', []),  # Ads at the top of results
                'bottom_ads': data.get('bottom_ads', [])  # Ads at the bottom of results, if any
            }
        except requests.RequestException as e:
            print(f"Error monitoring ads: {e}")
            return None
```

Change Tracking System

```python
    def track_changes(self, data: Dict, history_file: str = 'ad_history.csv') -> pd.DataFrame:
        """
        Track changes in ad positions and messaging over time

        Args:
            data: Ad data from monitor_ads()
            history_file: CSV file to store historical data

        Returns:
            DataFrame containing historical ad data
        """
        if not data or 'top_ads' not in data:
            return pd.DataFrame()
```

Creating New Record

```python
        new_record = {
            'date': datetime.now().strftime('%Y-%m-%d'),
            'keyword': data['search_parameters']['keyword'],
            'total_top_ads': len(data['top_ads']),
            'total_bottom_ads': len(data.get('bottom_ads', [])),
        }

        # Add details for each top ad
        for i, ad in enumerate(data['top_ads']):
            new_record.update({
                f'ad_{i+1}_title': ad.get('title', ''),
                f'ad_{i+1}_url': ad.get('url', ''),
                f'ad_{i+1}_description': ad.get('description', '')
            })
```

Updating History

```python
        try:
            history_df = pd.read_csv(history_file)
        except FileNotFoundError:
            history_df = pd.DataFrame()

        updated_df = pd.concat([history_df, pd.DataFrame([new_record])], ignore_index=True)
        updated_df.to_csv(history_file, index=False)
        return updated_df
```

Analysis Helper

```python
def print_ad_insights(data: Optional[Dict]) -> None:
    """Helper function to print the current ad landscape"""
    if not data or 'top_ads' not in data:
        print("No ad data available")
        return

    print("\nGoogle Ads Analysis")
    print(f"Keyword: {data['search_parameters']['keyword']}")
    print("=" * 50)
    print("\nTop Ads:")
    for i, ad in enumerate(data['top_ads'], 1):
        print(f"\n{i}. {ad.get('title', 'No title')}")
        print(f"   URL: {ad.get('url', 'No URL')}")
        print(f"   Description: {ad.get('description', 'No description')}")
```

Putting It All Together

```python
def main():
    # Initialize the monitor
    monitor = GoogleAdsMonitor('YOUR-API-KEY')

    # Monitor ads for a target keyword
    keyword = "enterprise software"
    results = monitor.monitor_ads(keyword)

    if results:
        # Track changes over time
        history = monitor.track_changes(results)

        # Print current insights
        print_ad_insights(results)


if __name__ == "__main__":
    main()
```

Now that we've broken down the code structure, let's see it in action. Here's how to get started with basic monitoring:

```python
# Initialize the monitor
monitor = GoogleAdsMonitor('YOUR-API-KEY')

# Start tracking ads for your keywords
results = monitor.monitor_ads("enterprise software")

# Save historical data
history = monitor.track_changes(results)

# Print the current ad landscape
print_ad_insights(results)
```

When you run the code above, you'll see something like this:

```
Google Ads Analysis
Keyword: enterprise software
==================================================

Top Ads:

1. Enterprise Software Solutions | Built for Growth
   URL: example.com/enterprise
   Description: Cloud-based enterprise software that scales with your business. 24/7 support. Free demo.
```

Pro Tip: We've found that checking ads during business hours (especially 9 AM - 11 AM local time) yields the most insights, as this is when competitors typically adjust their campaigns. In my experience, Monday mornings often reveal new campaign strategies.

Turning SERP Data Into Action: My 3-Step Framework

Look, all this code and automation is great (and we love building advanced screen scraping solutions), but here's what really matters - turning this data into actionable insights:

- Consistency Over Complexity:
  - Don't try to track everything; pick 3-5 key competitors
  - Monitor at regular intervals (daily or weekly)
  - Focus on changes that affect your bottom line
- Smart Documentation:
  - Track ad copy changes and position shifts systematically
  - Note seasonal patterns and timing
  - Automate data collection with our no-code web scraper
- Action Over Analysis:
  - Don't get paralyzed by data
  - Respond to significant changes within 24-48 hours
  - Test your counter-moves in small batches
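To track ad copy changes and position shifts systematically, you can compare two consecutive `top_ads` snapshots from the monitor above. This sketch assumes each ad is a dict with `title` and `url` keys (the fields `track_changes` stores) and identifies advertisers by domain, which is a simplification since one advertiser can occasionally show more than one ad. The helper names and example ads are hypothetical.

```python
from typing import Dict, List
from urllib.parse import urlparse


def _domain(url: str) -> str:
    """Extract a lowercase domain, tolerating URLs without a scheme."""
    if "//" not in url:
        url = "//" + url
    return urlparse(url).netloc.lower().removeprefix("www.")


def diff_ads(previous: List[Dict], current: List[Dict]) -> List[str]:
    """Describe new, moved, rewritten and disappeared ads between two snapshots."""
    before = {_domain(ad.get("url", "")): (pos, ad) for pos, ad in enumerate(previous, 1)}
    after = {_domain(ad.get("url", "")): (pos, ad) for pos, ad in enumerate(current, 1)}
    changes = []
    for domain, (pos, ad) in after.items():
        if domain not in before:
            changes.append(f"NEW: {domain} at position {pos}")
            continue
        old_pos, old_ad = before[domain]
        if old_pos != pos:
            changes.append(f"MOVED: {domain} {old_pos} -> {pos}")
        if old_ad.get("title") != ad.get("title"):
            changes.append(f"NEW COPY: {domain} now says '{ad.get('title')}'")
    for domain in before:
        if domain not in after:
            changes.append(f"GONE: {domain}")
    return changes


yesterday = [
    {"title": "Enterprise Software Solutions | Built for Growth", "url": "example.com/enterprise"},
    {"title": "Top-Rated ERP Platform", "url": "https://www.rival.io/erp"},
]
today = [
    {"title": "Recession-Proof ERP for Lean Teams", "url": "https://www.rival.io/erp"},
    {"title": "Enterprise Software Solutions | Built for Growth", "url": "example.com/enterprise"},
    {"title": "Scale Without Limits", "url": "https://newcomer.com"},
]
for change in diff_ads(yesterday, today):
    print(change)
```

Only the lines this prints need a human decision, which makes it a natural trigger for the 24-48 hour response window.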

Pro Tip: Start with monitoring your top 3 competitors' ads during your most profitable hours. Even this focused approach can reveal patterns that transform your campaign performance.

Method 3: Using Third-Party Competitor Analysis Tools (With Real ROI Data)

There comes a point when SERP analysis and custom scripts, as previously discussed, aren't enough. Maybe you're managing multiple client accounts, or your competitors are getting more sophisticated by the day. That's when third-party tools come into play.

But here's the thing - choosing the right tool for Google Ads competitor analysis is tricky. After spending thousands of dollars testing various platforms (so you don't have to), let me break down what actually works.

4 Leading PPC & Competitor Analysis Tools: Quick Data-Driven Comparison

Instead of a generic list, let's look at what each major player excels at:

| Tool | Sweet Spot | Limitations | Best For | Monthly Cost |
|---|---|---|---|---|
| SEMrush | Comprehensive PPC data, great UI | Historical data can lag | Agencies, medium to large businesses | $139.95 - $499.95 |
| SpyFu | Competitor keyword research | Limited geo-targeting | Small to medium businesses | $39 - $79 |
| Ahrefs | Overall search marketing | PPC features are secondary | SEO-focused teams that need PPC insights | $129 - $1,499 |
| iSpionage | Deep PPC analysis | Learning curve can be steep | PPC specialists | $59 - $299 |

But these pricing tiers don't tell the whole story. Let me share what I've learned from actually using these tools.

1. SEMrush

What It's Actually Great For:

- Discovering competitor keywords you hadn't thought of

- Analyzing ad copy variations

- Estimating competitor budgets

Hidden Gem Feature: Their position tracking combined with ad history

Not So Great: Real-time analysis - data can be 2-3 weeks old

2. SpyFu

What It's Actually Great For:

- Historical ad performance data

- Finding profitable keywords your competitors have used long-term

- Understanding competitor testing patterns

Hidden Gem Feature: The "Kombat" tool that shows keyword overlap between competitors

Not So Great: Local market analysis, especially outside the US

3. Ahrefs

What It's Actually Great For:

- Understanding organic vs paid keyword opportunities

- Content gap analysis for PPC

- Finding keywords that convert in both channels

Not So Great: Pure PPC analysis - it's primarily an SEO tool

4. iSpionage

What It's Actually Great For:

- Landing page monitoring

- Ad copy intelligence

- Campaign budget analysis

Hidden Gem Feature: Their ad effectiveness score

Not So Great: Interface can be overwhelming for beginners

Pro Tip: Through my experience with enterprise clients, I've found that combining these tools with our web scraping platform provides the most comprehensive coverage. Start with our free trial to see how real-time data can enhance your existing tools.

5 Data-Driven Tips for Maximum ROI (Based on 500+ Implementations)

Here's the thing - having access to these tools is one thing; knowing how to use them effectively is another. Let me share some practical strategies.

Tip 1: ROI Measurement Framework

Here's a simple framework I use to measure the ROI of these tools:

| Before Tool Implementation | After Tool Implementation |
|---|---|
| Track current CPC, CTR, and conversion rates | Monitor reduction in CPC |
| Document time spent on manual analysis | Track new profitable keywords found |
| Note missed opportunities | Calculate time saved |
| | Measure improvement in key metrics |
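The before/after comparison can be rolled into a single monthly ROI figure. This minimal sketch assumes your click volume stays roughly constant (so an X% CPC reduction saves about X% of spend) and values the time saved at an hourly rate you choose; the function name and all inputs in the example are hypothetical.

```python
def tool_roi(
    monthly_ad_spend: float,
    cpc_reduction: float,
    hours_saved: float,
    hourly_rate: float,
    tool_cost: float,
) -> float:
    """Monthly ROI of a competitor-analysis tool, as a fraction (1.0 == 100%).

    Assumes click volume stays constant, so a CPC reduction of X% saves
    roughly X% of ad spend; analyst time is valued at `hourly_rate`.
    """
    if tool_cost <= 0:
        raise ValueError("tool_cost must be positive")
    spend_saved = monthly_ad_spend * cpc_reduction
    time_value = hours_saved * hourly_rate
    return (spend_saved + time_value - tool_cost) / tool_cost


# Hypothetical inputs: $20k/month spend, 10% lower CPCs,
# 20 analyst hours saved at $50/hour, $300/month tool
roi = tool_roi(20_000, 0.10, 20, 50, 300)
print(f"Monthly ROI: {roi:.0%}")
```

With these placeholder inputs, $2,000 in CPC savings plus $1,000 of time saved against a $300 tool works out to a 900% monthly ROI; swap in your own before/after measurements.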

Tip 2: Solving the "Data Overload" Challenge

You log in, see tons of data, and... freeze. Here's how to avoid this:

- Start with ONE key metric (I recommend Impression Share)

- Focus on your top 3 competitors only

- Set aside 30 minutes each Monday for analysis

- Create a simple dashboard for just the essentials

Tip 3: Strategic Tool Integration

Ever signed up for multiple tools thinking more data = better decisions? I've been there. Instead:

- Test one tool thoroughly for at least 3 months

- Document specific use cases and ROI

- Only add another tool when you hit clear limitations

Tip 4: Accurate Data Interpretation

This is a big one. For example, SEMrush might show a competitor spending $50K/month, but that figure is a modeled estimate, not the competitor's actual spend. Here's a more reliable approach:

- Cross-reference data across tools

- Verify key insights with manual checks

- Focus on trends rather than absolute numbers

- Always consider seasonal factors
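Cross-referencing and focusing on trends can be combined: take the median of several tools' spend estimates for each month (so one outlier doesn't dominate), then look at the month-over-month change rather than the absolute figure. The tool names and numbers below are hypothetical.

```python
from statistics import median
from typing import Dict, List


def consensus_trend(estimates: Dict[str, List[float]]) -> List[float]:
    """Month-over-month change of the median spend estimate across tools.

    `estimates` maps tool name -> monthly spend estimates, oldest first,
    with the same number of months for every tool.
    """
    months = zip(*estimates.values())  # regroup the values by month
    medians = [median(month) for month in months]
    return [
        round((curr - prev) / prev, 3)
        for prev, curr in zip(medians, medians[1:])
    ]


estimates = {
    "tool_a": [40_000, 44_000, 52_000],
    "tool_b": [25_000, 27_000, 33_000],
    "tool_c": [50_000, 55_000, 61_000],
}
print(consensus_trend(estimates))
```

The tools disagree by tens of thousands of dollars on the absolute budget, yet all three show spend rising, and the median trend (about 10%, then about 18%) is the signal worth acting on.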

Tip 5: Automated Workflow Integration

Here's a practical weekly routine that works:

Monday Morning: Check major changes

- New competitor keywords

- Significant budget shifts

- Ad copy changes

Wednesday: Deep dive analysis

- Landing page updates

- A/B test results

- Performance trends

Friday: Planning and adjustments

- Update bid strategies

- Plan next week's tests

- Document insights

Cost-Benefit Analysis: Are These Tools Worth It?

Let me break this down based on real numbers I've seen:

| Business Tier | Tool Cost | Typical ROI | Verdict |
|---|---|---|---|
| Small Business ($1-5k monthly ad spend) | $100-200/month | Time saved: 10-15 hours/month; CPC reduction: 5-15%; new opportunities: 2-3 profitable keywords/month | Usually not worth it. Stick to manual analysis and free tools. |
| Medium Business ($5-50k monthly ad spend) | $200-500/month | Time saved: 20-30 hours/month; CPC reduction: 10-25%; campaign improvements: $1,000-3,000/month in saved spend | Worth it if you're active in competitive markets. |
| Enterprise ($50k+ monthly ad spend) | $500-1,500/month | Automated monitoring across hundreds of keywords; strategic insights worth $5,000-15,000/month; competitive advantage in fast-moving markets | Essential for maintaining market position. |
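A quick sanity check on these verdicts is the break-even point: the CPC reduction a tool must deliver just to pay for itself. This sketch ignores time savings (a conservative simplification) and assumes click volume stays constant, so the spend saved equals monthly spend times the CPC reduction; the spend and cost pairs are illustrative.

```python
def break_even_cpc_reduction(tool_cost: float, monthly_ad_spend: float) -> float:
    """CPC reduction (as a fraction) at which ad savings exactly cover the tool cost."""
    if monthly_ad_spend <= 0:
        raise ValueError("monthly_ad_spend must be positive")
    return tool_cost / monthly_ad_spend


# Illustrative (monthly spend, monthly tool cost) pairs
for spend, cost in [(2_000, 150), (20_000, 300), (100_000, 1_000)]:
    needed = break_even_cpc_reduction(cost, spend)
    print(f"${spend:,}/month spend, ${cost}/month tool: break-even at {needed:.1%} lower CPC")
```

A $2,000/month account needs a 7.5% CPC reduction just to cover a $150 tool, while a $20,000/month account needs only 1.5% to cover a $300 tool, which is why the math tilts toward larger budgets.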

Integration Capabilities: Quick Strengths vs. Weaknesses Comparison

This is crucial - a tool is only as good as how well it fits into your workflow. Here's what I've found after testing various integrations:

| Tool | Strengths | Weaknesses |
|---|---|---|
| SEMrush | ✅ Strong API documentation ✅ Looker Studio (formerly Google Data Studio) integration ✅ Custom report scheduling | ❌ Limited webhook options |
| SpyFu | ✅ Excel/CSV exports ✅ Basic API access | ❌ Limited third-party integrations ❌ No real-time alerts |
| Others (Ahrefs, iSpionage) | ✅ Data export options | ❌ Limited automation capabilities ❌ Basic reporting only |

Method 4: Building a Custom PPC Monitoring System

Ever tried to fit a square peg into a round hole? That's often what it feels like using third-party PPC tools for specific monitoring needs. Sure, they're great for general analysis, but sometimes you need something that fits your exact requirements.

Why Build Custom? Data-Driven Decision Framework

Let's be honest about when you should (and shouldn't) build your own monitoring system:

| Build Custom When | Don't Build Custom When |
|---|---|
| You need real-time monitoring (not 2-week-old data) | You need basic competitive analysis only |
| You're tracking specific markets or regions | You lack technical resources |
| You have unique reporting requirements | You need instant results |
| Existing tools miss crucial data points you need | Your budget is under $1,000/month |
| You're spending more on tools than you're getting in value | |

Setting Up Your Tech Stack: What You Really Need

Before we dive into the code, let's talk about what you'll actually need:

| Category | Requirements |
|---|---|
| Basic Infrastructure | A server or cloud instance to run your scripts, reliable proxy management, a data storage solution, an error handling system |
| Technical Skills | Python (intermediate level), basic HTML understanding, database management, API integration experience |

Pro Tip: If you're new to APIs, check out our API for Dummies guide - it'll give you the foundational knowledge you need for this project. For those coming from a web scraping background, you might want to review our comprehensive Python web scraping tutorial to brush up on best practices.

Building Your Monitoring System: A 2-Step Implementation

Let's build something real that you can use. I'll walk you through creating a quick-start monitoring system using Python and Selenium.

Pro Tip: New to web automation? Check out our Python and Selenium tutorial to get up to speed with the basics.

Step 1: Setting Up Your Environment

First, let's get our workspace ready with the tools we'll actually need:

# requirements.txt
selenium==4.15.2
webdriver-manager==4.0.1
python-dateutil==2.8.2
pandas==2.1.3
psycopg2-binary==2.9.9  # Optional: the example below stores data in SQLite; only needed if you move to PostgreSQL
requests==2.31.0

Here's what each of these packages brings to our detective toolkit:

| Package | What it Does | Why We Need It |
|---|---|---|
| Selenium | Our browser automation superhero | Handles the actual Google searches and ad extraction |
| Webdriver Manager | The setup wizard | No more manual ChromeDriver hassles |
| Python Dateutil | Time wizard | Helps track when competitors make changes |
| Pandas | The data wrangler | Makes analyzing competitor patterns a breeze |
| Psycopg2 | Database communicator | Stores our findings for long-term analysis |
| Requests | Web communication expert | Handles any additional API calls we might need |

Trust me, I've tried dozens of package combinations over the years, and this setup hits the sweet spot between reliability and simplicity.

Step 2: Building the Core Monitoring System

Here's where things get interesting. We'll create a system that can:

- Handle multiple search queries

- Work across different regions

- Store results reliably

- Handle errors gracefully

One of the biggest challenges you'll face is managing your IP rotation. While we'll cover the basics here, I highly recommend checking out our detailed guide on Setting up Rotating Proxies with Selenium and Python to ensure your monitoring system stays reliable.
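To make the rotation idea concrete, here is a minimal round-robin sketch, assuming you already have a list of proxy endpoints (the addresses below are placeholders). `ProxyRotator` is a hypothetical helper: it only builds Chrome's `--proxy-server` command-line argument, which you would add to a fresh `Options` object before each `webdriver.Chrome(...)` launch. Chrome's switch generally does not accept inline username/password credentials, so authenticated proxies need a different setup.

```python
from itertools import cycle
from typing import Iterable, Iterator


class ProxyRotator:
    """Hands out proxies in round-robin order (hypothetical helper)."""

    def __init__(self, proxies: Iterable[str]):
        proxy_list = list(proxies)
        if not proxy_list:
            raise ValueError("At least one proxy is required")
        self._pool: Iterator[str] = cycle(proxy_list)

    def next_chrome_argument(self) -> str:
        # Chrome reads its proxy from the --proxy-server command-line switch
        return f"--proxy-server={next(self._pool)}"


# Placeholder endpoints; replace them with your provider's addresses
rotator = ProxyRotator(["http://10.0.0.1:8080", "http://10.0.0.2:8080"])
first = rotator.next_chrome_argument()
second = rotator.next_chrome_argument()
third = rotator.next_chrome_argument()  # wraps back around to the first proxy
```

Round-robin is the simplest policy; if one proxy starts failing, you would want to drop it from the pool rather than keep cycling back to it.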

Importing Dependencies

from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from datetime import datetime
from dataclasses import dataclass
from enum import Enum
import sqlite3
import pandas as pd
import logging
import json
import time
from typing import List, Dict, Optional
import asyncio

Defining Alert Configuration

class AlertPriority(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"

@dataclass
class Alert:
    message: str
    priority: AlertPriority
    competitor: str
    change_type: str
    timestamp: datetime

Initializing Core Monitor

class AdMonitor:
    def __init__(self):
        # Set up logging and browser options
        logging.basicConfig(
            level=logging.INFO,
            format='%(asctime)s - %(levelname)s - %(message)s',
            handlers=[logging.FileHandler('ad_monitor.log'), logging.StreamHandler()]
        )
        self.logger = logging.getLogger(__name__)
        self.options = self._get_browser_options()

    def _get_browser_options(self) -> Options:
        options = Options()
        options.add_argument('--headless')      # No visible browser window
        options.add_argument('--disable-gpu')
        options.add_argument('--no-sandbox')    # Often required inside containers
        # Real Chrome user agents also end with "(KHTML, like Gecko) Chrome/<version> Safari/537.36"
        options.add_argument('--user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36')
        return options

Implementing Ad Monitoring Logic

    def monitor_ads(self, keywords: List[str]) -> Dict:
        results = {}
        for keyword in keywords:
            try:
                self.logger.info(f"Monitoring ads for keyword: {keyword}")
                ads = self._get_ads_for_keyword(keyword)
                results[keyword] = {'timestamp': datetime.now().isoformat(), 'ads': ads}
            except Exception as e:
                self.logger.error(f"Error monitoring {keyword}: {str(e)}")
            time.sleep(2)  # Avoid aggressive scraping, including after a failed attempt
        return results
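The fixed two-second pause in `monitor_ads` spaces out requests, but a transient failure (a timeout, a blocked proxy) still loses that keyword for the whole cycle. A common pattern is retrying with exponential backoff plus a little random jitter. This is a generic sketch; `retry_with_backoff` is a hypothetical helper, not part of Selenium, and the delay values are illustrative.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    func: Callable[[], T],
    retries: int = 3,
    base_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func, retrying on any exception with exponentially growing delays."""
    for attempt in range(retries + 1):
        try:
            return func()
        except Exception:
            if attempt == retries:
                raise  # Out of retries: surface the last error to the caller
            # 2s, 4s, 8s, ... plus up to 1s of jitter so retries don't line up
            delay = base_delay * (2 ** attempt) + random.uniform(0, 1)
            sleep(delay)
    raise ValueError("retries must be >= 0")  # Only reached if the loop never ran


# Example: a flaky call that fails twice before succeeding
calls = {"count": 0}

def flaky() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("simulated timeout")
    return "ok"

delays = []  # Record delays instead of actually sleeping
result = retry_with_backoff(flaky, retries=3, base_delay=2.0, sleep=delays.append)
```

Inside `monitor_ads`, you could wrap the scrape as `retry_with_backoff(lambda: self._get_ads_for_keyword(keyword))`; the injectable `sleep` parameter exists only so the behaviour can be checked without waiting.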

Defining Ad Extraction Process

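    def _get_ads_for_keyword(self, keyword: str) -> List[Dict]:
        driver = webdriver.Chrome(options=self.options)
        try:
            driver.get(f'https://www.google.com/search?q={keyword}')
            # Raises TimeoutException if no ad block ("tads") appears within 10 seconds;
            # monitor_ads() catches and logs that for the keyword
            ads_container = WebDriverWait(driver, 10).until(
                EC.presence_of_element_located((By.ID, "tads"))
            )
            ads = []
            # Google changes its SERP markup often: verify these class names in your
            # browser's developer tools before relying on them
            for ad in ads_container.find_elements(By.CLASS_NAME, "ads-ad"):
                ads.append({
                    # '.ads-visurl' is the ad's visible URL rather than its headline text;
                    # store_ads() relies on this when it extracts the domain
                    'headline': ad.find_element(By.CSS_SELECTOR, '.ads-visurl').text,
                    'description': ad.find_element(By.CSS_SELECTOR, '.ads-creative').text,
                    'position': len(ads) + 1
                })
            return ads
        finally:
            driver.quit()  # Always close the browser, even if extraction fails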
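The f-string URL in `_get_ads_for_keyword` passes the raw keyword straight through, so a query containing `&` or `#` would be cut short. A safer sketch URL-encodes the query and, since the system is meant to work across regions, optionally adds Google's `gl` (country) and `hl` (interface language) parameters. `build_search_url` is a hypothetical helper; treat the locale parameters as hints, because Google also tailors results to the requesting IP's location, so your proxy's location matters too.

```python
from typing import Dict, Optional
from urllib.parse import urlencode


def build_search_url(keyword: str, gl: Optional[str] = None, hl: Optional[str] = None) -> str:
    """Build a Google search URL with an encoded query and optional locale hints."""
    params: Dict[str, str] = {"q": keyword}
    if gl:
        params["gl"] = gl  # Two-letter country code, e.g. "us" or "de"
    if hl:
        params["hl"] = hl  # Interface language, e.g. "en"
    # urlencode uses quote_plus: spaces become "+" and "&" becomes "%26"
    return f"https://www.google.com/search?{urlencode(params)}"


us_url = build_search_url("web scraping api", gl="us", hl="en")
tricky_url = build_search_url("r&d tools")
```

You would then call `driver.get(build_search_url(keyword, gl="us"))` in place of the f-string.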

Creating Data Storage System

class AdDataStore:
    def __init__(self, db_path='ad_monitoring.db'):
        self.db_path = db_path
        self._setup_database()

    def _setup_database(self):
        with sqlite3.connect(self.db_path) as conn:
            conn.execute("""
                CREATE TABLE IF NOT EXISTS ad_data (
                    id INTEGER PRIMARY KEY AUTOINCREMENT,
                    keyword TEXT, headline TEXT, description TEXT,
                    position INTEGER, timestamp DATETIME,
                    competitor_domain TEXT
                )
            """)

Implementing Data Storage Logic

    def store_ads(self, keyword: str, ads: list):
        with sqlite3.connect(self.db_path) as conn:
            for ad in ads:
                try:
                    # 'headline' holds the visible URL (e.g. "https://www.example.com › page"):
                    # drop any scheme, then keep only the host part
                    url = ad['headline'].split('://')[-1]
                    domain = url.split('/')[0].split(' ')[0].lower()
                    conn.execute("""
                        INSERT INTO ad_data
                        (keyword, headline, description, position, timestamp, competitor_domain)
                        VALUES (?, ?, ?, ?, ?, ?)
                    """, (keyword, ad['headline'], ad['description'],
                          ad['position'], datetime.now().isoformat(sep=' '), domain))
                except Exception as e:
                    logging.error(f"Error storing ad: {e}")
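Parsing the competitor's domain from the visible URL with string splits is fragile, because Google's ad labels often show a scheme and a breadcrumb (for example `https://www.example.com › pricing`). A more defensive alternative leans on the standard library's `urlparse`. `extract_domain` is a hypothetical helper, and the input formats are assumptions about what the visible-URL element contains.

```python
from urllib.parse import urlparse


def extract_domain(display_url: str) -> str:
    """Normalize an ad's visible URL to a bare domain such as 'example.com'."""
    parts = display_url.strip().split()
    if not parts:
        return ""
    first = parts[0]  # Drop breadcrumb text such as "› pricing"
    if "://" not in first:
        first = "http://" + first  # urlparse only finds the host after a scheme
    host = urlparse(first).hostname or ""  # .hostname is already lowercased
    return host.removeprefix("www.")  # str.removeprefix needs Python 3.9+
```

Stripping `www.` means `www.example.com` and `example.com` land in the same competitor bucket, which keeps the analyzer's `PARTITION BY competitor_domain` from splitting one advertiser in two.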

Building Analysis System

class AdAnalyzer:
    def __init__(self, data_store: AdDataStore):
        self.data_store = data_store

    def detect_position_changes(self, days=1):
        # LAG() fetches each competitor's previous position for the same keyword
        # (window functions need SQLite 3.25+). Timestamps are stored with
        # datetime.now(), i.e. local time, so the cutoff uses local time too.
        query = """
            SELECT competitor_domain, keyword, position, timestamp,
                   LAG(position) OVER (PARTITION BY competitor_domain, keyword
                                       ORDER BY timestamp) AS prev_position
            FROM ad_data
            WHERE timestamp >= datetime('now', 'localtime', ?)
            ORDER BY timestamp DESC
        """
        with sqlite3.connect(self.data_store.db_path) as conn:
            df = pd.read_sql_query(query, conn, params=(f'-{days} days',))
        if df.empty:
            return None
        # Positive values mean the competitor moved up (e.g. from position 4 to 2)
        df['position_change'] = df['prev_position'] - df['position']
        return df[df['position_change'].abs() >= 2].copy()
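To see what the `LAG(...) OVER (PARTITION BY ...)` window in `detect_position_changes` produces, here is a self-contained run against an in-memory SQLite database with three made-up observations. It needs SQLite 3.25 or newer (the first release with window functions), which recent Python builds bundle.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE ad_data (competitor_domain TEXT, keyword TEXT, position INTEGER, timestamp TEXT)"
)
# Made-up sample: rival.com climbs from position 4 to 1 across three checks
conn.executemany(
    "INSERT INTO ad_data VALUES (?, ?, ?, ?)",
    [
        ("rival.com", "proxy service", 4, "2024-01-01 06:00:00"),
        ("rival.com", "proxy service", 3, "2024-01-01 12:00:00"),
        ("rival.com", "proxy service", 1, "2024-01-01 18:00:00"),
    ],
)
rows = conn.execute("""
    SELECT position,
           LAG(position) OVER (PARTITION BY competitor_domain, keyword
                               ORDER BY timestamp) AS prev_position
    FROM ad_data
    ORDER BY timestamp
""").fetchall()
conn.close()

# Same arithmetic as the analyzer: previous minus current, positive = moved up
changes = [prev - pos if prev is not None else None for pos, prev in rows]
```

With the analyzer's `>= 2` threshold, only the 3 → 1 jump would be reported; the first row has no earlier observation, so its change is empty (NaN once pandas loads it).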

Establishing Alert System

class AlertSystem:
    def __init__(self):
        self._alert_buffer = []

    def create_alert(self, analyzer: AdAnalyzer) -> List[Alert]:
        alerts = []
        position_changes = analyzer.detect_position_changes()
        if position_changes is not None:
            for _, change in position_changes.iterrows():
                # Jumps of 4+ positions are high priority; 2-3 positions are medium
                priority = AlertPriority.HIGH if abs(change['position_change']) >= 4 else AlertPriority.MEDIUM
                alerts.append(Alert(
                    # int() because pandas stores prev_position as a float (it can hold NaN)
                    message=f"{change['competitor_domain']} moved from position {int(change['prev_position'])} to {int(change['position'])} for '{change['keyword']}'",
                    priority=priority,
                    competitor=change['competitor_domain'],
                    change_type='position',
                    timestamp=datetime.now()
                ))
        # Keep a rolling 6-hour window (21,600 seconds) of alerts. Note that callers
        # receive the whole window, including alerts reported in earlier cycles.
        self._alert_buffer = [
            alert for alert in self._alert_buffer
            if (datetime.now() - alert.timestamp).total_seconds() < 21600
        ] + alerts
        return self._alert_buffer
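Because `create_alert` hands back its whole six-hour buffer on every call, the same competitor move can be reported again each cycle. One way to actually cut alert fatigue is to remember when each alert was last sent and suppress repeats within a cooldown. `AlertDeduplicator` is a hypothetical helper; it works with any object exposing `competitor`, `change_type` and `message` attributes, such as the `Alert` dataclass.

```python
from datetime import datetime, timedelta
from types import SimpleNamespace
from typing import Dict, List, Tuple


class AlertDeduplicator:
    """Suppress alerts already sent within a cooldown window (hypothetical helper)."""

    def __init__(self, cooldown: timedelta = timedelta(hours=6)):
        self.cooldown = cooldown
        self._last_sent: Dict[Tuple[str, str, str], datetime] = {}

    def filter_new(self, alerts: List, now: datetime) -> List:
        fresh = []
        for alert in alerts:
            key = (alert.competitor, alert.change_type, alert.message)
            last = self._last_sent.get(key)
            if last is None or now - last >= self.cooldown:
                self._last_sent[key] = now  # Start a new cooldown for this alert
                fresh.append(alert)
        return fresh


# Demo with a stand-in alert object
dedup = AlertDeduplicator()
move = SimpleNamespace(competitor="rival.com", change_type="position",
                       message="rival.com moved from position 4 to 1")
t0 = datetime(2024, 1, 1, 6, 0)
first_run = dedup.filter_new([move], now=t0)
repeat_run = dedup.filter_new([move], now=t0 + timedelta(hours=1))
later_run = dedup.filter_new([move], now=t0 + timedelta(hours=7))
```

In `run_monitoring_cycle`, you could pass the list returned by `create_alert` through `filter_new(..., now=datetime.now())` before logging.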

Creating Main System Integration

class PPCMonitoringSystem:
    def __init__(self):
        self.ad_monitor = AdMonitor()
        self.data_store = AdDataStore()
        self.analyzer = AdAnalyzer(self.data_store)
        self.alert_system = AlertSystem()

    async def run_monitoring_cycle(self, keywords: List[str]):
        logging.info(f"Starting monitoring cycle at {datetime.now()}")
        try:
            results = self.ad_monitor.monitor_ads(keywords)
            for keyword, data in results.items():
                self.data_store.store_ads(keyword, data['ads'])
            alerts = self.alert_system.create_alert(self.analyzer)
            for alert in alerts:
                if alert.priority == AlertPriority.HIGH:
                    logging.info(f"High Priority Alert: {alert.message}")
        except Exception as e:
            logging.error(f"Error in monitoring cycle: {e}")

Setting Up Main Execution

if __name__ == "__main__":
    keywords_to_monitor = ["web scraping api", "proxy service", "data extraction"]
    ppc_system = PPCMonitoringSystem()
    asyncio.run(ppc_system.run_monitoring_cycle(keywords_to_monitor))

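Heads up: the cycle is declared async so it plugs into an asyncio event loop, but `monitor_ads()` itself is synchronous. Keywords are checked one after another, with a 2-second pause between them, rather than by multiple detectives working different cases simultaneously. That pacing is gentle on Google, but a long keyword list will take a while.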
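`run_monitoring_cycle` checks keywords one at a time because `monitor_ads()` is synchronous. If you do want some concurrency, one option on Python 3.9+ is to push each blocking call onto a worker thread with `asyncio.to_thread` and cap parallelism with a semaphore; each Selenium call launches its own Chrome, and more parallel requests also raise the odds of being rate-limited, so keep the cap small. This sketch uses a stand-in `fake_scrape` function instead of the real `_get_ads_for_keyword`, so it runs without a browser.

```python
import asyncio
import time
from typing import Callable, Dict, List


async def scrape_concurrently(
    keywords: List[str],
    scrape: Callable[[str], List[dict]],
    max_parallel: int = 2,
) -> Dict[str, List[dict]]:
    """Run a blocking scrape function for several keywords in worker threads."""
    semaphore = asyncio.Semaphore(max_parallel)

    async def run_one(keyword: str) -> List[dict]:
        async with semaphore:  # At most max_parallel scrapes (browsers) at once
            return await asyncio.to_thread(scrape, keyword)

    results = await asyncio.gather(*(run_one(k) for k in keywords))
    return dict(zip(keywords, results))  # gather preserves input order


def fake_scrape(keyword: str) -> List[dict]:
    # Stand-in for AdMonitor._get_ads_for_keyword: sleeps instead of loading Google
    time.sleep(0.2)
    return [{"headline": f"ad for {keyword}", "position": 1}]


start = time.perf_counter()
results = asyncio.run(scrape_concurrently(["a", "b", "c", "d"], fake_scrape, max_parallel=2))
elapsed = time.perf_counter() - start  # Roughly 0.4s instead of 0.8s sequentially
```

With the real classes, you could pass `self._get_ads_for_keyword` as `scrape`; since it opens and quits its own driver on every call, threads don't share browser state.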

Pro Tip: Start with monitoring your top 10 keywords every 6 hours. Scale up only when you're getting value from the initial data.
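A minimal way to act on that tip is an asyncio loop that runs one cycle, then sleeps. `run_every` is a hypothetical helper; `max_cycles` exists only so the sketch can terminate in a test. For production, cron or a task scheduler may serve you better, since they survive process restarts. The loop assumes the cycle handles its own errors, as `run_monitoring_cycle` does.

```python
import asyncio
from typing import Awaitable, Callable, List, Optional

SIX_HOURS = 6 * 60 * 60  # Seconds between cycles


async def run_every(
    cycle: Callable[[List[str]], Awaitable[None]],
    keywords: List[str],
    interval_seconds: float = SIX_HOURS,
    max_cycles: Optional[int] = None,
) -> int:
    """Await cycle(keywords) repeatedly, sleeping interval_seconds between runs."""
    completed = 0
    while max_cycles is None or completed < max_cycles:
        await cycle(keywords)
        completed += 1
        if max_cycles is None or completed < max_cycles:
            await asyncio.sleep(interval_seconds)  # Skip the sleep after the final cycle
    return completed


# Demo with a stand-in cycle; with the article's classes you would pass
# ppc_system.run_monitoring_cycle instead
seen: List[List[str]] = []

async def demo_cycle(keywords: List[str]) -> None:
    seen.append(list(keywords))

count = asyncio.run(run_every(demo_cycle, ["proxy service"], interval_seconds=0, max_cycles=3))
```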

3 Critical Challenges in PPC Monitoring (And How to Solve Them)

Ever tried to run a marathon without training? That's what it feels like to dive into Google Ads competitor analysis without proper preparation. Let me share some expensive lessons I've learned so you don't have to make the same mistakes.

The Data Accuracy Challenge: Turning Data into Decisions

You're staring at mountains of competitor data, unable to make clear decisions. Sound familiar? Here's your roadmap:

Start Small, Think Big:

- Focus on ROI-driving metrics that impact your bottom line first

- Monitor your top 3-5 competitors max

- Schedule fixed analysis times (Monday mornings work best)

Build a Validation System:

- Use multiple data sources

- Look for consistent patterns over time

- Focus on trends, not individual data points
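One simple way to apply "trends, not individual data points" to ad position data is to compare the average of the most recent observations with the average of the ones before them, and only react when the gap is large enough. `trend_shift` is a hypothetical helper, and the window size is an illustrative assumption, not a tuned value.

```python
from statistics import mean
from typing import List


def trend_shift(positions: List[float], window: int = 3) -> float:
    """Older-window mean minus recent-window mean (positive = moving up the page)."""
    if len(positions) < 2 * window:
        raise ValueError(f"need at least {2 * window} observations")
    older = positions[-2 * window:-window]
    recent = positions[-window:]
    return mean(older) - mean(recent)


# A single spike (position 1 once) barely moves the trend...
spiky = [4, 4, 4, 4, 1, 4]
# ...while a sustained climb does
climbing = [4, 4, 4, 2, 2, 2]
```

With a threshold of, say, 1.5 positions, only the sustained climb would be worth acting on.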

Pro Tip: Think of competitor data like weather forecasts - great for planning, but not gospel. I've seen countless marketers lose money chasing "perfect" data when "good enough" would have worked fine.

Resource Management: The Hidden Cost of Over-Analysis

Want to know the biggest mistake I see? Companies spending more time analyzing competitors than optimizing their own campaigns. Here's the framework that works:

The 80/20 Rule for PPC Success:

- Dedicate 80% of your PPC time to optimizing your own campaigns

- Invest the remaining 20% in competitor insights

- Set strict analysis timeframes (and stick to them!)

Legal Compliance: Staying Safe While Staying Informed

Let's talk about what many advertisers overlook until it's too late - legal compliance. Trust me, the last thing you want is a cease and desist letter landing in your inbox.

Key Legal Guidelines

Ad Interaction Rules:

- No automated ad clicking (even for "research")

- Avoid any actions that drain competitor budgets

- Remember: Account suspension means lost revenue

Privacy Boundaries:

- Skip personally identifiable information

- Handle location data with care

- Follow GDPR and privacy regulations

Platform Guidelines:

- Respect rate limits religiously

- Maintain authentic accounts only (don't create fake accounts)

- Keep everything above board (avoid impersonating users or competitors)
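On the scraping side, "respect rate limits" mostly means never sending requests faster than a fixed budget. Here is a minimal sketch of a minimum-interval limiter; `MinIntervalLimiter` is a hypothetical helper, the 10-second spacing is an illustrative assumption, and the clock and sleep functions are injectable so the behaviour can be checked without waiting.

```python
import time
from typing import Callable, List, Optional


class MinIntervalLimiter:
    """Block until at least `interval` seconds have passed since the last request."""

    def __init__(self, interval: float = 10.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self.interval = interval
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None

    def wait(self) -> None:
        now = self._clock()
        if self._last is not None:
            remaining = self.interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now += remaining  # We resume this much later
        self._last = now


# Demo with a fake clock so nothing actually sleeps
fake_now = [0.0]
slept: List[float] = []

def fake_sleep(seconds: float) -> None:
    slept.append(seconds)
    fake_now[0] += seconds

limiter = MinIntervalLimiter(interval=10.0, clock=lambda: fake_now[0], sleep=fake_sleep)
limiter.wait()        # First request goes out immediately
fake_now[0] += 3.0    # The next request comes only 3 seconds later...
limiter.wait()        # ...so the limiter sleeps the remaining 7 seconds
fake_now[0] += 15.0
limiter.wait()        # Enough time has passed: no sleep
```

Calling `limiter.wait()` right before each `driver.get(...)` would enforce the spacing regardless of how the surrounding loop is structured.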

Pro Tip: Before implementing any monitoring technique, ask yourself: "Would I feel comfortable explaining this to Google's legal team?" If there's any hesitation, that's your red flag.

Conclusion: Building a Sustainable Google Ads Competitor Analysis System

Let's wrap this up with a recap of forward-thinking strategies that'll keep your competitor analysis future-proof and actionable.

3 Key Tips to Future-Proof Monitoring

Tip 1: Stay Flexible

- Google changes its ad platform constantly

- Competitors evolve their strategies

- Market conditions shift rapidly

Tip 2: Embrace Smart Automation

The right automation can transform your competitive analysis. Here's where web scraping becomes invaluable. Whether you're:

- Tracking competitor price changes in real-time

- Monitoring ad positions across markets

- Analyzing emerging market trends

You need reliable data extraction and collection. Want to see how industry leaders automate their competitor monitoring? We've built our web scraping API to handle some of the toughest challenges in PPC monitoring and competitor analysis:

- Proxy rotation

- Browser fingerprinting

- Anti-bot bypassing

- JavaScript rendering

- Data extraction with AI

- Enterprise-grade reliability

Hey, here's the good part - you can test drive our API with 1,000 free API calls, no credit card needed, totally risk-free. Once you're crushing it with automation, our pricing is as straightforward as our API (seriously, see for yourself, no hidden fees or gotchas).

Ready to start automating your competitor research? Take it for a spin →

Tip 3: Build a Complete Google Ads Competitor Analysis Stack

The most successful businesses we work with typically use a combination of tools to create a comprehensive competitor analysis system for Google Ads:

Custom Scripts:

- Targeted Google Ads competitor monitoring

- Specific competitive data collection

- Automated alerts for key metrics and competitor changes

Our Google SERP API:

- Real-time SERP monitoring

- Ad copy and position tracking

- Competitor messaging analysis

Third-Party Tools:

- Broad competitor analysis in Google Ads

- Cross-channel competitor tracking

- Competitive trend analysis

Remember: While automation is crucial for modern Google Ads competitor analysis, it should enhance, not replace, human insight.

Pro Tip: Don't put all your eggs in one basket. We've found that combining different tools and approaches leads to more reliable competitive intelligence.

The Road Ahead

As we wrap up, remember that Google Ads competition analysis is a journey, not a destination. Start small, stay consistent, and scale gradually. Whether you're using a custom monitoring solution, our web scraping API for broader market research, or a combination of both, the key is to keep adapting and improving.

| Resource | What You'll Learn |
|---|---|
| No-code Competitor Monitoring with ScrapingBee and Integromat | Set up automated competitor monitoring systems without coding - perfect for marketing teams |
| Minimum Advertised Price Monitoring with ScrapingBee | Track competitor pricing strategies across multiple platforms to inform your bidding |
| Scrape Amazon Products' Price with No Code | Monitor competitor pricing on Amazon and adapt your PPC strategy accordingly |
| Send Stock Prices Update to Slack with Make and ScrapingBee | Build automated alert systems for stock price changes |
| Web Scraping Without Getting Blocked | Maintain consistent data collection for reliable competitor monitoring |

Remember, good competitor analysis isn't about copying what others do - it's about understanding the market and making smarter decisions for your business.

So, PPC warrior, what advertising insights will you uncover? What competitor strategies will you decode? Perhaps you'll build that game-changing market analysis dashboard you've been dreaming about, create an automated alert system that spots opportunities before anyone else, or develop a monitoring solution that transforms how your industry approaches competitive analysis.

Whatever your mission, remember: Our team is here to help you turn your data challenges into opportunities. From simple competitor tracking to complex market analysis, we've seen incredible success stories start with a simple decision to work smarter, not harder.

Happy monitoring! May your CPCs be low, your conversion rates be high, and your competitive advantage be unstoppable.