Picture this: It's 3 AM, and you're staring at your terminal, trying to download hundreds of data files for tomorrow's analysis. Your mouse hand is cramping from all that right-click, "Save As" action, and you're thinking there has to be a better way. (Spoiler alert: there is, and you've just found it!)

Welcome to the world of file downloads with cURL, where what seems like command-line sorcery to many is about to become your new superpower. As an automation specialist who's orchestrated thousands of automated downloads, I've seen firsthand how cURL knowledge can transform tedious download tasks into elegant, automated solutions — from simple file transfers to complex authenticated downloads that would make even seasoned developers scratch their heads.

Whether you're a data scientist bulk-downloading datasets, a system administrator automating backups, or just a web enthusiast tired of manually downloading files, you're in the right place. And trust me, I've been there – waiting for downloads like a kid waiting for Christmas morning, doing the "please don't fail now" dance with unstable connections, and yes, occasionally turning my terminal into a disco party just to make the waiting game more fun (we'll get to those fancy tricks later!).

Pro Tip: This could be the most comprehensive guide ever written about cURL: we're diving deep into real-world scenarios, sharing battle-tested strategies, and revealing those little tricks that will turn you into a download automation wizard - from simple scripts to enterprise-scale operations!

Want to download thousands of files without breaking a sweat? Need to handle authentication without exposing your credentials? Looking to optimize your downloads for speed? Hang tight...

Why Trust Our cURL Expertise?

Our Experience With File Downloads

At ScrapingBee, we don't just write about web automation; we live and breathe it every single day. Our web scraping API handles millions of requests daily, and while cURL is a great tool for basic tasks, we've learned exactly when to use it and when to reach for more specialized solutions. When you're processing that many web requests, you learn a thing or two about efficient data collection!

Real-World Web Automation Experience

Ever tried downloading or extracting data from websites and hit a wall? Trust me, you're not alone! Do these challenges sound familiar?

- Rate limiting

- IP blocking

- Authentication challenges

- Redirect chains

- SSL certificate headaches

These are exactly the hurdles you'll face when downloading files at scale. Through years of working with web automation, we've seen and implemented various solutions to these problems, many of which I'll share in this guide. Our experience isn't just theoretical – it's battle-tested across various scenarios:

| Scenario | Challenge Solved | Impact |
|---|---|---|
| E-commerce Data Collection | Automated download of 1M+ product images daily | 99.9% success rate |
| Financial Report Analysis | Secure download of authenticated PDF reports | Zero credential exposure |
| Research Data Processing | Parallel download of dataset fragments | 80% reduction in processing time |
| Media Asset Management | Batch download of high-res media | 95% bandwidth optimization |

About a year ago, I helped a client optimize their data collection pipeline, which involved downloading financial reports from various sources. By implementing the techniques we'll cover in this guide, they reduced their download time from 4 hours to just 20 minutes!

Before you think this is just another technical tutorial, let me be clear: this is your pathway to download automation mastery. While we'll cover cURL commands, scripts and techniques, you'll also learn when to use the right tool for the job. Ready to dive in?

What This Guide Covers

In this no-BS guide to cURL mastery, we're going to:

- Demystify cURL's download capabilities (because downloading files shouldn't feel like rocket science)

- Show you how to squeeze every ounce of power from those command-line options

- Take you from basic downloads to automation wizard (spoiler alert: it's easier than you think!)

- Share battle-tested strategies and scripts that'll save you hours of manual work (the kind that actually work in the real world!)

- Compare cURL with other popular tools like Wget and Requests (so you'll always know which tool fits your job best!)

But here's the real kicker - we're not just dumping commands on you. In my experience, the secret sauce isn't memorizing options, it's knowing exactly when to use them. Throughout this guide, I'll share decision-making frameworks that have saved me countless hours of trial and error.

Who This Guide is For

This guide is perfect for:

| You are a... | You want to... | We'll help you... |
|---|---|---|
| Developer/Web Enthusiast | Automate file downloads in your applications | Master cURL integration with practical code examples |
| Data Scientist | Efficiently download large datasets | Learn batch downloading and resume capabilities |
| System Admin | Manage secure file transfers | Understand authentication and secure download protocols |
| SEO/Marketing Pro | Download competitor assets for analysis | Set up efficient scraping workflows |
| Student/Researcher | Download academic papers/datasets | Handle rate limits and optimize downloads |

Pro Tip: Throughout my career, I've noticed that developers often overlook error handling in their download scripts. We'll cover robust error handling patterns that have saved me countless hours of debugging – including one instance where a single retry mechanism prevented the loss of a week's worth of data collection!

Whether you need to download 1 file or 100,000+, get ready to transform those repetitive download tasks into elegant, automated solutions. Let's dive into making your download automation dreams a reality!

cURL Basics: Getting Started With File Downloads

What is cURL?

cURL (Client URL) is like your command line's Swiss Army knife for transferring data. Think of it as a universal remote control for downloading files – whether they're sitting on a web server, FTP site, or even secure locations requiring authentication.

Why Use cURL for File Downloads? 5 Main Features

Before we dive into commands, let's understand why cURL stands out:

| Feature | Benefit | Real-World Application |
|---|---|---|
| Command-Line Power | Easily scriptable and automated | Perfect for batch processing large datasets |
| Resource Efficiency | Minimal system footprint | Great for servers and other resource-constrained environments |
| Protocol Support | Handles HTTP(S), FTP(S), SFTP (when built with SSH support), and more | Download from most common sources |
| Advanced Features | Resume downloads, authentication, proxies | Great for handling complex scenarios |
| Universal Compatibility | Works across all major platforms | Consistent experience everywhere |

Pro Tip: If you're working with more complex web scraping tasks, you might want to check out our guide on what to do if your IP gets banned. It's packed with valuable insights that apply to cURL downloads as well.

Getting Started: 3-Step Prerequisites Setup

As we dive into the wonderful world of cURL file downloads, let's get your system ready for action. As someone who's spent countless hours on scripting and automation, I can't stress enough how important a proper setup is!

Step 1: Installing cURL

First things first - let's install cURL on your system. Don't worry, it's easier than making your morning coffee!

# For the Ubuntu/Debian gang

sudo apt install curl

# Running Fedora, or RHEL/CentOS 8 and newer?

sudo dnf install curl

# Older CentOS/RHEL (7 and earlier)

sudo yum install curl

# Arch Linux

sudo pacman -S curl

After installation, want to make sure everything's working? Just run:

curl --version

If you see a version number, give yourself a high five! You're ready to roll!
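One thing worth checking while you're here: protocols such as SFTP only work if your particular curl build includes them, and the Protocols line of curl --version lists what yours supports. Here's a minimal sketch of a check you could drop into a setup script; curl_supports_protocol is our own hypothetical helper name, not a cURL feature:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of curl): succeeds if the local curl build
# lists the given protocol on the "Protocols:" line of `curl --version`.
curl_supports_protocol() {
    local proto="$1"
    curl --version \
        | awk '/^Protocols:/ { for (i = 2; i <= NF; i++) print $i }' \
        | grep -qx "$proto"
}
```

For example, curl_supports_protocol sftp || echo "No SFTP support in this curl build" warns you before a script that depends on SFTP fails halfway through.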

Step 2: Installing Optional (But Super Helpful) Tools

In my years of experience handling massive download operations, I've found these additional tools to be absolute game-changers:

# Install Python3 and pip

sudo apt install python3 python3-pip

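# Install the requests library for API interactions
# (recent Debian/Ubuntu releases may refuse system-wide pip installs;
# "sudo apt install python3-requests" or a virtual environment works instead)

pip install requests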

# JSON processing magic

sudo apt install jq

# HTML parsing powerhouse

sudo apt install html-xml-utils

Pro Tip: While these tools are great for local development, when you need to handle complex scenarios like JavaScript rendering or avoiding IP blocks, consider using our web scraping API. It handles all the heavy lifting for you!

Step 3: Setting Up Your Download Workspace

Let's set up a cozy space for all our future downloads with cURL:

mkdir ~/curl-downloads

cd ~/curl-downloads

And lastly, here's a little secret I learned the hard way - if you're planning to download files from HTTPS sites (who isn't these days?), make sure you have your certificates in order:

sudo apt install ca-certificates

Here's a quick checklist to ensure you're ready to roll:

| Component | Purpose | Why It's Important |
|---|---|---|
| cURL | Core download tool | Essential for all operations |
| Python + Requests | API interaction | Helpful for complex automation |
| jq | JSON processing | Makes handling API responses a breeze |
| CA Certificates | HTTPS support | Crucial for secure downloads |

Pro Tip: If you're planning to work with e-commerce sites or deal with large-scale downloads, check out our guide on scraping e-commerce websites. The principles there apply perfectly to file downloads too!

Now that we have our environment ready, let's dive into the exciting world of file downloads with cURL!

cURL Basic Syntax for Downloading Files

Ever tried to explain cURL commands to a colleague and felt like you were speaking an alien language? I've been there! Let's start with the absolute basics.

Here's your first and simplest cURL download command:

curl -O https://example.com/file.zip

But what's actually happening here? Let's understand the syntax:

curl [options] [URL]

Breaking this down:

- curl: the command itself
- [options]: how you want to handle the download
- [URL]: your target file's location

Pro Tip: If you're primarily interested in extracting data from websites that block automated access, I recommend checking out our web scraping API. While cURL is great for file downloads, our API excels at extracting structured data from web pages, handling all the complexity of proxies and browser rendering for you!

Ready to level up your cURL skills? Jump to:

- Advanced Techniques: Authentication & Proxies

- Batch Processing: Multiple Files Downloads

- Must-Have cURL Scripts

- cURL Troubleshooting Guide

7 Essential cURL Options (With a Bonus)

As someone who's automated downloads for everything from cat pictures to massive financial datasets, I've learned that mastering these essential commands is like getting your driver's license for the internet highway. Let's make them as friendly as that barista who knows your coffee order by heart!

Option 1: Simple File Download

Remember your first time riding a bike? Downloading files with cURL is just as straightforward (and with fewer scraped knees!). Here's your training wheels version:

curl -O https://example.com/awesome-file.pdf

But here's what I wish someone had told me years ago: the -O flag is like your responsible friend who remembers to save things under their original name (the last part of the URL, so awesome-file.pdf here). Trust me, it's saved me from having a downloads folder full of "download(1)", "download(2)", etc.!

Pro Tip: In my early days of automation, I once downloaded 1,000 files without the -O flag. Without -O or -o, cURL writes the file straight to standard output, so my terminal turned into a modern art piece of binary data. Don't be like rookie me! 😅

Option 2: Specifying Output Filename

Sometimes, you want to be in charge of naming your downloads. I get it - I have strong opinions about file names too! Here's how to take control:

curl -o my-awesome-name.pdf https://example.com/boring-original-name.pdf

Think of -o as your personal file-naming assistant. Here's a real scenario from my data collection projects:

# Real-world example from our financial data scraping

curl -o "company_report_$(date +%Y%m%d).pdf" https://example.com/report.pdf

Pro Tip: When scraping financial reports for a client, I used this naming convention to automatically organize thousands of PDFs by date. The client called it "magical" - I call it smart cURL usage!
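If you use this naming convention across many URLs, a small helper keeps the logic in one place. A minimal sketch, assuming you want to keep each file's extension and drop any query string from the URL; dated_name is a hypothetical helper, not something cURL provides:

```shell
#!/usr/bin/env bash
# Hypothetical helper: turns ".../report.pdf?token=abc" into "report_YYYYMMDD.pdf"
dated_name() {
    local url="$1"
    local path="${url%%\?*}"       # drop the query string, if any
    local base="${path##*/}"       # keep the last path segment
    local stamp
    stamp="$(date +%Y%m%d)"
    if [[ "$base" == *.* ]]; then
        # insert the date before the last extension
        printf '%s_%s.%s\n' "${base%.*}" "$stamp" "${base##*.}"
    else
        printf '%s_%s\n' "$base" "$stamp"   # no extension to preserve
    fi
}
```

Usage: curl -o "$(dated_name "$url")" "$url". Note that only the last extension is treated as one, so archive.tar.gz becomes archive.tar_YYYYMMDD.gz.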

Option 3: Managing File Names and Paths

Here's something that took me embarrassingly long to learn - cURL can be quite the organized librarian when you want it to be:

# Create directory structure automatically

curl -o "./downloads/reports/2024/Q1/report.pdf" https://example.com/report.pdf --create-dirs

# Use content-disposition header (when available)

curl -OJ https://example.com/mystery-file

| Command Flag | What It Does | When to Use It |
|---|---|---|
| --create-dirs | Creates missing directories | When organizing downloads into folders |
| -J | Uses the server's suggested filename (Content-Disposition header); combine with -O | When you trust the source's naming |
| --output-dir | Specifies the download directory (curl 7.73.0+) | For keeping downloads organized |

Pro Tip: I always add --create-dirs to my download scripts now. One time, a missing directory caused a 3 AM alert because 1,000 files had nowhere to go. Never again!

Option 4: Handling Redirects

Remember playing "Follow the Leader" as a kid? Sometimes, files play the same game! Here's how to handle those sneaky redirects:

# Follow redirects like a pro

curl -L -O https://example.com/file-that-moves-around

# See where your file leads you

curl -IL https://example.com/mysterious-file

Here's a fun fact: I once tracked a file through 7 redirects before reaching its final destination. It was like a digital scavenger hunt!
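Want the end of that scavenger hunt summarized in one line? curl's --write-out variables url_effective and num_redirects report the final URL and the number of hops. A small sketch; trace_redirects is our own name for the wrapper:

```shell
#!/usr/bin/env bash
# Follows redirects (-L), discards the body (-o /dev/null) and prints where
# the chain ended. --max-redirs caps runaway redirect loops.
trace_redirects() {
    local url="$1"
    curl -sS -L --max-redirs 10 -o /dev/null \
        -w 'final: %{url_effective}\nredirects: %{num_redirects}\nstatus: %{http_code}\n' \
        "$url"
}
```

Unlike curl -IL, this performs a normal GET and throws the body away, which helps with servers that answer HEAD requests differently; for very large files, the -IL variant is cheaper.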

Option 5: Silent Downloads

Ever needed to download files without all the terminal fanfare? Like a digital ninja, sometimes, stealth is key - especially when running automated scripts or dealing with logs:

# Complete silence (no progress or error messages)

curl -s -O https://example.com/quiet-file.zip

# Silent but shows errors (my personal favorite)

curl -sS -O https://example.com/important-file.zip

# Silent with errors redirected to a log (-S keeps error messages; plain -s would leave the log empty)

curl -sS -O https://example.com/file.zip 2>errors.log

Pro Tip: When building our automated testing suite, silent downloads with error logging saved us from sifting through 50MB log files just to find one failed download. Now, that's what I call peace and quiet!

Option 6: Showing Progress Bars

Remember those old-school download managers with fancy progress bars? We can do better:

# Default progress meter with stats (size, speed, time left) - no flag needed

curl -O https://example.com/big-file.zip

# Simple progress bar (-# is the short form of --progress-bar)

curl -# -O https://example.com/big-file.zip

# Custom summary after the transfer (my favorite for scripting)

curl -O https://example.com/big-file.zip \
  --progress-bar \
  --write-out "\nDownload completed!\nAverage Speed: %{speed_download} bytes/sec\nTime: %{time_total}s\n"

| Progress Option | Use Case | Best For |
|---|---|---|
| (default) | Detailed meter with size, speed, and time left | Large files |
| -# / --progress-bar | Clean, simple progress bar | Quick downloads, tidier logs |
| --write-out | Custom statistics after the transfer | Automation scripts |

Pro Tip: During a massive data migration project, I used custom progress formatting to create beautiful download reports. The client loved the professional touch, and it made tracking thousands of downloads a breeze!
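The same --write-out mechanism can produce that kind of download report. A minimal sketch that appends one CSV line per download; download_and_report and the report.csv default path are illustrative names, not part of cURL:

```shell
#!/usr/bin/env bash
# Appends "url,http_code,bytes,seconds" for each download to a CSV report.
REPORT_FILE="${REPORT_FILE:-report.csv}"   # illustrative default path

download_and_report() {
    local url="$1"
    # Write the header once, when the report doesn't exist yet
    [[ -f "$REPORT_FILE" ]] || echo "url,http_code,size_bytes,time_seconds" > "$REPORT_FILE"
    # -sS: quiet but keep errors; -L: follow redirects; -O: keep the remote name.
    # The body goes to the file, so stdout only carries the --write-out line.
    curl -sS -L -O \
        -w '%{url_effective},%{http_code},%{size_download},%{time_total}\n' \
        "$url" >> "$REPORT_FILE"
}
```

Call it once per URL in a loop, then open report.csv in any spreadsheet tool to spot slow or failed downloads.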

Option 7: Resume Interrupted Downloads

Picture this: You're 80% through downloading a massive dataset, and your cat unplugs your router (true story!). Here's how to save your sanity:

# Resume a partial download

curl -C - -O https://example.com/massive-dataset.zip

# Check file size before resuming

curl -I https://example.com/massive-dataset.zip

# Resume with retry logic (battle-tested version)

curl -C - --retry 3 --retry-delay 5 -O https://example.com/massive-dataset.zip
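To turn that size check into a yes/no answer, you can compare the local file size with the server's Content-Length. A sketch, assuming the server sends a Content-Length header (chunked or compressed responses may not); remote_size and is_download_complete are our own helper names:

```shell
#!/usr/bin/env bash
# Prints the last Content-Length seen, i.e. the final response after redirects.
remote_size() {
    curl -sIL "$1" \
        | tr -d '\r' \
        | awk 'tolower($1) == "content-length:" { size = $2 } END { if (size != "") print size }'
}

# Succeeds only if the local file exists and matches the remote size.
is_download_complete() {
    local url="$1" file="$2" expected actual
    [[ -f "$file" ]] || return 1
    expected="$(remote_size "$url")"
    [[ -n "$expected" ]] || return 1      # no Content-Length: can't tell
    actual="$(wc -c < "$file" | tr -d ' ')"
    [[ "$actual" -eq "$expected" ]]
}
```

Usage: is_download_complete "$url" massive-dataset.zip || curl -C - -O "$url" only resumes when something is actually missing.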

Bonus: Resume Download Script

Here's my bulletproof download script that's saved me countless times:

#!/bin/bash
# Retries the whole transfer up to 3 times; each curl run also retries
# transient errors itself (--retry) and resumes the partial file (-C -).
download_with_resume() {
    local url="$1"
    local max_retries=3
    local retry_count=0

    while (( retry_count < max_retries )); do
        if curl -C - --retry 3 --retry-delay 5 -O "$url"; then
            echo "Download completed successfully! 🎉"
            return 0
        fi
        retry_count=$((retry_count + 1))
        echo "Retry $retry_count of $max_retries... 🔄"
        sleep 5
    done
    return 1
}

# Usage
download_with_resume "https://example.com/huge-file.zip"

You're welcome!

Pro Tip: A client was losing days of work when their downloads kept failing due to random power outages. This simple cURL resume trick now saves their entire operation. He called me a wizard - little did he know it was just a smart use of cURL's resume feature!

Putting It All Together

Here's my go-to command that combines the best of everything we've learned so far:

url="https://example.com/file.zip"
filename="${url##*/}"   # file.zip, the last part of the URL

curl -C - \
  --retry 3 \
  --retry-delay 5 \
  -# \
  -o "./downloads/$(date +%Y%m%d)_${filename}" \
  --create-dirs \
  -L \
  "$url"

Pro Tip: I save this as a shell function in my .bashrc:

superdownload() { curl -C - --retry 3 --retry-delay 5 -# -o "./downloads/$(date +%Y%m%d)_$2" --create-dirs -L "$1"; }

Now you can just use:

superdownload https://example.com/file.zip custom_name

These commands aren't just lines of code - they're solutions to real problems we've faced at ScrapingBee.

Your cURL Handbook: 6 Key Options

Let's be honest - it's easy to forget command options when you need them most! Here's your cheat sheet (feel free to screenshot this one - we won't tell!):

| Option | Description | Example |
|---|---|---|
| -O | Save with the remote filename | curl -O https://example.com/file.zip |
| -o | Save with a custom filename | curl -o custom.zip https://example.com/file.zip |
| -# | Show a simple progress bar | curl -# -O https://example.com/file.zip |
| -s | Silent mode | curl -s -O https://example.com/file.zip |
| -I | Fetch headers only | curl -I https://example.com/file.zip |
| -f | Fail on HTTP errors (exit code 22, error page not saved) | curl -f -O https://example.com/file.zip |

Pro Tip: While building our web scraping API's data extraction features, we've found that mastering cURL's fundamentals can reduce complex download scripts to just a few elegant commands. Keep these options handy - your future self will thank you!

The key to mastering cURL isn't memorizing commands – it's understanding when and how to use each option effectively. Keep experimenting with these basic commands until they feel natural!
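Because -f turns HTTP errors into a non-zero exit code, your scripts can react to why a download failed. Here's a small sketch that maps a few common curl exit codes to plain-English messages; explain_curl_exit is a hypothetical helper, and the list is deliberately not exhaustive:

```shell
#!/usr/bin/env bash
# Hypothetical helper: maps common curl exit codes to a short explanation.
# The full list is in the EXIT CODES section of the curl manual.
explain_curl_exit() {
    case "$1" in
        0)  echo "success" ;;
        6)  echo "could not resolve host" ;;
        7)  echo "failed to connect to host or proxy" ;;
        22) echo "HTTP error (400 or above) returned with --fail" ;;
        28) echo "operation timed out" ;;
        33) echo "range request failed (server may not support resume)" ;;
        35) echo "TLS/SSL handshake failed" ;;
        60) echo "server certificate could not be verified" ;;
        *)  echo "curl exit code $1 (see EXIT CODES in man curl)" ;;
    esac
}
```

Pair it with curl -f: run the download, save $? right away, and pass that value to explain_curl_exit when it isn't 0.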

6 Advanced cURL Techniques: From Authentication to Proxy Magic (With a Bonus)

Remember when you first learned to ride a bike, and then someone showed you how to do a wheelie? That's what we're about to do with cURL!

Pro Tip: While the basic cURL commands we've discussed work great for simple downloads, when you're dealing with complex websites that have anti-bot protection, you might want to check out our guide on web scraping without getting blocked. The principles apply perfectly to file downloads too!

After years of building our web scraping API infrastructure and processing millions of requests, let me share our team's advanced techniques that go beyond basic cURL usage. Get ready for the cool stuff!

Technique 1: Handling Authentication and Secure Downloads

Ever tried getting into an exclusive club? Working with authenticated downloads is similar: you need the right credentials, and you need to know the secret handshake:

# Basic authentication (the classic way; pass only -u username and curl prompts for the password, keeping it out of your shell history)

curl -u username:password -O https://secure-site.com/file.zip

# Using netrc file (my preferred method for automation)

echo "machine secure-site.com login myuser password mypass" >> ~/.netrc

chmod 600 ~/.netrc # Important security step!

curl -n -O https://secure-site.com/file.zip

Pro Tip: Need to handle complex login flows? Check out our guide on how to log in to almost any website, and if you hit any SSL issues during downloads (especially with self-signed certificates), our guide on what to do if your IP gets banned includes some handy troubleshooting tips!

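Never hardcode credentials in your scripts! Here's what I use for sensitive downloads on macOS, pulling the password from the Keychain:

# Read the password from the macOS Keychain (-w prints only the password, so the username goes in front)

curl -u "myuser:$(security find-generic-password -a "$USER" -s "api-access" -w)" -O https://secure-site.com/file.zip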

From my experience dealing with authenticated downloads in production environments, I always recommend using environment variables or secure credential managers. This approach has helped me maintain security while scaling operations.
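For the environment-variable approach, one detail matters: anything passed as a command-line argument (like -u user:pass) can show up in ps output for other users on the machine. A sketch that feeds the credentials to curl as a config read from standard input (-K -) instead; DOWNLOAD_USER and DOWNLOAD_PASS are hypothetical variable names:

```shell
#!/usr/bin/env bash
# Reads credentials from (hypothetical) environment variables and hands them
# to curl as a config on stdin, so they never appear in curl's arguments.
download_with_env_creds() {
    local url="$1"
    if [[ -z "${DOWNLOAD_USER:-}" || -z "${DOWNLOAD_PASS:-}" ]]; then
        echo "Set DOWNLOAD_USER and DOWNLOAD_PASS first" >&2
        return 1
    fi
    # curl config syntax: user = "name:password"
    # (values containing " or \ would need escaping inside the quotes)
    printf 'user = "%s:%s"\n' "$DOWNLOAD_USER" "$DOWNLOAD_PASS" \
        | curl -K - -fsS -O "$url"
}
```

Usage: export the two variables from your secret manager or CI settings, then call download_with_env_creds https://secure-site.com/file.zip.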

Technique 2: Managing Cookies and Sessions

Sometimes, you need to maintain a session across multiple downloads. If you've ever worked with session-based scraping, you'll know the importance of cookie management:

# Save cookies

curl -c cookies.txt -O https://example.com/login-required-file.zip

# Use saved cookies

curl -b cookies.txt -O https://example.com/another-file.zip

# The power combo (save and use in one go)

curl -b cookies.txt -c cookies.txt -O https://example.com/file.zip

Here's a real-world script I use for handling session-based downloads:

#!/bin/bash
SESSION_HANDLER() {
    local username="$1"
    local password="$2"
    local cookie_file
    cookie_file=$(mktemp)
    local max_retries=3
    local retry_delay=2

    # Input validation
    if [[ -z "$username" || -z "$password" ]]; then
        echo "❌ Error: Username and password are required"
        rm -f "$cookie_file"
        return 1
    fi

    echo "🔐 Initiating secure session..."

    # First, log in and save cookies (--fail-with-body needs curl 7.76.0+;
    # --data-urlencode escapes special characters in the credentials)
    if ! curl -s -c "$cookie_file" \
        --connect-timeout 10 \
        --max-time 30 \
        --retry "$max_retries" \
        --retry-delay "$retry_delay" \
        --fail-with-body \
        --data-urlencode "username=${username}" \
        --data-urlencode "password=${password}" \
        "https://example.com/login" > /dev/null 2>&1; then
        echo "❌ Login failed!"
        rm -f "$cookie_file"
        return 1
    fi
    echo "✅ Login successful"

    # Verify the cookie file exists and is not empty
    if [[ ! -s "$cookie_file" ]]; then
        echo "❌ No cookies were saved"
        rm -f "$cookie_file"
        return 1
    fi

    # Now download with the session cookies
    echo "📥 Downloading protected file..."
    if ! curl -sS -b "$cookie_file" \
        -O \
        --retry "$max_retries" \
        --retry-delay "$retry_delay" \
        --connect-timeout 10 \
        --max-time 300 \
        "https://example.com/protected-file.zip"; then
        echo "❌ Download failed"
        rm -f "$cookie_file"
        return 1
    fi
    echo "✅ Download completed successfully"

    # Cleanup
    rm -f "$cookie_file"
    return 0
}

# Usage example: read credentials from environment variables
# (SITE_USER and SITE_PASS are placeholder names) instead of hardcoding them
SESSION_HANDLER "$SITE_USER" "$SITE_PASS"

Pro Tip: I once optimized a client's download process from 3 days to just 4 hours using these cookie management techniques!

Technique 3: Setting Custom Headers

Just as we handle headers in our web scraping API, here's how to make your cURL requests look legitimate and dress them up properly:

# Single header

curl -H "User-Agent: Mozilla/5.0" -O https://example.com/file.zip

# Multiple headers (production-ready version)

curl -H "User-Agent: Mozilla/5.0" \

-H "Accept: application/pdf" \

-H "Referer: https://example.com" \

-O https://example.com/file.pdf

Pro Tip: Through our experience with bypassing detection systems, we've found that proper header management can increase success rates by up to 70%!

Here's my battle-tested script that implements User-Agent rotation and simple rate limiting:

#!/bin/bash
# Define browser User-Agent strings to rotate through
USER_AGENTS=(
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0.0.0"
    "Mozilla/5.0 (Windows NT 10.0; Firefox/121.0)"
    "Mozilla/5.0 (Macintosh; Safari/605.1.15)"
)

SMART_DOWNLOAD() {
    local url="$1"
    local output="$2"
    local status

    # Select a random User-Agent
    local agent=${USER_AGENTS[$RANDOM % ${#USER_AGENTS[@]}]}

    # Execute the download with common browser headers
    curl --fail \
        -H "User-Agent: $agent" \
        -H "Accept: text/html,application/xhtml+xml,*/*" \
        -H "Accept-Language: en-US,en;q=0.9" \
        -H "Connection: keep-alive" \
        -o "$output" \
        "$url"
    status=$?   # keep curl's exit code so callers can detect failures

    # Be nice to servers
    sleep 1
    return "$status"
}

# Usage Example
SMART_DOWNLOAD "https://example.com/file.pdf" "downloaded.pdf"

Pro Tip: Add a small delay between requests to be respectful to servers. When dealing with multiple files, I typically randomize delays between 1-3 seconds.

Be nice to servers!
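The randomized 1 to 3 second delay from the tip above is easy to build with bash's built-in $RANDOM. A sketch; random_delay and polite_pause are hypothetical helper names:

```shell
#!/usr/bin/env bash
# Prints a random whole number of seconds between min and max (inclusive),
# using bash's built-in $RANDOM (0..32767).
random_delay() {
    local min="${1:-1}" max="${2:-3}"
    echo $(( min + RANDOM % (max - min + 1) ))
}

# Sleeps for a randomized, polite interval between downloads
polite_pause() {
    sleep "$(random_delay "$@")"
}
```

Call polite_pause 1 3 between SMART_DOWNLOAD calls instead of a fixed sleep, so your requests don't arrive on a perfectly regular beat.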

Technique 4: Using Proxies for Downloads

In the automation world, I've learned that sometimes, you need to be a bit sneaky (legally, of course!) with your downloads. Let me show you how to use proxies with cURL:

# Basic proxy usage

curl -x proxy.example.com:8080 -O https://example.com/file.zip

# Authenticated proxy (my preferred setup)

curl -x "username:password@proxy.example.com:8080" \

-O https://example.com/file.zip

# SOCKS5 proxy (for extra sneakiness; --socks5-hostname also resolves DNS through the proxy)

curl --socks5 proxy.example.com:1080 -O https://example.com/file.zip

Want to dive deeper? We've got detailed guides for both using proxies with cURL and using proxies with Wget - the principles are similar!
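If every command in a script should go through the same proxy, curl can also pick it up from environment variables (http_proxy, https_proxy, and no_proxy for exclusions) instead of a -x flag on every call. Note that for plain HTTP, curl only honors the lowercase http_proxy. A sketch with a hypothetical with_proxy wrapper:

```shell
#!/usr/bin/env bash
# Runs curl with proxy settings supplied through the environment.
# The variables apply only to this one curl invocation.
with_proxy() {
    local proxy="$1"; shift
    http_proxy="$proxy" https_proxy="$proxy" no_proxy="localhost,127.0.0.1" curl "$@"
}
```

Usage: with_proxy "http://username:password@proxy.example.com:8080" -O https://example.com/file.zip behaves like the -x example above.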

Pro Tip: While these proxy commands work great for basic file downloads, if you're looking to extract data from websites at scale, you might want to consider a more robust solution. At ScrapingBee, we've built our API with advanced proxy infrastructure specifically designed for web scraping and data extraction. Our customers regularly achieve 99.9% success rates when gathering data from even the most challenging websites.

Let's look at three battle-tested proxy scripts I use in production.

Smart Proxy Rotation

Let's start with my favorite proxy rotation setup. This bad boy has saved me countless times when dealing with IP-based rate limits. It not only rotates proxies but also tests them before use - because there's nothing worse than a dead proxy in production!

#!/bin/bash
# Proxy configurations with authentication (associative arrays need bash 4+)
declare -A PROXY_CONFIGS=(
    ["proxy1"]="username1:password1@proxy1.example.com:8080"
    ["proxy2"]="username2:password2@proxy2.example.com:8080"
    ["proxy3"]="username3:password3@proxy3.example.com:8080"
)

PROXY_DOWNLOAD() {
    local url="$1"
    local output="$2"
    local max_retries=3
    local retry_delay=2
    local timeout=30
    local retry
    local temp_log
    temp_log=$(mktemp)

    # Input validation
    if [[ -z "$url" ]]; then
        echo "❌ Error: URL is required"
        rm -f "$temp_log"
        return 1
    fi

    # Get proxy list keys
    local proxy_keys=("${!PROXY_CONFIGS[@]}")

    for ((retry = 0; retry < max_retries; retry++)); do
        # Select a random proxy
        local selected_proxy=${proxy_keys[$RANDOM % ${#proxy_keys[@]}]}
        local proxy_auth="${PROXY_CONFIGS[$selected_proxy]}"
        echo "🔄 Attempt $((retry + 1))/$max_retries using proxy: ${selected_proxy}"

        # Test the proxy before downloading
        if curl --connect-timeout 5 -x "$proxy_auth" \
            -s "https://api.ipify.org" > /dev/null 2>&1; then
            echo "✅ Proxy connection successful"

            # Attempt the download with the working proxy
            if curl -x "$proxy_auth" \
                --connect-timeout "$timeout" \
                --max-time $((timeout * 2)) \
                --retry 2 \
                --retry-delay "$retry_delay" \
                --fail \
                --silent \
                --show-error \
                -o "$output" \
                "$url" 2> "$temp_log"; then
                echo "✅ Download completed successfully using ${selected_proxy}"
                rm -f "$temp_log"
                return 0
            else
                echo "⚠️ Download failed with ${selected_proxy}"
                cat "$temp_log"
            fi
        else
            echo "⚠️ Proxy ${selected_proxy} is not responding"
        fi

        # Wait a little longer before each new attempt
        if ((retry < max_retries - 1)); then
            local wait_time=$((retry_delay * (retry + 1)))
            echo "⏳ Waiting ${wait_time}s before next attempt..."
            sleep "$wait_time"
        fi
    done

    echo "❌ All proxy attempts failed"
    rm -f "$temp_log"
    return 1
}

# Usage Example
PROXY_DOWNLOAD "https://example.com/file.zip" "downloaded_file.zip"

What makes this script special is its self-healing nature. If a proxy fails, it automatically tries another one. No more nighttime alerts because a single proxy went down!

SOCKS5 Power User

Now, when you need that extra layer of anonymity, SOCKS5 is your best friend. (And no, it's not the same as a VPN - check out our quick comparison of SOCKS5 and VPN if you're curious!)

Here's my go-to script when dealing with particularly picky servers that don't play nice with regular HTTP proxies:

#!/bin/bash
# SOCKS5-specific download function
SOCKS5_DOWNLOAD() {
    local url="$1"
    local output="$2"
    local socks5_proxy="$3"

    echo "🧦 Using SOCKS5 proxy: $socks5_proxy"

    if curl --socks5 "$socks5_proxy" \
        --connect-timeout 10 \
        --max-time 60 \
        --retry 3 \
        --retry-delay 2 \
        --fail \
        --silent \
        --show-error \
        -o "$output" \
        "$url"; then
        echo "✅ SOCKS5 download successful"
        return 0
    else
        echo "❌ SOCKS5 download failed"
        return 1
    fi
}

# Usage Example
SOCKS5_DOWNLOAD "https://example.com/file.zip" "output.zip" "proxy.example.com:1080"

Pro Tip: While there are free SOCKS5 proxies available, for serious automation work, I highly recommend using reliable, paid proxies. Your future self will thank you!

The beauty of this setup is its reliability. With built-in retries and proper timeout handling, it's perfect for those long-running download tasks where failure is not an option!

Batch Proxy Manager

Finally, here's the crown jewel: a batch download manager that combines our proxy magic with success/failure tracking. It works through the list one file at a time, and it's what I use when I need to download thousands of files without breaking a sweat:

#!/bin/bash
# Batch download with proxy rotation
# (relies on the PROXY_DOWNLOAD function and PROXY_CONFIGS array defined above)
BATCH_PROXY_DOWNLOAD() {
    local -a urls=("$@")
    local success_count=0
    local fail_count=0
    local url filename

    echo "📦 Starting batch download with proxy rotation..."

    for url in "${urls[@]}"; do
        filename="${url##*/}"
        if PROXY_DOWNLOAD "$url" "$filename"; then
            success_count=$((success_count + 1))
        else
            fail_count=$((fail_count + 1))
            echo "⚠️ Failed to download: $url"
        fi
    done

    echo "📊 Download Summary:"
    echo "✅ Successful: $success_count"
    echo "❌ Failed: $fail_count"
}

# Usage Example
BATCH_PROXY_DOWNLOAD "https://example.com/file1.zip" "https://example.com/file2.zip"

This script has been battle-tested with tons of downloads. The success/failure tracking has saved me hours of debugging - you'll always know exactly what failed and why!

Pro Tip: Always test your proxies before a big download job. A failed proxy after hours of downloading can be devastating! This is why our scripts include proxy testing and automatic rotation.
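The batch manager processes files sequentially. When you need real concurrency and don't need per-file proxy rotation, curl 7.66.0 and later can run transfers in parallel by itself with --parallel (-Z). A sketch; parallel_download is our own wrapper name:

```shell
#!/usr/bin/env bash
# Downloads all given URLs concurrently with curl's built-in parallel mode.
# Usage: parallel_download MAX_CONCURRENT URL...
parallel_download() {
    local max="$1"; shift
    # --parallel (-Z): run transfers concurrently (curl 7.66.0+)
    # --parallel-max: cap on simultaneous transfers
    # --remote-name-all: save every URL under its remote name, like -O for each
    # --fail: treat HTTP errors as failures instead of saving the error page
    curl --parallel --parallel-max "$max" --remote-name-all \
        --fail -sS -L "$@"
}
```

Usage: parallel_download 4 https://example.com/file1.zip https://example.com/file2.zip. Keep the concurrency modest; four parallel connections from one IP is already a lot for some servers.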

Technique 5: Implementing Smart Rate Limiting and Bandwidth Control

Remember that friend who always ate all the cookies? Don't be that person with servers! Just like how we automatically handle rate limiting in our web scraping API, here's how to be a considerate downloader:

# Limit download speed (1M = 1MB/s)

curl --limit-rate 1M -O https://example.com/huge-file.zip

# Bandwidth control with retry logic (500KB/s limit)

curl --limit-rate 500k \

--retry 3 \

--retry-delay 5 \

-C - \

-O https://example.com/huge-file.zip

Simple but effective! The -C - flag is especially crucial as it enables resume capability - your download won't start from scratch if it fails halfway!

Adaptive Rate Controller

Now, here's where it gets interesting. This next script is like a smart throttle: after a failed attempt, it lowers the speed limit, waits a bit longer each time, and resumes where it left off. I've used this to download terabytes of data without a single complaint from servers:

#!/bin/bash
adaptive_download() {
    local url="$1"
    local output="$2"
    local rate_kb=500          # starting speed limit in KB/s
    local retry_count=0
    local max_retries=3
    local backoff_delay=5

    while (( retry_count < max_retries )); do
        if curl --limit-rate "${rate_kb}k" \
            --connect-timeout 10 \
            --max-time 3600 \
            -o "$output" \
            -C - \
            "$url"; then
            echo "✅ Download successful!"
            return 0
        fi
        # Halve the speed limit on each failure and wait progressively longer
        rate_kb=$((rate_kb / 2))
        retry_count=$((retry_count + 1))
        echo "⚠️ Retry $retry_count with reduced speed: ${rate_kb}k"
        sleep $((backoff_delay * retry_count))
    done
    return 1
}

# Usage Example
adaptive_download "https://example.com/huge-file.zip" "my-download.zip"

The magic here is in the automatic speed adjustment. If the server starts struggling, we back off automatically. It's like having a sixth sense for server load!

Pro Tip: In my years of web scraping, I've found that smart rate limiting isn't just polite - it's crucial for reliable data collection. While these bash scripts work great for file downloads, if you're looking to extract data at scale from websites, I'd recommend checking out our web scraping API. We've built intelligent rate limiting into our infrastructure, helping thousands of customers gather web data reliably without getting blocked!

Technique 6: Debugging Like a Pro

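When things go wrong, start by capturing everything. This logging setup, which I've used while working with several clients, sends all of a script's output (stdout and stderr) both to the terminal and to a dated log file:

```bash
# Put this near the top of your script (LOG_DIR defaults to ./logs)
LOG_DIR="${LOG_DIR:-./logs}"
mkdir -p "$LOG_DIR"

exec 1> >(tee -a "${LOG_DIR}/download_$(date +%Y%m%d).log")
exec 2>&1
```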

Sometimes, even the best downloads with advanced methods can fail. Here's a quick debugging checklist:

Checking SSL/TLS Issues:

```bash
curl -v --tlsv1.2 -O https://example.com/file.zip
```

Verifying Server Response (headers only):

```bash
curl -I https://example.com/file.zip
```

Testing a Proxy Connection:

```bash
curl -v --proxy proxy.example.com:8080 https://example.com/file.zip >/dev/null
```

Your cURL Debugging Handbook

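| Debug Level | Command | Use Case |
|---|---|---|
| Basic | -v | General troubleshooting: request/response headers and TLS handshake |
| Timed | -v --trace-time | Spotting where a slow transfer stalls |
| Complete | --trace-ascii trace.log | Full dump of everything sent and received |
| Headers Only | -I | Quick response checking (sends a HEAD request) |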
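When a script only sees curl's exit code, translating the common codes saves a trip to the docs. Here's a minimal sketch covering a handful of the documented codes; the function name is hypothetical, and the EXIT CODES section of the curl man page has the full list:

```bash
#!/bin/bash

# Hypothetical helper: map common curl exit codes to a short explanation
curl_exit_reason() {
  case "$1" in
    0)  echo "Success" ;;
    6)  echo "Could not resolve host (check DNS or the URL)" ;;
    7)  echo "Failed to connect (server down, firewall or wrong port)" ;;
    18) echo "Partial file (transfer ended early; try resuming with -C -)" ;;
    22) echo "HTTP error returned (4xx/5xx, reported because of --fail)" ;;
    28) echo "Operation timed out" ;;
    35) echo "TLS/SSL handshake failed" ;;
    60) echo "Server certificate could not be verified" ;;
    *)  echo "curl exit code $1 (see 'man curl', EXIT CODES)" ;;
  esac
}

# Usage: curl -sS --fail -O "$url" || echo "❌ $(curl_exit_reason $?)"
```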

Pro Tip: From my experience, the best debugging is proactive, and sometimes, the problem isn't your code at all! That's why we built our Screenshot API to help you verify downloads visually before even starting your automation!

Bonus: The Ultimate Power User Setup

After years of trial and error, here's my ultimate cURL download configuration that combines all these tips into a single script:

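```bash
#!/bin/bash

# Placeholder pools: swap in your own proxies and User-Agent strings
PROXY_LIST=("http://proxy1.example.com:8080" "http://proxy2.example.com:8080")
USER_AGENTS=(
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36"
  "Mozilla/5.0 (Macintosh; Intel Mac OS X 14_0) AppleWebKit/605.1.15"
)

SMART_DOWNLOAD() {
  local url="$1"
  local output_name="$2"
  # Pick a random proxy and User-Agent from the pools above
  local proxy="${PROXY_LIST[RANDOM % ${#PROXY_LIST[@]}]}"
  local user_agent="${USER_AGENTS[RANDOM % ${#USER_AGENTS[@]}]}"

  curl -x "$proxy" \
    --limit-rate 1M \
    --retry 3 \
    --retry-delay 5 \
    -C - \
    -# \
    -H "User-Agent: $user_agent" \
    -b cookies.txt \
    -c cookies.txt \
    --create-dirs \
    -o "./downloads/$(date +%Y%m%d)_${output_name}" \
    "$url"
}

# Usage Examples
# Single file download with all the bells and whistles
SMART_DOWNLOAD "https://example.com/dataset.zip" "important_dataset.zip"

# Multiple files
for file in file1.zip file2.zip file3.zip; do
  SMART_DOWNLOAD "https://example.com/$file" "$file"
  sleep 2 # Be nice to servers
done
```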

This script has literally saved my bacon on numerous occasions. The date-based organization alone has saved me hours of file hunting. Plus, with the progress bar (-#), you'll never wonder if your download is still alive!
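One thing to keep in mind: RANDOM % n can hand out the same proxy several times in a row. If you'd rather spread requests evenly, round-robin selection is a simple alternative. This is a swapped-in technique rather than what the script above does, and pick_round_robin is a hypothetical helper:

```bash
#!/bin/bash

# Hypothetical helper: return the item at position (counter mod list length)
pick_round_robin() {
  local counter="$1"
  shift
  [ "$#" -gt 0 ] || return 1 # nothing to pick from
  local items=("$@")
  echo "${items[counter % ${#items[@]}]}"
}

PROXY_LIST=("http://proxy1.example.com:8080" "http://proxy2.example.com:8080" "http://proxy3.example.com:8080")

# Cycles proxy1, proxy2, proxy3, then back to proxy1
for i in 0 1 2 3; do
  echo "Request $i -> $(pick_round_robin "$i" "${PROXY_LIST[@]}")"
done
```

In a download loop, you'd pass a counter that increases with every request instead of using RANDOM.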

Looking to Scale Your Web Data Collection?

While these cURL techniques are powerful for file downloads, if you're looking to extract data from websites at scale, you'll need a more specialized solution. That's why we built our web scraping API – it handles all the complexities of data extraction automatically:

- Intelligent proxy rotation

- Smart rate limiting

- Automatic retry mechanisms

- Built-in JavaScript rendering

- Advanced header management, and lots more...

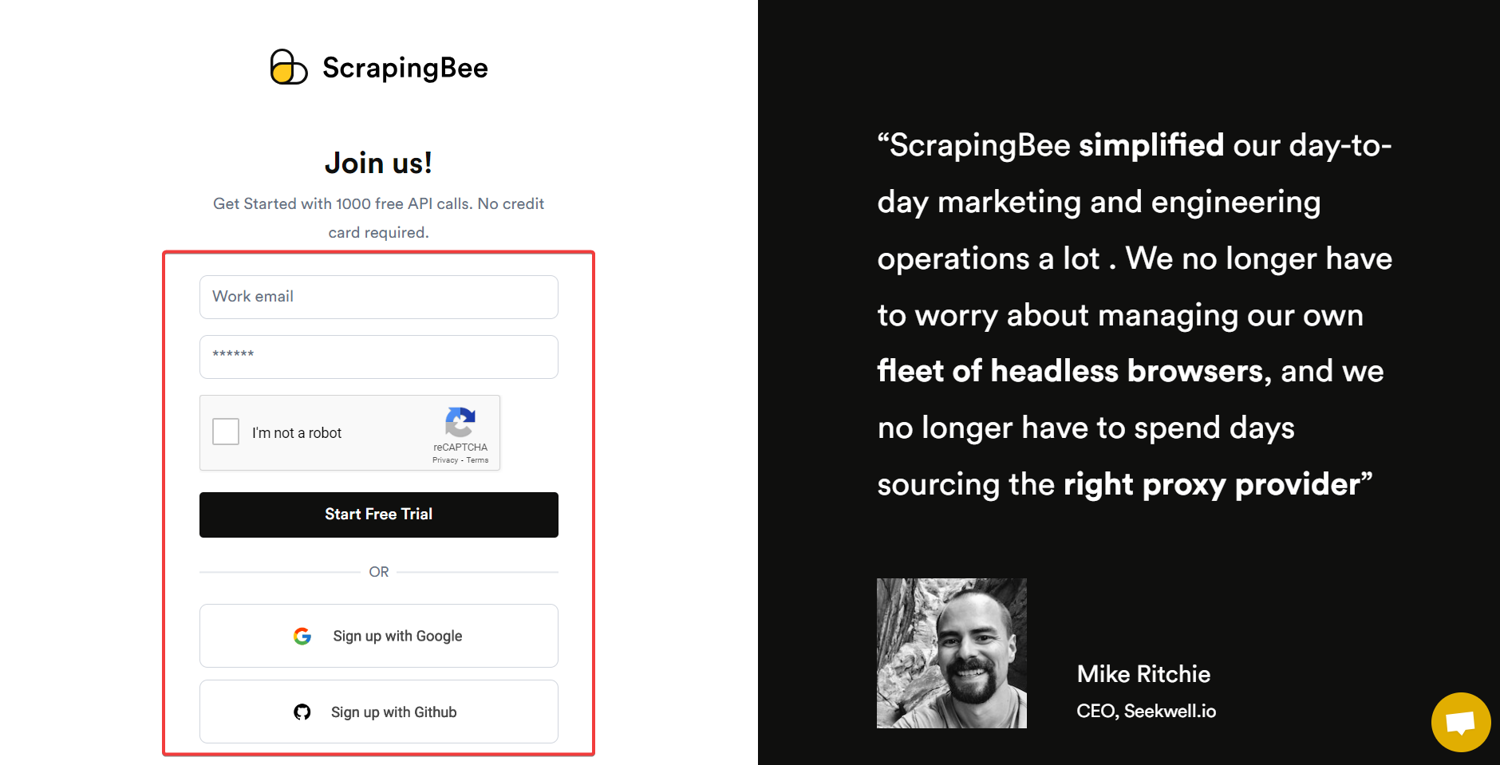

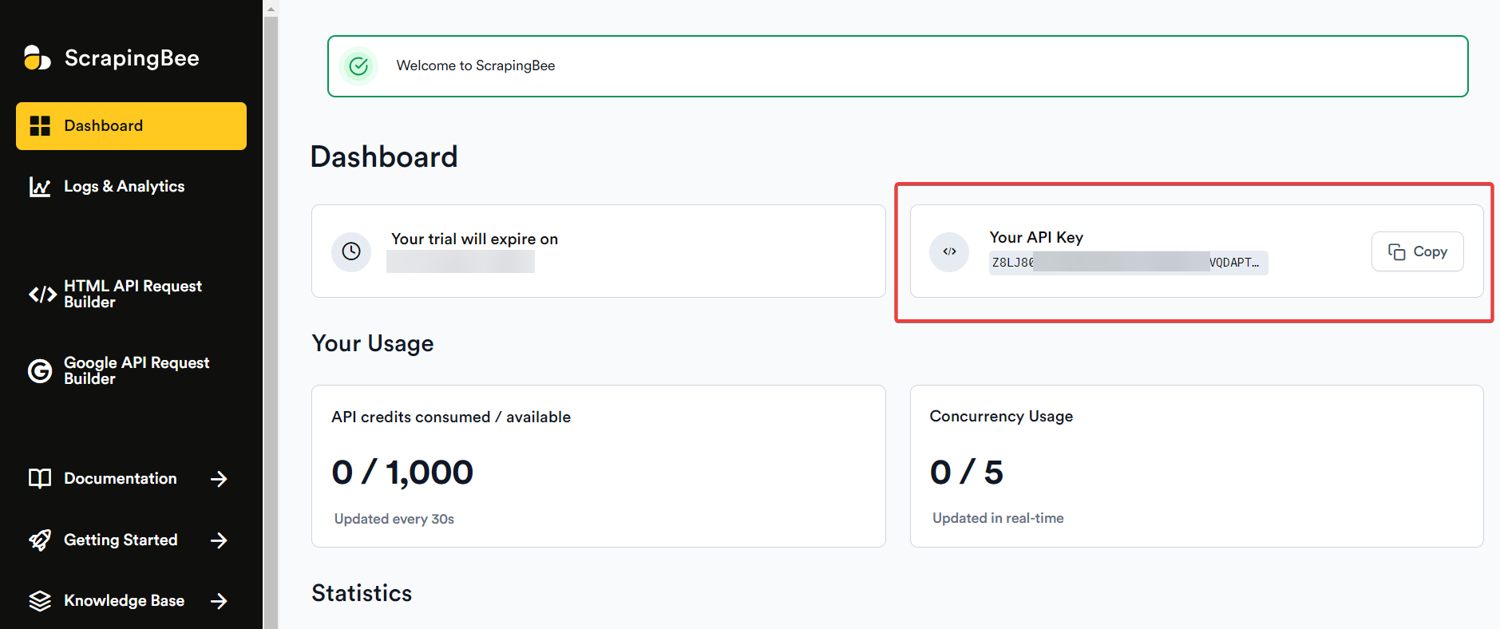

Ready to supercharge your web data collection?

- Try our API with 1000 free credits, no credit card required!

- Read our journey to processing millions of requests

- Check out our technical documentation

5 cURL Batch Processing Strategies: Multi-File Downloads (With a Bonus)

Remember playing Tetris and getting that satisfying feeling when all the pieces fit perfectly? That's what a well-executed batch download feels like!

At ScrapingBee, batch downloading is a crucial part of our infrastructure. Here's what we've learned from processing millions of files daily!

Strategy 1: Downloading Multiple Files

First, let's start with the foundation. Just like how we designed our web scraping API to handle concurrent requests, here's how to manage multiple downloads with cURL efficiently without breaking a sweat:

```bash
# From a file containing URLs (my most-used method)
while IFS= read -r url; do
  curl -O "$url"
done < urls.txt

# Multiple files from same domain (clean and simple)
# Downloads: file1.pdf, file2.pdf, ... file100.pdf
# (quoted so the shell doesn't treat [1-100] as a filename pattern)
curl -O "http://example.com/file[1-100].pdf"
```
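The bracket range is handy, but it puts all 100 files into a single curl command. If you'd rather push them through the urls.txt loop (so each file gets its own retries and log line), you can generate the list first. The helper make_url_list and its arguments are illustrative:

```bash
#!/bin/bash

# Hypothetical helper: print one URL per number in [start, end]
make_url_list() {
  local prefix="$1" start="$2" end="$3" suffix="$4"
  local n
  for ((n = start; n <= end; n++)); do
    echo "${prefix}${n}${suffix}"
  done
}

# Usage: write file1.pdf ... file100.pdf URLs to urls.txt
# make_url_list "http://example.com/file" 1 100 ".pdf" > urls.txt
```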

Pro Tip: I once had to download over 50,000 product images for an e-commerce client. The simple, naive approach failed miserably. Here's the approach that finally got the job done:

```bash
#!/bin/bash

BATCH_DOWNLOAD() {
  local url="$1"
  local retries=3
  local wait_time=2
  local i

  echo "🎯 Downloading: $url"

  for ((i=1; i<=retries; i++)); do
    # This loop does the retrying itself, so curl's --retry options aren't used
    if curl -sS --fail \
      --connect-timeout 10 \
      --max-time 300 \
      -O "$url"; then
      echo "✅ Success: $url"
      return 0
    else
      echo "⚠️ Attempt $i failed, waiting ${wait_time}s..."
      sleep $wait_time
      wait_time=$((wait_time * 2)) # Exponential backoff: 2s, 4s, 8s
    fi
  done

  echo "❌ Failed after $retries attempts: $url"
  return 1
}

# Usage Example
BATCH_DOWNLOAD "https://example.com/large-file.zip"

# Or in a loop:
while IFS= read -r url; do
  BATCH_DOWNLOAD "$url"
done < urls.txt
```

Strategy 2: Using File/URL List Processing

Here's my battle-tested approach for handling URL lists:

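```bash
#!/bin/bash
# Uses BATCH_DOWNLOAD from Strategy 1

PROCESS_URL_LIST() {
  trap 'echo "⚠️ Process interrupted"; exit 1' SIGINT SIGTERM

  local input_file="$1"
  local success_count=0
  local processed=0
  # Count only real URLs (comments and blank lines are skipped below too)
  local total_urls
  total_urls=$(grep -cv -e '^[[:space:]]*#' -e '^[[:space:]]*$' "$input_file")

  if [ "$total_urls" -eq 0 ]; then
    echo "⚠️ No URLs found in $input_file"
    return 1
  fi

  echo "🚀 Starting batch download of $total_urls files..."

  while IFS='' read -r url || [[ -n "$url" ]]; do
    if [[ "$url" =~ ^[[:space:]]*# ]] || [[ -z "${url//[[:space:]]/}" ]]; then
      continue # Skip comments and empty lines
    fi

    ((processed++))
    if BATCH_DOWNLOAD "$url"; then
      ((success_count++))
    fi
    printf "Progress: [%d/%d] (%d%%), %d succeeded\n" \
      "$processed" "$total_urls" \
      $((processed * 100 / total_urls)) "$success_count"
  done < "$input_file"

  echo "✨ Download complete! Success rate: $((success_count * 100 / total_urls))%"
}
```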
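Real URL lists also tend to carry duplicates, stray whitespace and Windows line endings, which waste requests or produce broken URLs. A small pre-processing step can normalise the list before you feed it in. This is a sketch built on standard tr, sed, grep and awk, and clean_url_list is a hypothetical helper:

```bash
#!/bin/bash

# Hypothetical helper: strip CRs and surrounding whitespace, drop blank
# lines and comments, and remove duplicates while keeping the first occurrence
clean_url_list() {
  tr -d '\r' < "$1" |
    sed -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' |
    grep -v -e '^#' -e '^$' |
    awk '!seen[$0]++'
}

# Usage: clean_url_list urls.txt > urls.clean.txt
```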

Pro Tip: When dealing with large-scale scraping, proper logging is crucial. Always keep track of failed downloads! Here's my logging addition:

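```bash
# Add to the script above: declare the array once, before the loop...
failed_urls=()

# ...and give the loop's success check an else branch that records failures
if BATCH_DOWNLOAD "$url"; then
  ((success_count++))
else
  failed_urls+=("$url")
  echo "$url" >> failed_downloads.txt
fi
```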

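Strategy 3: "Recursive" Downloads with URL Globbing

Whether you're scraping e-commerce sites like Amazon or downloading entire directories, it helps to know that curl has no recursive mode (that's wget -r territory; in curl, -r is short for --range). What curl does have is URL globbing, which handles most "grab the whole set" jobs:

```bash
# Download a known set of files in one go
curl -O 'http://example.com/files/{file1,file2,file3}.pdf'

# Advanced pattern matching: combine sets and ranges, and reuse what each
# pattern matched in the output name (#1 = first pattern, #2 = second)
curl 'http://example.com/{reports,invoices}/file[1-10].pdf' -o '#1_file#2.pdf'
```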
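When the file names aren't known in advance, a common workaround is to fetch the directory's index page, pull the links out of it, and feed them to one of the download loops in this guide. Here's a rough sketch, assuming the index is a simple HTML listing with plain href="..." attributes (real-world pages may need a proper HTML parser). The helper extract_links is hypothetical:

```bash
#!/bin/bash

# Hypothetical helper: read HTML on stdin and print absolute URLs of links
# ending in the given extension (relative links are joined to base_url)
extract_links() {
  local base_url="${1%/}" ext="$2"
  local origin
  origin=$(echo "$base_url" | sed -E 's#^(https?://[^/]+).*#\1#')
  grep -o 'href="[^"]*"' |
    sed -e 's/^href="//' -e 's/"$//' |
    grep -E "\\.${ext}\$" |
    while IFS= read -r link; do
      case "$link" in
        http://*|https://*) echo "$link" ;;    # already absolute
        /*) echo "${origin}${link}" ;;         # relative to the site root
        *) echo "${base_url}/${link}" ;;       # relative to the directory
      esac
    done
}

# Usage:
# curl -sS https://example.com/files/ | extract_links https://example.com/files pdf > urls.txt
```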

Strategy 4: Parallel Downloads and Processing

Remember trying to download multiple files one by one? Snooze fest! Sometimes, you might need to download files in parallel like a pro:

```bash
#!/bin/bash
# Needs bash 4.3+ for wait -n

PARALLEL_DOWNLOAD() {
  trap 'kill $(jobs -p) 2>/dev/null; exit 1' SIGINT SIGTERM

  local url_file="${1:-urls.txt}"
  local max_parallel=5 # Adjust based on your needs
  local active_downloads=0

  while IFS= read -r url; do
    [ -z "$url" ] && continue

    # Check if we've hit our parallel limit
    while [ $active_downloads -ge $max_parallel ]; do
      wait -n # Wait for any child process to finish
      ((active_downloads--))
    done

    # Start new download in background
    (
      if curl -sS --fail -O "$url"; then
        echo "✅ Success: $url"
      else
        echo "❌ Failed: $url"
        echo "$url" >> failed_urls.txt
      fi
    ) &
    ((active_downloads++))
    echo "🚀 Started download: $url (Active: $active_downloads)"
  done < "$url_file"

  # Wait for remaining downloads
  wait
}

# Usage Example
printf '%s\n' "https://example.com/file1.zip" "https://example.com/file2.zip" > urls.txt
PARALLEL_DOWNLOAD urls.txt
```

On one client project, this parallel approach cut a 4-hour download job to just 20 minutes! But be careful - here's my smart throttling addition:

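```bash
# Add dynamic throttling based on failure rate
SMART_PARALLEL_DOWNLOAD() {
  local fail_count=0
  local total_count=0
  local max_parallel=5

  # Call after each finished download. Update the counters in this shell
  # (for example from wait's exit status), not inside the background
  # subshells, or the changes won't be visible here.
  monitor_failures() {
    # Check every 10 downloads, once at least one has finished
    if [ $total_count -gt 0 ] && [ $((total_count % 10)) -eq 0 ]; then
      local failure_rate=$((fail_count * 100 / total_count))
      # Throttle down on >20% failures, but never below one download at a time
      if [ $failure_rate -gt 20 ] && [ $max_parallel -gt 1 ]; then
        ((max_parallel--))
        echo "⚠️ High failure rate detected! Reducing parallel downloads to $max_parallel"
      fi
    fi
  }

  # ... rest of parallel download logic
}
```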
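monitor_failures only ever lowers the limit, so one bad patch slows the rest of the batch for good. A common alternative, borrowed from TCP congestion control, is AIMD (additive increase, multiplicative decrease): halve the limit when failures spike, and add one back while things look healthy. Here's a sketch of that rule as a standalone function. The name next_parallel, the 20% threshold and the 1-10 bounds are illustrative choices:

```bash
#!/bin/bash

# Hypothetical helper: AIMD-style concurrency limit
# Usage: next_parallel <current> <failed> <total> [min] [max]
next_parallel() {
  local current="$1" failed="$2" total="$3"
  local min="${4:-1}" max="${5:-10}"
  local next="$current"
  if [ "$total" -gt 0 ]; then
    if [ $((failed * 100 / total)) -gt 20 ]; then
      next=$((current / 2)) # multiplicative decrease on >20% failures
    else
      next=$((current + 1)) # additive increase while things look healthy
    fi
  fi
  # Keep the result within [min, max]
  [ "$next" -lt "$min" ] && next="$min"
  [ "$next" -gt "$max" ] && next="$max"
  echo "$next"
}
```

You'd call it every ten downloads, for example max_parallel=$(next_parallel "$max_parallel" "$fail_count" "$total_count"), and reset the counters afterwards if you want each window judged on its own.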

Pro Tip: While parallel downloads with cURL are powerful, I've learned through years of web scraping that smart throttling is crucial for any kind of web automation. If you're looking to extract data from websites at scale, our web scraping API handles intelligent request throttling automatically, helping you gather web data reliably without getting blocked.

Strategy 5: Production-Grade Error Handling

Drawing from our in-depth experience building anti-blocking solutions when scraping, here's our robust error-handling system:

```bash
#!/bin/bash

DOWNLOAD_WITH_ERROR_HANDLING() {
  local url="$1"
  local retry_count=0
  local max_retries=3
  local backoff_time=5
  local temp_file
  temp_file=$(mktemp)

  # Remove the partial file if the script is interrupted mid-download
  trap 'rm -f "$temp_file"; exit 1' INT TERM

  while [ $retry_count -lt $max_retries ]; do
    # curl's own --retry runs inside each attempt, so this allows up to 12 tries
    if curl -sS \
      --fail \
      --connect-timeout 15 \
      --max-time 300 \
      --retry 3 \
      --retry-delay 5 \
      -o "$temp_file" \
      "$url"; then
      # Verify file integrity
      if [ -s "$temp_file" ]; then
        mv "$temp_file" "$(basename "$url")"
        echo "✅ Download successful: $url"
        trap - INT TERM
        return 0
      else
        echo "⚠️ Downloaded file is empty"
      fi
    fi

    retry_count=$((retry_count + 1))
    echo "🔄 Retry $retry_count/$max_retries for $url"
    sleep $((backoff_time * retry_count)) # Linear backoff: 5s, 10s, 15s
  done

  rm -f "$temp_file"
  trap - INT TERM
  return 1
}

# Usage Example
DOWNLOAD_WITH_ERROR_HANDLING "https://example.com/large-file.dat"
```

Pro Tip: Always implement these three levels of error checking:

- HTTP status codes

- File integrity

- Content validation
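For the content-validation level, one cheap check is to compare a file's first bytes with the signature its type promises. Here's a sketch, assuming od is available (it ships with both GNU coreutils and BSD). The helper check_magic is hypothetical and only knows three common signatures:

```bash
#!/bin/bash

# Hypothetical helper: succeed if the file starts with the signature for its type
check_magic() {
  local file="$1" type="$2" expected actual
  case "$type" in
    zip) expected="504b0304" ;; # "PK\x03\x04"
    png) expected="89504e47" ;; # "\x89PNG"
    pdf) expected="25504446" ;; # "%PDF"
    *) echo "Unknown type: $type" >&2; return 2 ;;
  esac
  # First 4 bytes as one continuous lowercase hex string
  actual=$(head -c 4 "$file" | od -An -tx1 | tr -d ' \n')
  [ "$actual" = "$expected" ]
}

# Usage: check_magic dataset.zip zip || echo "⚠️ Not a real ZIP file"
```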

Bonus: The Ultimate Batch Download Solution With cURL

Here's my masterpiece - a complete solution that combines all these strategies:

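```bash
#!/bin/bash
# Uses DOWNLOAD_WITH_ERROR_HANDLING from Strategy 5; needs bash 4.3+ (wait -n)

MASTER_BATCH_DOWNLOAD() {
  # No "set -e" here: wait -n returns a failed job's exit code, which would
  # abort the whole batch on the first failed download
  trap 'kill $(jobs -p) 2>/dev/null; echo "⚠️ Process interrupted"; exit 1' SIGINT SIGTERM

  local url_file="$1"
  local max_parallel=5
  local success_count fail_count total

  # Setup logging (from here on, this shell's output also goes to download.log)
  local log_dir="logs/$(date +%Y%m%d_%H%M%S)"
  mkdir -p "$log_dir"
  exec 1> >(tee -a "${log_dir}/download.log")
  exec 2>&1

  echo "🚀 Starting batch download at $(date)"

  # Process URLs in parallel with smart throttling. Reading the file directly
  # (instead of "cat file | while") keeps the background jobs in this shell,
  # so the final "wait" really waits for them.
  while IFS= read -r url || [[ -n "$url" ]]; do
    [[ -z "$url" || "$url" == \#* ]] && continue

    while [ "$(jobs -rp | wc -l)" -ge "$max_parallel" ]; do
      wait -n
    done

    (
      # Each job runs in a subshell and can't update the parent's counters,
      # so results are recorded in files and counted afterwards
      if DOWNLOAD_WITH_ERROR_HANDLING "$url"; then
        echo "$url" >> "${log_dir}/success.txt"
      else
        echo "$url" >> "${log_dir}/failed.txt"
      fi
      # Progress update
      echo "Progress: $(cat "${log_dir}/success.txt" "${log_dir}/failed.txt" 2>/dev/null | wc -l) files processed"
    ) &
  done < "$url_file"

  wait # Wait for all downloads to complete

  # Generate report from the result files
  success_count=$(( $(cat "${log_dir}/success.txt" 2>/dev/null | wc -l) ))
  fail_count=$(( $(cat "${log_dir}/failed.txt" 2>/dev/null | wc -l) ))
  total=$((success_count + fail_count))

  echo "
📊 Download Summary
==================
Total Files: $total
Successful: $success_count
Failed: $fail_count
Success Rate: $(( total > 0 ? success_count * 100 / total : 0 ))%
Log Location: $log_dir
==================
"
}

# Usage Example
printf '%s\n' "https://example.com/file1.zip" \
  "https://example.com/file2.zip" \
  "https://example.com/file3.zip" > batch_urls.txt

# Execute master download
MASTER_BATCH_DOWNLOAD "batch_urls.txt"
```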

Pro Tip: This approach partially mirrors how we handle large-scale data extraction with our API, consistently achieving impressive success rates even with millions of requests. The secret? Smart error handling and parallel processing!

Batch downloading isn't just about grabbing multiple files - it's about doing it reliably, efficiently, and with proper error handling.

Beyond Downloads: Managing Web Data Collection at Scale

While these cURL scripts work well for file downloads, collecting data from websites at scale brings additional challenges:

- IP rotation needs

- Anti-bot bypassing

- Bandwidth management

- Server-side restrictions

That's why we built our web scraping API to handle web data extraction automatically. Whether you're gathering product information, market data, or other web content, we've got you covered. Want to learn more? Try our API with 1000 free credits, or check out our advanced guides.

5 Must-Have cURL Download Scripts

Theory is great, but you know what's better? Battle-tested scripts that actually work in production! After years of handling massive download operations, I've compiled some of my most reliable scripts. Here you go!

Script 1: Image Batch Download

Ever needed to download thousands of images without losing your sanity? Here's my script that handles everything from retry logic to file type validation:

```bash
#!/bin/bash
# Needs bash 4+ (associative arrays) and the `file` utility

IMAGE_BATCH_DOWNLOAD() {
  local url_list="$1"
  local max_size="${2:-$((10*1024*1024))}" # Size limit in bytes (10MB by default)
  local output_dir="images/$(date +%Y%m%d)"
  local log_dir="logs/$(date +%Y%m%d)"

  # Setup directories
  mkdir -p "$output_dir" "$log_dir"

  # Initialize counters
  declare -A stats=([success]=0 [failed]=0 [invalid]=0 [oversized]=0)

  # On Ctrl+C, remove the temp file of the download in progress
  trap 'echo "🧹 Cleaning up temporary files..."; rm -f "$temp_file"; exit 1' INT TERM

  # Returns 0 for a valid image, 1 for a wrong type, 2 if it is too large
  validate_image() {
    local file="$1"
    local mime_type file_size
    mime_type=$(file -b --mime-type "$file")
    file_size=$(stat -c%s "$file" 2>/dev/null || stat -f%z "$file")

    # Check file size
    if [ "$file_size" -gt "$max_size" ]; then
      echo "⚠️ File too large: $file_size bytes (max: $max_size bytes)"
      return 2
    fi

    case "$mime_type" in
      image/jpeg|image/png|image/gif|image/webp) return 0 ;;
      *) return 1 ;;
    esac
  }

  download_image() {
    local url="$1"
    local filename temp_file
    filename=$(basename "${url%%\?*}") # Drop any query string from the name
    temp_file=$(mktemp)

    echo "🎯 Downloading: $url"

    if curl -sS --fail \
      --retry 3 \
      --retry-delay 2 \
      --max-time 30 \
      -o "$temp_file" \
      "$url"; then
      validate_image "$temp_file"
      case $? in
        0)
          mv "$temp_file" "$output_dir/$filename"
          ((stats[success]++))
          echo "✅ Success: $filename"
          return 0
          ;;
        2) ((stats[oversized]++)) ;;
        *) ((stats[invalid]++)); echo "⚠️ Invalid image type: $url" ;;
      esac
    else
      ((stats[failed]++))
      echo "❌ Download failed: $url"
    fi

    rm -f "$temp_file"
    return 1
  }

  # Download every URL in the list, skipping blank lines and comments
  while IFS= read -r url || [[ -n "$url" ]]; do
    [[ -z "$url" || "$url" == \#* ]] && continue
    download_image "$url"
  done < "$url_list"

  echo "📊 Done: ${stats[success]} saved, ${stats[failed]} failed, ${stats[invalid]} invalid, ${stats[oversized]} oversized" | tee -a "$log_dir/image_download.log"
}

# Usage Examples
# Create a file with image URLs
printf '%s\n' "https://example.com/image1.jpg" "https://example.com/image2.png" > url_list.txt

# Execute with default 10MB limit
IMAGE_BATCH_DOWNLOAD "url_list.txt"

# Or pass a different limit in bytes as the second argument (20MB here)
IMAGE_BATCH_DOWNLOAD "url_list.txt" $((20*1024*1024))
```

Perfect for data journalism. Need to scale up your image downloads? While this script works great, check out our guides on scraping e-commerce product data or downloading images with Python for other related solutions!
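Naming files with basename has a catch: two images called photo.jpg in different folders overwrite each other. One way around it (a sketch, not part of the script above) is to add a short hash of the full URL to the file name. The helper unique_name is hypothetical and assumes sha256sum or shasum is installed:

```bash
#!/bin/bash

# Hypothetical helper: "<name>_<8-char URL hash>.<ext>" so different URLs
# that share a file name don't overwrite each other
unique_name() {
  local url="$1"
  local name hash
  name=$(basename "${url%%\?*}") # drop the query string first
  hash=$(printf '%s' "$url" | { sha256sum 2>/dev/null || shasum -a 256; } | cut -c1-8)
  if [[ "$name" == *.* ]]; then
    echo "${name%.*}_${hash}.${name##*.}"
  else
    echo "${name}_${hash}"
  fi
}

# Usage inside download_image: filename=$(unique_name "$url")
```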

Pro Tip: While using cURL for image downloads works great, when you need to handle dynamic image loading or deal with anti-bot protection, consider using our Screenshot API. It's perfect for capturing images that require JavaScript rendering!

Script 2: Enterprise API Data Handler

New to APIs? Start with our API for Dummies guide - it'll get you up to speed fast! Now, here's my go-to script for handling authenticated API downloads with rate limiting and token refresh:

```bash
#!/bin/bash
# Needs jq. The API key is read from the API_KEY environment variable.

API_DATA_DOWNLOAD() {
  # Plain (non-local) assignments: these settings must outlive this function
  # so that download_data can use them afterwards
  api_base="https://api.example.com"
  token=""
  rate_limit=60 # requests per minute
  last_token_refresh=0
  log_file="api_downloads.log"
  max_retries=3

  log_message() {
    local timestamp=$(date '+%Y-%m-%d %H:%M:%S')
    echo "[$timestamp] $1" | tee -a "$log_file"
  }

  refresh_token() {
    local refresh_response
    refresh_response=$(curl -sS --fail \
      -X POST \
      -H "Content-Type: application/json" \
      --max-time 30 \
      -d "{\"key\": \"${API_KEY}\"}" \
      "${api_base}/auth")

    if [ $? -ne 0 ]; then
      log_message "❌ Token refresh failed: Network or HTTP error"
      return 1
    fi

    token=$(echo "$refresh_response" | jq -r '.token')

    if [ -z "$token" ] || [ "$token" = "null" ]; then
      log_message "❌ Token refresh failed: Invalid response"
      return 1
    fi

    last_token_refresh=$(date +%s)
    log_message "✅ Token refreshed successfully"
    return 0
  }

  calculate_backoff() {
    local retry_count=$1
    echo $((2 ** (retry_count - 1) * 5)) # Exponential backoff: 5s, 10s, 20s...
  }

  download_data() {
    local endpoint="$1"
    local output_file="$2"
    local retry_count=0
    local success=false

    # Refresh the token if it is older than one hour
    local current_time=$(date +%s)
    if [ $((current_time - last_token_refresh)) -gt 3600 ]; then
      log_message "🔄 Token expired, refreshing..."
      refresh_token || return 1
    fi

    # Rate limiting: space requests out evenly (whole seconds)
    sleep $(( 60 / rate_limit ))

    while [ $retry_count -lt $max_retries ] && [ "$success" = false ]; do
      local response
      response=$(curl -sS --fail \
        -H "Authorization: Bearer $token" \
        -H "Accept: application/json" \
        --max-time 30 \
        "${api_base}${endpoint}")

      # jq -r prints the bare type name (without -r it would print "object" in quotes)
      if [ $? -eq 0 ] && [ "$(echo "$response" | jq -r 'type' 2>/dev/null)" = "object" ]; then
        echo "$response" > "$output_file"
        log_message "✅ Successfully downloaded data to $output_file"
        success=true
      else
        retry_count=$((retry_count + 1))
        if [ $retry_count -lt $max_retries ]; then
          local backoff_time=$(calculate_backoff $retry_count)
          log_message "⚠️ Attempt $retry_count failed. Retrying in ${backoff_time}s..."
          sleep $backoff_time
        else
          log_message "❌ Download failed after $max_retries attempts"
          return 1
        fi
      fi
    done

    return 0
  }
}

# Usage Examples
# Initialize settings and helper functions
export API_KEY="your-api-key"
API_DATA_DOWNLOAD

# Download data from specific endpoint
download_data "/v1/users" "users_data.json"

# Download with custom rate limit
rate_limit=30 download_data "/v1/transactions" "transactions.json"
```

This script implements REST API best practices and similar principles we use in our web scraping API for handling authentication and rate limits.
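Many rate-limited APIs answer HTTP 429 with a Retry-After header that says how long to wait, and honouring it beats guessing with a fixed backoff. Here's a sketch that reads the header from a file saved with curl's -D (dump headers) option. The helper retry_after_seconds is hypothetical and only handles the seconds form of the header, not the HTTP-date form:

```bash
#!/bin/bash

# Hypothetical helper: print the Retry-After value (in seconds) from a header
# dump, or the fallback if the header is missing or not a plain number
retry_after_seconds() {
  local header_file="$1" fallback="${2:-5}" value
  value=$(grep -i '^retry-after:' "$header_file" | tail -n1 | cut -d: -f2 | tr -d ' \t\r')
  if [[ "$value" =~ ^[0-9]+$ ]]; then
    echo "$value"
  else
    echo "$fallback"
  fi
}

# Usage inside a retry loop:
# curl -sS -D headers.txt -o data.json "$url"
# sleep "$(retry_after_seconds headers.txt 5)"
```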

Script 3: Reliable FTP Download and Operations

Working with FTP might feel old school, but it's still crucial for many enterprises. Here's my bulletproof FTP download script that's saved countless legacy migrations:

```bash
#!/bin/bash

FTP_BATCH_DOWNLOAD() {
  local host="$1"
  local user="$2"
  local pass="$3"
  local remote_dir="$4"
  local local_dir="downloads/ftp/$(date +%Y%m%d)"
  local log_file="$local_dir/ftp_transfer.log"
  local netrc_file listing name rc
  local status=0

  log_message() {
    local timestamp=$(date '+%Y-%m-%d %H:%M:%S')
    echo "[$timestamp] $1" | tee -a "$log_file"
  }

  # Securely delete the temporary credentials file
  cleanup() {
    if [ -f "$netrc_file" ]; then
      shred -u "$netrc_file" 2>/dev/null || rm -P "$netrc_file" 2>/dev/null || rm -f "$netrc_file"
    fi
    log_message "🧹 Cleanup completed"
  }

  validate_downloads() {
    local failed_files=0
    log_message "🔍 Validating downloaded files..."
    while IFS= read -r file; do
      if [ ! -s "$file" ]; then
        log_message "⚠️ Empty or invalid file: $file"
        ((failed_files++))
      fi
    done < <(find "$local_dir" -type f -not -name "*.log")
    return $failed_files
  }

  # Create directories
  mkdir -p "$local_dir"

  # Create secure temporary .netrc file (readable by you only)
  netrc_file=$(mktemp)
  chmod 600 "$netrc_file"
  echo "machine $host login $user password $pass" > "$netrc_file"

  # Remove the credentials even if the transfer is interrupted
  trap 'cleanup; exit 1' INT TERM

  log_message "🚀 Starting FTP download from $host..."
  log_message "📁 Remote directory: $remote_dir"

  # curl doesn't expand "*" in FTP URLs, so list the directory first...
  if ! listing=$(curl -sS --netrc-file "$netrc_file" --list-only "ftp://$host/$remote_dir/"); then
    log_message "❌ Could not list remote directory"
    status=1
  fi

  # ...then download each entry (assumes the directory holds plain files)
  while IFS= read -r name; do
    name="${name%$'\r'}" # Some servers end listing lines with CRLF
    [ -z "$name" ] && continue
    curl --retry 3 \
      --retry-delay 10 \
      --retry-all-errors \
      --create-dirs \
      --connect-timeout 30 \
      --max-time 3600 \
      -C - \
      --netrc-file "$netrc_file" \
      --stderr - \
      --progress-bar \
      --output "$local_dir/$name" \
      "ftp://$host/$remote_dir/$name" | tee -a "$log_file"
    rc=${PIPESTATUS[0]} # curl's exit code ($? would be tee's)
    [ "$rc" -eq 0 ] || status=$rc
  done <<< "$listing"

  if [ $status -eq 0 ]; then
    # Validate downloads
    validate_downloads
    local validation_status=$?
    if [ $validation_status -eq 0 ]; then
      log_message "✅ FTP download completed successfully!"
    else
      log_message "⚠️ Download completed but $validation_status files failed validation"
      status=1
    fi
  else
    log_message "❌ FTP download failed with status: $status"
  fi

  cleanup
  trap - INT TERM
  return $status
}

# Usage Example
FTP_BATCH_DOWNLOAD "ftp.example.com" "username" "password" "remote/directory"

# With error handling
if FTP_BATCH_DOWNLOAD "ftp.example.com" "username" "password" "remote/directory"; then
  echo "Transfer successful"
else
  echo "Transfer failed"
fi
```

Pro Tip: I recently helped a client migrate 5 years of legacy FTP data using similar principles from this script. The secret? Proper resume handling and secure credential management, as we have seen.

Script 4: Large File Download Manager

Ever tried downloading a massive file only to have it fail at 99%? Or maybe you've watched that progress bar crawl for hours, praying your connection doesn't hiccup? This script is your new best friend - it handles those gigantic downloads that make regular scripts run away screaming:

```bash
#!/bin/bash

LARGE_FILE_DOWNLOAD() {
  local url="$1"
  local filename="$2"
  local min_speed=1000 # 1KB/s minimum
  local timeout=300 # abort a chunk that stays below min_speed for 5 minutes
  local chunk_size=$((10 * 1024 * 1024)) # 10MB per chunk
  local max_parallel=5

  echo "🎯 Starting large file download: $filename"

  # Create temporary directory for chunks
  local temp_dir=$(mktemp -d)
  local final_file="downloads/large/$filename"
  mkdir -p "$(dirname "$final_file")"

  download_chunk() {
    local index=$1 start=$2 end=$3
    # Zero-padded names keep the chunks in order when they are combined
    local chunk_file="$temp_dir/chunk_$(printf '%06d' "$index")"
    curl -sS --fail -L \
      --range "$start-$end" \
      --retry 3 \
      --retry-delay 5 \
      --speed-limit $min_speed \
      --speed-time $timeout \
      -o "$chunk_file" \
      "$url"
  }

  # Get file size (last Content-Length, in case of redirects)
  local size
  size=$(curl -sIL "$url" | grep -i '^content-length:' | tail -n1 | awk '{print $2}' | tr -d '\r')
  if ! [[ "$size" =~ ^[0-9]+$ ]] || [ "$size" -eq 0 ]; then
    echo "❌ Server did not report a file size; chunked download isn't possible"
    rm -rf "$temp_dir"
    return 1
  fi

  local chunks=$(( (size + chunk_size - 1) / chunk_size ))
  echo "📦 File size: $(( size / 1024 / 1024 ))MB, Split into $chunks chunks"

  # Download chunks in parallel, max_parallel at a time
  local -a pids=()
  local failed=0 i start end pid
  for ((i=0; i<chunks; i++)); do
    start=$((i * chunk_size))
    end=$(( (i + 1) * chunk_size - 1 ))
    if [ $end -ge $size ]; then
      end=$((size - 1))
    fi

    download_chunk "$i" "$start" "$end" &
    pids+=($!)

    # When the batch is full (or this is the last chunk), wait and check results
    if [ ${#pids[@]} -ge $max_parallel ] || [ $i -eq $((chunks - 1)) ]; then
      for pid in "${pids[@]}"; do
        wait "$pid" || failed=1
      done
      pids=()
    fi
  done

  if [ $failed -ne 0 ]; then
    echo "❌ One or more chunks failed to download"
    rm -rf "$temp_dir"
    return 1
  fi

  # Combine chunks
  echo "🔄 Combining chunks..."
  cat "$temp_dir"/chunk_* > "$final_file"
  rm -rf "$temp_dir"

  # Verify file size
  local downloaded_size
  downloaded_size=$(stat --format=%s "$final_file" 2>/dev/null || stat -f %z "$final_file")
  if [ "$downloaded_size" -eq "$size" ]; then
    echo "✅ Download complete and verified: $filename"
    return 0
  else
    echo "❌ Size mismatch! Expected: $size, Got: $downloaded_size"
    return 1
  fi
}

# Usage Example
LARGE_FILE_DOWNLOAD "https://example.com/huge.zip" "backup.zip"
```

Here's why it's special: it splits large files into manageable chunks, downloads them in parallel, and even verifies the final file - all while handling network hiccups like a champ! It does rely on the server reporting a Content-Length and supporting HTTP range requests (look for Accept-Ranges: bytes in the response headers). Perfect for handling substantial datasets - and hey, if you're processing them in spreadsheets, we've got guides for both Excel lovers and Google Sheets fans!

Pro Tip: When downloading large files, always implement these three features:

- Chunk-based downloading (allows for better resume capabilities)

- Size verification (prevents corruption)

- Minimum speed requirements (detects stalled downloads)
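The byte-range arithmetic is where chunked downloads most often go wrong: HTTP ranges are inclusive, so a 10-byte chunk starting at 0 is 0-9, and the last chunk has to stop at size - 1. Here's a standalone sketch of that calculation, which prints one range per line in the form curl --range expects. The helper chunk_ranges is hypothetical:

```bash
#!/bin/bash

# Hypothetical helper: print inclusive "start-end" byte ranges covering
# a file of <size> bytes in chunks of <chunk_size> bytes
chunk_ranges() {
  local size="$1" chunk_size="$2" start end
  for ((start = 0; start < size; start += chunk_size)); do
    end=$((start + chunk_size - 1))
    if [ "$end" -ge "$size" ]; then
      end=$((size - 1)) # the last chunk stops at the final byte
    fi
    echo "${start}-${end}"
  done
}

# Example: a 25-byte file in 10-byte chunks gives 0-9, 10-19, 20-24
chunk_ranges 25 10
```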

Script 5: Progress Monitoring and Reporting

When downloading large files, flying blind isn't an option. I learned this the hard way during a massive dataset download that took hours - with no way to know if it was still working! Here's my battle-tested progress monitoring solution that I use in production:

```bash
#!/bin/bash

monitor_progress() {
  local file="$1"
  local total_size="$2"
  local timeout="${3:-3600}" # Default 1 hour timeout
  local start_time=$(date +%s)
  local last_size=0
  local last_check_time=$start_time
  local speed=0
  local eta=0

  # Function to format sizes (uses bc for the decimals)
  format_size() {
    local size=$1
    if [ "$size" -ge $((1024*1024*1024)) ]; then
      printf "%.1fGB" "$(echo "scale=1; $size/1024/1024/1024" | bc)"
    elif [ "$size" -ge $((1024*1024)) ]; then
      printf "%.1fMB" "$(echo "scale=1; $size/1024/1024" | bc)"
    else
      printf "%.1fKB" "$(echo "scale=1; $size/1024" | bc)"
    fi
  }

  # Function to draw progress bar
  draw_progress_bar() {
    local percentage=$1
    local width=50
    local completed=$((percentage * width / 100))
    local remaining=$((width - completed))
    printf "["
    printf "%${completed}s" "" | tr " " "="
    printf ">"
    printf "%${remaining}s" ""
    printf "] "
  }

  # Check if file exists
  if [ ! -f "$file" ]; then
    echo "❌ Error: File '$file' not found!"
    return 1
  fi

  # Check if total size is valid
  if ! [[ "$total_size" =~ ^[0-9]+$ ]] || [ "$total_size" -le 0 ]; then
    echo "❌ Error: Invalid total size!"
    return 1
  fi

  echo "🚀 Starting progress monitoring..."

  # Loop to continuously check the file size
  while true; do
    # Get the current file size
    local current_size=$(stat --format=%s "$file" 2>/dev/null || stat -f %z "$file" 2>/dev/null || echo 0)
    local current_time=$(date +%s)
    local elapsed=$((current_time - start_time))

    # Check timeout
    if [ $elapsed -gt $timeout ]; then
      echo -e "\n⚠️ Monitoring timed out after $((timeout/60)) minutes"
      return 1
    fi

    # Calculate speed and ETA (no ETA while the transfer is stalled)
    local time_diff=$((current_time - last_check_time))
    local size_diff=$((current_size - last_size))
    if [ $time_diff -gt 0 ]; then
      speed=$((size_diff / time_diff))
      if [ $speed -gt 0 ]; then
        eta=$(( (total_size - current_size) / speed ))
      else
        eta=0
      fi
    fi

    # Calculate percentage (capped at 100)
    local percentage=$(( current_size * 100 / total_size ))
    [ $percentage -gt 100 ] && percentage=100

    # Clear line and show progress
    echo -ne "\r\033[K"
    draw_progress_bar $percentage
    printf "%3d%% " $percentage
    printf "%s/%s " "$(format_size $current_size)" "$(format_size $total_size)"
    if [ $speed -gt 0 ]; then
      printf "@ %s/s " "$(format_size $speed)"
      if [ $eta -gt 0 ]; then
        printf "ETA: %02d:%02d " $((eta/60)) $((eta%60))
      fi
    fi

    # Check if download is complete
    if [ "$current_size" -ge "$total_size" ]; then
      echo -e "\n✅ Download complete! Total time: $((elapsed/60))m $((elapsed%60))s"
      return 0
    fi

    # Update last check values
    last_size=$current_size
    last_check_time=$current_time

    # Wait before next check
    sleep 1
  done
}

# Usage Examples
# Basic usage (monitoring a 100MB download)
monitor_progress "downloading_file.zip" 104857600

# With custom timeout (2 hours)
monitor_progress "large_file.iso" 1073741824 7200

# Integration with curl: get the size first, then download quietly in the background
url="https://example.com/file.zip"
size=$(curl -sIL "$url" | grep -i '^content-length:' | tail -n1 | awk '{print $2}' | tr -d '\r')
curl -sL -o "download.zip" "$url" &
sleep 1 # Give curl a moment to create the file
monitor_progress "download.zip" "$size"
wait
```
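The ETA in monitor_progress is printed as minutes:seconds, so a three-hour wait shows up as 180:00. If your downloads run that long, a small formatter can switch to hours. The helper format_eta is hypothetical:

```bash
#!/bin/bash

# Hypothetical helper: format seconds as MM:SS, or HH:MM:SS from one hour up
format_eta() {
  local total="$1"
  local h=$((total / 3600))
  local m=$(( (total % 3600) / 60 ))
  local s=$((total % 60))
  if [ "$h" -gt 0 ]; then
    printf "%02d:%02d:%02d\n" "$h" "$m" "$s"
  else
    printf "%02d:%02d\n" "$m" "$s"
  fi
}
```

To use it, replace the ETA printf inside the loop with printf "ETA: %s " "$(format_eta $eta)".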

Pro Tip: When handling large-scale downloads, consider implementing the same proxy rotation principles we discuss in our guide about what to do if your IP gets banned.

These scripts aren't just theory - they're battle-tested solutions that have handled terabytes of data for us at ScrapingBee.

Beyond These Scripts: Enterprise-Scale Web Data Collection

While these scripts are great for file downloads, enterprise-scale web data collection often needs more specialized solutions. At ScrapingBee, we've built our web scraping API to handle complex data extraction scenarios automatically:

- Smart Rate Limiting: Intelligent request management with automatic proxy rotation

- Data Validation: Advanced parsing and extraction capabilities

- Error Handling: Enterprise-grade retry logic and status reporting

- Scalability: Processes millions of data extraction requests daily

- AI-Powered Web Scraping: Easily extract data from webpages without using selectors, lowering scraper maintenance costs

| Feature | Basic cURL | Our Web Scraping API |
|---|---|---|
| Proxy Rotation | Manual | Automatic |
| JS Rendering | Not Available | Built-in |
| Anti-Bot Bypass | Limited | Advanced |
| HTML Parsing | Basic | Comprehensive |

Ready to level up your web data collection?

- Start with 1000 free API calls - no credit card needed!

- Check out our comprehensive documentation

- Learn from our JavaScript scenario features for complex automation

3 cURL Best Practices and Production-Grade Download Optimization (With a Bonus)

After spending a lot of time optimizing downloads, I've learned that the difference between a good download script and a great one often comes down to these battle-tested practices.

Tip 1: Performance Optimization Techniques

Just as we've optimized our web scraping API for speed, here's how to turbocharge your downloads with cURL:

```bash
# 1. Enable compression (a big win for text such as HTML, JSON or CSV;
#    already-compressed files like .zip won't get any smaller)
curl --compressed -O https://example.com/data.csv

# 2. Reuse connections: give one curl command several URLs and it keeps
#    the connection to the host open between them
curl -O https://example.com/file1.zip -O https://example.com/file2.zip

# 3. Skip the DNS lookup by pinning the host to a known IP
curl --resolve example.com:443:1.2.3.4 -O https://example.com/file.zip
```

| Optimization | Typical Impact (varies by workload) | Implementation |
|---|---|---|
| Compression | 40-60% smaller downloads for text content | --compressed flag |
| Connection reuse | 30% faster multiple downloads | Several URLs in one curl command |
| DNS caching | 10-15% speed improvement | --dns-cache-timeout or --resolve |
| Parallel downloads | Up to 5x faster | xargs -P technique |

Here's my production-ready performance optimization wrapper:

```bash
#!/bin/bash

OPTIMIZED_DOWNLOAD() {
  local url="$1"
  local output="$2"

  # Pre-resolve DNS (keep only an IPv4 address; dig may print CNAMEs first)
  local domain ip
  domain=$(echo "$url" | awk -F'[/:]' '{print $4}')
  ip=$(dig +short "$domain" 2>/dev/null | grep -E '^[0-9.]+$' | head -n1)

  # Performance optimization flags (--keepalive-time sets TCP keepalive probes)
  local opts=(
    --compressed
    --keepalive-time 60
    --connect-timeout 10
    --max-time 300
    --retry 3
    --retry-delay 5
  )

  # Only pin the address for HTTPS URLs, and only if the lookup worked
  if [ -n "$ip" ] && [[ "$url" == https://* ]]; then
    opts+=(--resolve "${domain}:443:${ip}")
  fi

  echo "🚀 Downloading with optimizations..."
  curl "${opts[@]}" -o "$output" "$url"
}

# Make the function available to the bash processes that xargs starts
export -f OPTIMIZED_DOWNLOAD

# Usage Examples
# Basic optimized download
OPTIMIZED_DOWNLOAD "https://example.com/large-file.zip" "downloaded-file.zip"

# Multiple files (using xargs for parallel downloads)
xargs -P 4 -I {} bash -c 'OPTIMIZED_DOWNLOAD "$1" "$(basename "$1")"' _ {} < url-list.txt

# With custom naming
OPTIMIZED_DOWNLOAD "https://example.com/data.zip" "backup-$(date +%Y%m%d).zip"
```

Pro Tip: These optimizations mirror the techniques used by some headless browser solutions, resulting in significantly faster downloads!

Tip 2: Advanced Error Handling

Drawing from my experience with complex Puppeteer Stealth operations, here's my comprehensive error-handling strategy that's caught countless edge cases:

```bash
#!/bin/bash

ROBUST_DOWNLOAD() {
  local url="$1"
  local expected_size="$2" # Optional: exact size in bytes
  local retries=3
  local timeout=30
  local success=false
  local temp_file err_file i
  temp_file=$(mktemp)
  err_file=$(mktemp)
  local output_file=$(basename "$url")

  cleanup() {
    rm -f "$temp_file" "$err_file"
    [ "$success" = true ] || echo "❌ Download failed for $url"
  }

  # Clean up if the script is interrupted
  trap 'cleanup; exit 1' INT TERM

  verify_download() {
    local file="$1"
    local expected_size="$2"

    # Basic existence check
    [ -f "$file" ] || return 1

    # Size verification (must be larger than 0 bytes)
    [ -s "$file" ] || return 1

    # If expected size is provided, verify it matches
    if [ -n "$expected_size" ]; then
      local actual_size=$(stat --format=%s "$file" 2>/dev/null || stat -f %z "$file" 2>/dev/null)
      [ "$actual_size" = "$expected_size" ] || return 1
    fi

    # Basic content verification: the first 512 bytes must not all be NUL bytes
    [ -n "$(head -c 512 "$file" | tr -d '\0')" ] || return 1

    return 0
  }

  # Main download logic with comprehensive error handling
  for ((i=1; i<=retries; i++)); do
    echo "🔄 Attempt $i of $retries"

    if curl -sS \
      --fail \
      --location \
      --connect-timeout "$timeout" \
      -o "$temp_file" \
      "$url" 2>"$err_file"; then
      if verify_download "$temp_file" "$expected_size"; then
        mv "$temp_file" "$output_file"
        success=true
        echo "✅ Download successful! Saved as $output_file"
        break
      else
        echo "⚠️ File verification failed, retrying..."
        sleep $((2 ** i)) # Exponential backoff
      fi
    else
      echo "⚠️ Error on attempt $i:"
      cat "$err_file"
      sleep $((2 ** i))
    fi
  done

  cleanup
  trap - INT TERM
  [ "$success" = true ]
}

# Usage Examples
# Basic download with error handling
ROBUST_DOWNLOAD "https://example.com/file.zip"

# Integration with size verification
expected_size=$(curl -sIL "https://example.com/file.zip" | grep -i '^content-length:' | tail -n1 | awk '{print $2}' | tr -d '\r')
ROBUST_DOWNLOAD "https://example.com/file.zip" "$expected_size"

# Multiple files with error handling
while IFS= read -r url; do
  ROBUST_DOWNLOAD "$url"
  sleep 2 # Polite delay between downloads
done < urls.txt
```

Why it works: Combines temporary file handling, robust verification, and progressive retries to prevent corrupted downloads.

Pro Tip: Never trust a download just because cURL completed successfully. I once had a "successful" download that was actually a 404 page in disguise! My friend, always verify your downloads!
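curl's --fail flag already catches real 404s, because the server sends an error status code. The sneaky case is a "soft 404": an HTML error page served with 200 OK. A quick heuristic is to check whether a file that should be binary starts like an HTML document. The helper looks_like_html is hypothetical and only inspects the first 1KB:

```bash
#!/bin/bash

# Hypothetical helper: succeed if the first 1KB of the file looks like an HTML page
looks_like_html() {
  head -c 1024 "$1" | tr -d '\0' | grep -qiE '<!doctype html|<html'
}

# Usage after a download:
# if looks_like_html "$temp_file"; then echo "⚠️ Got an HTML page instead of a file"; fi
```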

Tip 3: Security Best Practices

Security isn't just a buzzword - it's about protecting your downloads and your systems. Here's my production-ready security checklist and implementation:

```bash
#!/bin/bash

SECURE_DOWNLOAD() {
  local url="$1"
  local output="$2"
  local expected_hash="$3"

  # Security configuration: HTTPS only, TLS 1.2 or newer, and HTTP errors
  # treated as failures. Add --cert-status if you also want to require
  # OCSP stapling (many servers don't provide it).
  local security_opts=(
    --proto '=https'
    --tlsv1.2
    --ssl-reqd
    --fail
  )

  # Hash verification function
  verify_checksum() {
    local file="$1"
    local expected_hash="$2"

    if [ -z "$expected_hash" ]; then
      echo "⚠️ No checksum provided. Skipping verification."
      return 0
    fi

    local actual_hash
    # sha256sum on Linux, shasum -a 256 on macOS
    actual_hash=$( (sha256sum "$file" 2>/dev/null || shasum -a 256 "$file") | cut -d' ' -f1)

    if [[ "$actual_hash" == "$expected_hash" ]]; then
      return 0
    else
      echo "❌ Checksum verification failed!"
      return 1
    fi
  }

  echo "🔒 Initiating secure download..."

  # Create secure temporary directory
  local temp_dir
  temp_dir=$(mktemp -d)
  chmod 700 "$temp_dir"

  # Set temporary filename
  local temp_file="${temp_dir}/$(basename "$output")"

  # Execute secure download
  if curl "${security_opts[@]}" \
    -o "$temp_file" \
    "$url"; then
    # Verify file integrity
    if verify_checksum "$temp_file" "$expected_hash"; then
      mv "$temp_file" "$output"
      echo "✅ Secure download complete! Saved as $output"
    else
      echo "❌ Integrity check failed. File removed."
      rm -rf "$temp_dir"
      return 1
    fi
  else
    echo "❌ Download failed for $url"
    rm -rf "$temp_dir"
    return 1
  fi

  # Cleanup
  rm -rf "$temp_dir"
}

# Usage Example
SECURE_DOWNLOAD "https://example.com/file.zip" "downloaded_file.zip" "expected_sha256_hash"
```

Why it works: Provides end-to-end security through parameterized hash verification, proper error handling, and secure cleanup procedures - ensuring both download integrity and system safety.
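If you'd rather not compare hash strings by hand, GNU `sha256sum` has a check mode that does the comparison for you. A minimal sketch, assuming GNU coreutils and a hypothetical `verify_sha256` helper; it could stand in for the string comparison inside `verify_checksum`:

```shell
#!/bin/bash
# Hypothetical helper: verify FILE against an expected SHA-256 hex digest
# using sha256sum's own check mode. Assumes GNU coreutils
# (on macOS, `shasum -a 256 -c` plays the same role).
verify_sha256() {
    local file="$1"
    local expected="$2"
    [ -f "$file" ] && [ -n "$expected" ] || return 1
    # Check mode reads lines of the form "<hash>  <filename>" (two spaces);
    # "-" tells sha256sum to read that line from stdin, --status silences output
    printf '%s  %s\n' "$expected" "$file" | sha256sum -c --status -
}

# Usage:
#   verify_sha256 downloaded_file.zip "$expected_hash" || echo "❌ Checksum mismatch"
```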

Pro Tip: This security wrapper once prevented a potential security breach for a client when a compromised server tried serving malicious content. The checksum verification caught it immediately!

These security measures are similar to what we use in our proxy infrastructure to protect against malicious responses.

Bonus: Learn from My Mistakes Using cURL

Here are the top mistakes I've seen (and made!) while building robust download automation systems.

Memory Management Gone Wrong

# DON'T do this: no --fail, no size cap, and the shell truncates file.zip even if the request fails

curl https://example.com/huge-file.zip > file.zip

# DO this instead

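SMART_MEMORY_DOWNLOAD() {
    local url="$1"

    if [[ -z "$url" ]]; then
        echo "Error: URL required" >&2
        return 1
    fi

    # curl writes the response to disk in small chunks as it arrives (libcurl's
    # default receive buffer is 16 KB), so memory use stays flat even for huge
    # files. The caps below bound bandwidth and disk usage instead.
    curl --limit-rate 50M \
        --max-filesize 10G \
        --fail \
        --silent \
        --show-error \
        -O "$url"
}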

# Usage

SMART_MEMORY_DOWNLOAD "https://example.com/large-dataset.zip"

Pro Tip: While working on a Python web scraping project with BeautifulSoup that needed to handle large datasets, I discovered these memory optimization techniques. Later, when exploring undetected_chromedriver for a Python project, I found these same principles crucial for managing browser memory during large-scale automation tasks.
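Because curl streams the response body to its output as data arrives, you can also compute a checksum in the same pass instead of re-reading a multi-gigabyte file afterwards. A sketch using `tee`, assuming bash and GNU coreutils; `stream_and_hash` is a hypothetical name, not an existing tool:

```shell
#!/bin/bash
# Hypothetical helper: stream URL straight to OUTPUT while computing its
# SHA-256 in the same pass, so the file is never re-read or held in memory.
# Assumes bash and GNU coreutils (tee, sha256sum, cut).
stream_and_hash() {
    local url="$1"
    local output="$2"
    local digest
    # pipefail (scoped to this command substitution) makes a curl failure fail
    # the whole pipeline instead of being masked by sha256sum's success
    digest=$(set -o pipefail
        curl --fail --silent --show-error --location "$url" \
            | tee "$output" \
            | sha256sum \
            | cut -d' ' -f1) || {
        rm -f "$output"   # don't leave a partial file behind
        return 1
    }
    echo "$digest"
}

# Usage:
#   sum=$(stream_and_hash "https://example.com/large-dataset.zip" large-dataset.zip) \
#       && echo "sha256: $sum"
```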

Certificate Handling

# DON'T disable SSL verification

curl -k https://example.com/file

# DO handle certificates properly

CERT_AWARE_DOWNLOAD() {
    local url="$1"
    local ca_path="/etc/ssl/certs"
    # Debian/Ubuntu bundle location; other distributions use different paths
    local ca_bundle="$ca_path/ca-certificates.crt"

    if [[ ! -f "$ca_bundle" ]]; then
        echo "Error: CA bundle not found at $ca_bundle" >&2
        return 1
    fi

    curl --cacert "$ca_bundle" \
        --capath "$ca_path" \
        --ssl-reqd \
        --fail \
        -O "$url"
}

# Usage

CERT_AWARE_DOWNLOAD "https://api.example.com/secure-file.zip"
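The `ca-certificates.crt` path is where Debian and Ubuntu keep the system bundle; other platforms put it elsewhere. A sketch that probes a few common locations, assuming the hypothetical helper name `find_ca_bundle` and a candidate list that is illustrative rather than exhaustive:

```shell
#!/bin/bash
# Hypothetical helper: print the first CA bundle that exists, or return 1.
# Pass candidate paths as arguments, or rely on the defaults below, which are
# common locations rather than an exhaustive list:
#   $SSL_CERT_FILE                         (if set)
#   /etc/ssl/certs/ca-certificates.crt     Debian, Ubuntu, Alpine
#   /etc/pki/tls/certs/ca-bundle.crt       RHEL, Fedora, CentOS
#   /etc/ssl/cert.pem                      macOS and some BSDs
find_ca_bundle() {
    local candidates=("$@")
    if [ "${#candidates[@]}" -eq 0 ]; then
        candidates=(
            "${SSL_CERT_FILE:-}"
            /etc/ssl/certs/ca-certificates.crt
            /etc/pki/tls/certs/ca-bundle.crt
            /etc/ssl/cert.pem
        )
    fi
    local path
    for path in "${candidates[@]}"; do
        if [ -n "$path" ] && [ -f "$path" ]; then
            echo "$path"
            return 0
        fi
    done
    return 1
}

# Usage:
#   bundle=$(find_ca_bundle) && curl --cacert "$bundle" --fail -O "$url"
```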

Resource Cleanup

# Proper resource management

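CLEAN_DOWNLOAD() (
    # Subshell body ( ... ): the EXIT trap fires when this function finishes,
    # while temp_dir and temp_files are still in scope
    local url="$1"
    local output="${2:-$(basename "$url")}"
    local temp_dir
    temp_dir=$(mktemp -d) || exit 1
    local temp_files=()

    cleanup() {
        local exit_code=$?
        echo "Cleaning up temporary files..."
        for file in "${temp_files[@]}"; do
            rm -f "$file"
        done
        rmdir "$temp_dir" 2>/dev/null
        exit $exit_code
    }

    trap cleanup EXIT INT TERM

    # Register the temp file before downloading so an interrupt still removes it
    temp_files+=("$temp_dir/download")

    # Your download logic here
    curl --fail "$url" -o "$temp_dir/download" &&
        mv "$temp_dir/download" "$output"
)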

# Usage

CLEAN_DOWNLOAD "https://example.com/file.tar.gz"

Pro Tip: While working on large-scale web crawling projects with Python, proper resource cleanup became crucial. This became even more evident when implementing asynchronous scraping patterns in Python where memory leaks can compound quickly.
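The same cleanup idea can be packaged as a reusable wrapper. A minimal sketch, assuming a hypothetical `with_temp_dir` helper that hands a fresh temporary directory to any command and removes it afterwards:

```shell
#!/bin/bash
# Hypothetical helper: run a command with a fresh temporary directory (passed
# as its last argument) and delete that directory afterwards, whether the
# command succeeds or fails. The ( ) body runs in a subshell, so the EXIT trap
# fires when this function finishes rather than when the whole script exits.
with_temp_dir() (
    tmp_dir=$(mktemp -d) || exit 1
    trap 'rm -rf "$tmp_dir"' EXIT
    "$@" "$tmp_dir"
    exit $?   # propagate the command's status; the EXIT trap runs on the way out
)

# Usage (fetch_into is a hypothetical function that receives the temp dir as $2):
#   fetch_into() { curl --fail -sS -o "$2/download" "$1" && mv "$2/download" file.tar.gz; }
#   with_temp_dir fetch_into "https://example.com/file.tar.gz"
```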

The Ultimate cURL Best Practices Checklist

Here's my production checklist that's saved us countless hours of debugging:

...

PRODUCTION_DOWNLOAD() {
    # Pre-download checks
    [[ -z "$1" ]] && { echo "❌ URL required"; return 1; }
    [[ -z "$2" ]] && { echo "❌ Destination path required"; return 1; }
    [[ -d "$(dirname "$2")" ]] || mkdir -p "$(dirname "$2")"

    # Resource monitoring (measured on the destination filesystem)
    local start_time=$(date +%s)
    local disk_space=$(df -Ph "$(dirname "$2")" | awk 'NR==2 {print $4}')
    echo "📊 Pre-download stats:"
    echo "Available disk space: $disk_space"

    # The actual download with all best practices
    # (optional 3rd argument: expected SHA-256, passed through to SECURE_DOWNLOAD)
    # Declare separately so $? reflects SECURE_DOWNLOAD, not `local`
    local result status
    result=$(SECURE_DOWNLOAD "$1" "$2" "$3" 2>&1)
    status=$?

    # Post-download verification
    if [[ $status -eq 0 ]]; then
        local end_time=$(date +%s)
        local duration=$((end_time - start_time))
        echo "✨ Download Statistics:"
        echo "Duration: ${duration}s"
        echo "Final size: $(du -h "$2" | cut -f1)"
        echo "Success! 🎉"
    else
        echo "❌ Download failed: $result"
    fi

    return $status
}

# Usage

PRODUCTION_DOWNLOAD "https://example.com/file.zip" "/path/to/destination/file.zip"
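One Bash pitfall worth knowing when writing wrappers like this: declaring and assigning in one statement (`local var=$(cmd)`) makes `$?` report the status of `local`, not of `cmd`, so a failed download can look like a success. ShellCheck flags this pattern as SC2155. A minimal demonstration, with hypothetical function names:

```shell
#!/bin/bash
# `local var=$(cmd)` sets $? to the exit status of `local` itself (0 here),
# hiding the failure of the command inside $(...).
masked_status() {
    local result=$(false)
    echo "$?"
}

# Declaring the variable first and assigning it separately keeps cmd's status.
preserved_status() {
    local result
    result=$(false)
    echo "$?"
}

masked_status     # prints 0
preserved_status  # prints 1
```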

Pro Tip: I always run this quick health check before starting large downloads:

CHECK_ENVIRONMENT() {
    local required_space=$((5 * 1024 * 1024)) # 5GB in KB

    # Check curl installation first; everything below depends on it
    command -v curl >/dev/null 2>&1 || {
        echo "⚠️ curl is not installed!" >&2
        return 1
    }

    # -P: one line per filesystem; -k: sizes in 1024-byte blocks (KB)
    local available_space=$(df -Pk . | awk 'NR==2 {print $4}')
    local curl_version=$(curl --version | head -n1)

    # Check disk space
    [[ $available_space -lt $required_space ]] && {
        echo "⚠️ Insufficient disk space!" >&2
        return 1
    }

    # Check SSL support
    curl --version | grep -q "SSL" || {
        echo "⚠️ curl lacks SSL support!" >&2
        return 1
    }

    echo "✅ Environment check passed!"
    echo "🔍 curl version: $curl_version"
    # df reports KB, so tell numfmt the input unit is 1024 bytes
    echo "💾 Available space: $(numfmt --from-unit=1024 --to=iec-i --suffix=B "$available_space")"
}

# Usage

CHECK_ENVIRONMENT || exit 1
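A fixed 5GB threshold is a blunt instrument; if you know the file size up front (for example from a `Content-Length` header), you can size the check to the actual download. A sketch assuming a hypothetical `has_free_space` helper:

```shell
#!/bin/bash
# Hypothetical helper: succeed if the filesystem holding DIR has at least
# REQUIRED_KB kilobytes free. `df -P -k` forces one line per filesystem and
# 1024-byte blocks, so column 4 ("Available") is always in KB.
has_free_space() {
    local dir="$1"
    local required_kb="$2"
    local available_kb
    available_kb=$(df -P -k "$dir" | awk 'NR==2 {print $4}')
    [ -n "$available_kb" ] && [ "$available_kb" -ge "$required_kb" ]
}

# Usage, sizing the check to the file (expected_size in bytes):
#   needed_kb=$(( (expected_size + 1023) / 1024 ))
#   has_free_space . "$needed_kb" || { echo "⚠️ Not enough space" >&2; exit 1; }
```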

Remember: These aren't just theoretical best practices - they're battle-tested solutions that have processed terabytes of data. Whether you're downloading webpages, files, or financial data, these practices will help you build reliable, production-grade download systems.

4 cURL Troubleshooting Scripts: Plus Ultimate Diagnostic Flowchart

Let's face it - even the best download scripts can hit snags. After debugging thousands of failed downloads, I've developed a systematic approach to troubleshooting. Let's talk about some real solutions that actually work!

Error 1: Common Error Messages

First, let's decode those cryptic error messages you might encounter:

| Exit Code | Meaning | Quick Fix | Real-World Example |
|---|---|---|---|
| 22 | HTTP error (status 400 or above, reported when using --fail) | Check URL & auth | curl -I --fail https://example.com |
| 28 | Operation timed out | Adjust timeouts | curl --connect-timeout 10 --max-time 300 |
| 56 | Failure receiving network data | Check network/proxy, retry | curl --retry 3 --retry-all-errors |
| 60 | Server's SSL certificate could not be verified | Point curl at the right CA bundle | curl --cacert /path/to/cert.pem --verbose |
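To turn those exit codes into hints automatically, a small lookup function is enough. A sketch, assuming the hypothetical name `explain_curl_exit`; the codes follow curl's documented exit-code list, and only a handful are covered:

```shell
#!/bin/bash
# Hypothetical helper: translate common curl exit codes into a short hint.
# See the EXIT CODES section of `man curl` for the full list.
explain_curl_exit() {
    case "$1" in
        0)  echo "OK" ;;
        6)  echo "Could not resolve host: check the hostname and DNS" ;;
        7)  echo "Failed to connect: check the port, firewall, or proxy" ;;
        22) echo "HTTP error (400 or above) with --fail: check URL and auth" ;;
        28) echo "Operation timed out: raise --connect-timeout / --max-time" ;;
        35) echo "TLS/SSL handshake failed: check protocol versions" ;;
        56) echo "Failure receiving network data: retry, check network or proxy" ;;
        60) echo "Certificate could not be verified: check the CA bundle" ;;
        *)  echo "curl exit code $1: see 'man curl' (EXIT CODES)" ;;
    esac
}

# Usage:
#   curl --fail -sS -o file.zip "https://example.com/file.zip"
#   status=$?
#   [ "$status" -ne 0 ] && explain_curl_exit "$status"
```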

Here's my go-to error diagnosis script:

#!/bin/bash

DIAGNOSE_DOWNLOAD() {

local url="$1"

local error_log=$(mktemp)

echo "🔍 Starting download diagnosis..."

# Step 1: Basic connection test with status code check

echo "Testing basic connectivity..."

if ! curl -sS -w "\nHTTP Status: %{http_code}\n" --head "$url" > "$error_log" 2>&1; then

echo "❌ Connection failed! Details:"

cat "$error_log"

# Check for common issues

if grep -q "Could not resolve host" "$error_log"; then

echo "💡 DNS resolution failed. Checking DNS..."

dig +short "$(echo "$url" | awk -F'[/:]' '{print $4}')"

elif grep -qiE "timed out|timeout" "$error_log"; then

echo "💡 Connection timeout. Try:"

echo "curl --connect-timeout 30 --max-time 300 \"$url\""

fi

else

echo "✅ Basic connectivity OK"