With the seemingly endless variety of platforms for instant communication these days (Slack, WhatsApp, RCS, and not to forget social media), one could easily forget about the original type of electronic communication: email. Despite regular claims that a new technology will replace email, it continues to thrive, and the number of messages keeps going up by about four percent every year. It should therefore come as no surprise that email is a crucial tool for most businesses, be that for keeping in touch with existing customers or for reaching out to new ones. When done right, email campaigns can prove immensely effective.

High-quality databases of email addresses can contribute a lot to successful marketing campaigns and skyrocket your leads and eventual sales. However, it is important that such lists are obtained in an ethical, reasonable, and efficient manner. This is what we are going to take a closer look at today.

In this article, we'll be exploring three different ways to scrape emails from websites and compile lists of addresses of prospective customers: with a specialised scraping API, with custom Python code, and with Google Sheets.

💡 Free trial for our scraping API

Did you know that ScrapingBee offers a free trial with 1,000 API requests on the house? No payment or credit card information is necessary whatsoever!

Things to keep in mind when scraping emails

Target audience

Emailing random people may work in some cases (see the next point), but it typically brings the best results if you clearly define the intended audience upfront.

Type of marketing campaign

Should it be a well-researched, targeted campaign or rather mass-mailing to see which customers bite? The latter can also work for many use cases, but it may come across as spammy and raise some flags with your mail provider. You may also have to handle an elevated number of abuse complaints. Targeted campaigns require more research but usually lead to better results.

Where to obtain addresses

Before you can scrape addresses, you need to define which sites (or type of sites) are relevant to your audience and where and how to crawl them.

Site navigation and scale

Are you crawling sites with a fixed number of static pages or with a large number of dynamic pages? This may influence your scraping approach.

Bot protection

Do the sites you want to scrape use bot-protection technologies? These may involve CAPTCHAs, for example, and might require additional steps and tools (e.g. headless browsers) to get past such security layers.

Cost

The age-old question: how much will this endeavour cost me? You want a return on investment, of course. How do the different scraping approaches stack up against each other price-wise?

Alternative ways

You may also want to consider approaches completely different from scraping. Maybe there are pre-built and verified lists available for sale.

How to scrape emails from any website - step by step

The following steps show how to scrape an existing site with ScrapingBee's API.

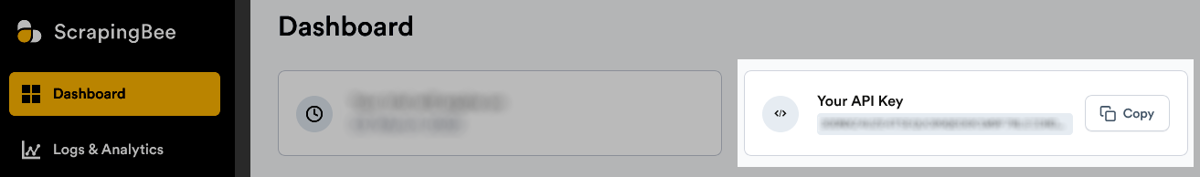

1. Get the ScrapingBee API key

If you haven't signed up for ScrapingBee yet, just head over to the Sign Up page and create your ScrapingBee account with 1,000 free trial requests. Once your account is active, ScrapingBee will automatically credit the free API requests to your account and you can immediately start using them.

The important bit here is the API key. The API key is the central authentication token for ScrapingBee and all you need to do is include it in your requests.

You can access and copy the API key in your ScrapingBee dashboard.
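Rather than pasting the key directly into every script, you may prefer to keep it in an environment variable. The following is a minimal sketch of that idea; the variable name SCRAPINGBEE_API_KEY and the helper get_api_key() are our own hypothetical choices, not something ScrapingBee requires.

```python
import os


def get_api_key(var_name: str = "SCRAPINGBEE_API_KEY") -> str:
    """Return the API key stored in an environment variable.

    The default variable name is a hypothetical convention; use whatever
    name fits your setup.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Please set the {var_name} environment variable")
    return key
```

You would then export the variable in your shell (e.g. `export SCRAPINGBEE_API_KEY=...`) and pass get_api_key() wherever the examples below use 'API_KEY_HERE'.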

2. Obtain a list of relevant sites

Search engines can be a great resource to find sites relevant to you. For example, if your business supplies Chinese restaurants in the New York area, you could use the terms "chinese restaurant new york email contact" to get a list of relevant websites for this context.

To achieve that, you can either crawl Google directly (but pay attention to their bot protection) or you can use ScrapingBee's dedicated API for Google Search and its Request Builder. The API allows you to query Google comfortably with a stable REST interface and without the hassle of having to manually compose search queries, parse HTML, skip ads, and deal with result localisation.

For our example, we simply opened the Google API Request Builder and entered the search terms "chinese restaurant new york email contact". As we want to run this in Python, we also selected "Python" as output, which provided us with the following code snippet (remember to replace API_KEY_HERE with your own API key):

```python
# Install the Python Requests library:
# `pip install requests`
import requests


def send_request():
    response = requests.get(
        url='https://app.scrapingbee.com/api/v1/store/google',
        params={
            'api_key': 'API_KEY_HERE',
            'search': 'chinese restaurant new york email contact',
            'language': 'en',
        },
    )
    print('Response HTTP Status Code: ', response.status_code)
    print('Response HTTP Response Body: ', response.content)


send_request()
```

When you run this, you should receive a response like the following.

```json
{
  "meta_data": {
    "url": "https://www.google.com/search?q=chinese+restaurant+new+york+email+contact&gl=us&hl=en&num=100",
    "number_of_results": 68300000,
    "location": null,
    "number_of_organic_results": 100,
    "number_of_ads": 0,
    "number_of_page": 68300000
  },
  "organic_results": [
    {
      "url": "https://www.pinchchinese.com/",
      "displayed_url": "https://www.pinchchinese.com",
      "description": "Chinese comfort food + wine + cocktails. SoHo NYC ... Location. 177 Prince St, New York, New York 10012. Telephone: 212-328-7880. Email: nihao@pinchchinese.com ...",
      "position": 3,
      "title": "Pinch Chinese",
      "domain": "www.pinchchinese.com",
      "sitelinks": [],
      "rich_snippet": {},
      "date": null,
      "date_utc": null
    },
    {
      "url": "http://hwayuannyc.com/contactus.html",
      "displayed_url": "http://hwayuannyc.com › contactus",
      "description": "Contact. Phone. CALL US. (+1) 212-966-6002 · (+1) 917-969-1338. EMAIL. hwayuannyc@gmail.com. Marker. Address. 42 E Broadway, New York, NY 10002. CONTACT US.",
      "position": 5,
      "title": "Chinese Cuisine and Noodle Restaurant in NYC",
      "domain": "hwayuannyc.com",
      "sitelinks": [],
      "rich_snippet": {},
      "date": null,
      "date_utc": null
    },
    {
      "url": "https://chai-nyc.com/",
      "displayed_url": "https://chai-nyc.com",
      "description": "Click the button below and make a reservation. RecommendedbyRestaurantji · Chai 柴院 | Authentic Chinese Restaurant ... Contact us. (646)-669-8299. Partnership ...",
      "position": 7,
      "title": "Chai NYC - Hell's Kitchen/Broadway, New York, NY",
      "domain": "chai-nyc.com",
      "sitelinks": [],
      "rich_snippet": {},
      "date": null,
      "date_utc": null
    },
    {
      "url": "https://hakkacuisine.nyc/",
      "displayed_url": "https://hakkacuisine.nyc",
      "description": "Hakka Cuisine in Chinatown - The New York Times. Prev ... Address: 11 Division St, New York, NY 10002.",
      "position": 8,
      "title": "- HAKKA CUISINE",
      "domain": "hakkacuisine.nyc",
      "sitelinks": [],
      "rich_snippet": {},
      "date": null,
      "date_utc": null
    },
    {
      .... LOTS-MORE-HERE ....
    }
  ],
  "local_results": [],
  "top_ads": [],
  "bottom_ads": [],
  "related_queries": [],
  "questions": [],
  "top_stories": [],
  "news_results": [],
  "knowledge_graph": {},
  "related_searches": []
}
```

As you can see, some of the entries already list email addresses in the description field. But as we want to learn how to scrape addresses from websites, we'll use the list as a starting point and use the first site for our next examples: https://www.pinchchinese.com/
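If you want to process this response programmatically, the sketch below collects each result's URL together with any addresses that already appear in its description. It assumes the JSON structure shown above (an organic_results list with url and description fields), uses the same regular expression this article applies later, and works on a trimmed-down sample instead of a live API call. The helper name parse_search_results() is hypothetical.

```python
import json
import re

# Same expression as used later in this article
EMAIL_RE = re.compile(r"[a-zA-Z0-9+._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+")


def parse_search_results(body: str) -> list[dict]:
    """Return the URL and any description emails for each organic result."""
    data = json.loads(body)
    results = []
    for item in data.get("organic_results", []):
        description = item.get("description") or ""
        results.append({
            "url": item.get("url"),
            "emails": EMAIL_RE.findall(description),
        })
    return results


# Trimmed-down sample of the response shown above
sample = json.dumps({
    "organic_results": [
        {"url": "https://www.pinchchinese.com/",
         "description": "... Email: nihao@pinchchinese.com ..."},
        {"url": "https://hakkacuisine.nyc/",
         "description": "Address: 11 Division St, New York, NY 10002."},
    ]
})

for result in parse_search_results(sample):
    print(result["url"], result["emails"])
```

With a real request, you would pass response.text from the previous snippet instead of the sample string.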

3. Scrape email addresses from the page

Just as we queried the list of sites, we can use ScrapingBee's data extraction API to get the actual email addresses. Once more, a Request Builder lets us set up the code with only a few clicks.

- Open the HTML API Request Builder in your dashboard.
- Click the "✉️ Extract email addresses from a web page" button to apply the email template and the appropriate CSS selector.
- Enter the URL of the site (https://www.pinchchinese.com/) into the URL box.
- Select "Python SDK".

The preview box should now contain the following Python code (again, make sure to use your own API key):

```python
# Install the Python ScrapingBee library:
# `pip install scrapingbee`
from scrapingbee import ScrapingBeeClient

client = ScrapingBeeClient(api_key='API_KEY_HERE')

extract_rules = {
    "email_addresses": {
        "selector": "a[href^='mailto']@href",
        "type": "list"
    }
}

response = client.get(
    'https://www.pinchchinese.com/',
    params={
        "extract_rules": extract_rules,
    },
)

print('Response HTTP Status Code: ', response.status_code)
print('Response HTTP Response Body: ', response.content)
```

Looks good, but what exactly is it doing?

- We first import the ScrapingBee Python library (you need to install it with pip, if you haven't done so already).
- Then, we create an instance of the ScrapingBee client and pass it our API key.
- Next, we define the extract rules using CSS selectors.
- And with the get() method of our client, we run the request and pass it the URL and the extract rules.

Voilà, response.content should now contain all the email addresses linked on the page 🥳.
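Because the selector returns the raw href attribute, each entry will usually still carry the mailto: prefix, and some links add a query string such as ?subject=... or list several recipients separated by commas. The following clean-up sketch assumes the JSON body is keyed by the rule name defined in extract_rules (email_addresses) and runs on a made-up sample body rather than a real response; clean_mailto_links() is a hypothetical helper.

```python
import json
from urllib.parse import unquote


def clean_mailto_links(body: bytes, key: str = "email_addresses") -> list[str]:
    """Turn extracted mailto: hrefs into a sorted list of unique addresses."""
    hrefs = json.loads(body).get(key) or []
    addresses = set()
    for href in hrefs:
        # Drop the scheme (case-insensitively) and any ?subject=... part
        if href.lower().startswith("mailto:"):
            href = href[len("mailto:"):]
        target = unquote(href.split("?", 1)[0])
        # A single mailto: link may name several comma-separated recipients
        for part in target.split(","):
            address = part.strip().lower()
            if address:
                addresses.add(address)
    return sorted(addresses)


# Hypothetical response body, for illustration only
sample_body = b'{"email_addresses": ["mailto:nihao@pinchchinese.com", "mailto:Nihao@PinchChinese.com?subject=Hello"]}'
print(clean_mailto_links(sample_body))  # ['nihao@pinchchinese.com']
```

Lowercasing is a pragmatic choice for de-duplication; strictly speaking, the local part of an address may be case-sensitive, although mail servers rarely treat it that way.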

Extracting email addresses with regular expressions

Sometimes, addresses are not part of specific <a> elements, and you may have to parse the entire document for email addresses. This is where parsing HTML with regular expressions can come in very handy.

For that, we slightly adjust our previous code sample: we omit the extract rules and set return_page_source to True instead. With this parameter set, ScrapingBee returns the page's original HTML source, and we can use re.findall() to get a list of all strings matching the expression.

Let's quickly run this:

```python
# Install the Python ScrapingBee library:
# `pip install scrapingbee`
from scrapingbee import ScrapingBeeClient

import re

client = ScrapingBeeClient(api_key='API_KEY_HERE')

response = client.get(
    'https://www.pinchchinese.com/',
    params={
        "return_page_source": True
    }
)

# Raw string, so that \. reaches the regex engine as an escaped dot
matches = re.findall(r"([a-zA-Z0-9+._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+)", response.content.decode())

print('Addresses found')
print(matches)
```

Once the script completes, we should have some output like this:

```text
Addresses found
['address1@server.com', 'address2@server.com']
```
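On real pages, the raw matches often contain duplicates and false positives. A typical case is retina image file names such as logo@2x.png, which the expression above matches as well. A small post-processing step can take care of both; the list of file extensions below is our own assumption and not exhaustive, and extract_emails() is a hypothetical helper.

```python
import re

EMAIL_RE = re.compile(r"[a-zA-Z0-9+._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+")

# File extensions that indicate an asset name rather than an address (not exhaustive)
ASSET_SUFFIXES = (".png", ".jpg", ".jpeg", ".gif", ".svg", ".webp", ".css", ".js")


def extract_emails(html: str) -> list[str]:
    """Find address-like strings, drop asset names, and de-duplicate in order."""
    seen = set()
    emails = []
    for match in EMAIL_RE.findall(html):
        candidate = match.lower()
        if candidate.endswith(ASSET_SUFFIXES) or candidate in seen:
            continue
        seen.add(candidate)
        emails.append(candidate)
    return emails


html = '<img src="logo@2x.png"> Mail us: info@example.com or INFO@example.com'
print(extract_emails(html))  # ['info@example.com']
```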

Keep in mind that some sites substitute common address characters, such as @ and ., with alternatives (e.g. AT, [AT], dot, etc.). If you are crawling such sites, you should adjust the expression accordingly (e.g. you could use (?:@|\s*\[?AT]?\s*) for the @ sign).
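An alternative to widening the expression is to normalise such text first and then run the unchanged regex over it. The sketch below only handles bracketed forms like [at], (AT), [dot] and (DOT); requiring the brackets is our own design choice to avoid rewriting the ordinary word "at" in running text, so bare forms such as "name AT example DOT com" are deliberately not covered. The helper name deobfuscate() is hypothetical.

```python
import re

EMAIL_RE = re.compile(r"[a-zA-Z0-9+._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+")

# Bracketed markers such as "[at]", "(AT)", "[dot]" or "(DOT)", with optional spaces
AT_RE = re.compile(r"\s*[\[(]\s*at\s*[\])]\s*", re.IGNORECASE)
DOT_RE = re.compile(r"\s*[\[(]\s*dot\s*[\])]\s*", re.IGNORECASE)


def deobfuscate(text: str) -> str:
    """Replace bracketed at/dot markers with the real characters."""
    return DOT_RE.sub(".", AT_RE.sub("@", text))


text = "Reach us at info [at] example [dot] com or sales(AT)example(DOT)org."
print(EMAIL_RE.findall(deobfuscate(text)))  # ['info@example.com', 'sales@example.org']
```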

ℹ️ Email syntax

While our regular expression works well for a demo and will match most email addresses, it may miss some less common formats that are nonetheless valid. If you want to find out more about the full range of valid addresses and formats, you can check out this blog post.

Other methods for scraping emails

Of course, there are also other, more hands-on ways to scrape sites for email addresses. For example, you could write your own custom Python scraper or even use more pragmatic approaches like spreadsheets. Let's check these out!

Email scraping with Python

For starters, let's write our own vanilla Python email scraper. For this, we'll use two popular third-party libraries: Requests for the network calls and Beautiful Soup for parsing HTML. Let's get them installed first:

pip install requests beautifulsoup4

With this done, we take some inspiration from our previous Beautiful Soup tutorial, Scraping web pages with Python, and use its basic code setup as the base for our scraper.

```python
import re
import sys

import requests
from bs4 import BeautifulSoup

response = requests.get("https://www.pinchchinese.com/")

if response.status_code != 200:
    print("Error fetching page")
    sys.exit(1)

soup = BeautifulSoup(response.text, "html.parser")

# CSS selector: all <a> elements whose href starts with "mailto:"
mails = soup.select("a[href^='mailto:']")
print('Addresses using a CSS selector')
print([mail.attrs['href'] for mail in mails])

# Regular expression over the raw HTML (raw string avoids an invalid-escape warning)
matches = re.findall(r"([a-zA-Z0-9+._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+)", response.text)
print('Addresses using a regular expression')
print(matches)
```

Here, we first use Requests to get the actual HTML page, then pass the content to Beautiful Soup and use a CSS selector with the .select() method to get a list of the relevant anchor tags. In a second step, we also pass the content to re.findall() and apply the previous regex approach once more.

If we run this now, we should get something along these lines:

```text
Addresses using a CSS selector
['mailto:address1@server.com', 'mailto:address2@server.com']
Addresses using a regular expression
['address1@server.com', 'address2@server.com']
```
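If you would rather avoid third-party dependencies for the parsing part, Python's built-in html.parser module can collect mailto: links as well. The sketch below is a rough stdlib-only stand-in for the Beautiful Soup selector; it parses a hard-coded snippet instead of a downloaded page, and the class name MailtoCollector is hypothetical.

```python
from html.parser import HTMLParser


class MailtoCollector(HTMLParser):
    """Collects href values of <a> tags that start with 'mailto:'."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names for us
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value and value.lower().startswith("mailto:"):
                self.links.append(value)


html = '<p>Contact: <a href="mailto:address1@server.com">Mail</a> <a href="/menu">Menu</a></p>'
collector = MailtoCollector()
collector.feed(html)
print(collector.links)  # ['mailto:address1@server.com']
```

To use it on a live page, you could feed it response.text from the Requests example above.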

💡 Scraping in Python with a headless browser

As some sites make heavy use of JavaScript and client-side rendering, you may need a full-fledged browser environment to scrape them. Please take a look at the article Scraping single page applications with Python for more information on that subject. But even here, the basic mail extraction rules shown in this article will still apply.

Email scraping with Google Sheets

You might not believe it, but one can scrape the web even with spreadsheet applications, such as Google Sheets 😲.

For this, we can use the native IMPORTXML() function provided by Google Sheets. This function takes a URL and an XPath expression and returns the matching data. With it, it is rather easy to save a list of URLs to a spreadsheet and use IMPORTXML() to get the desired content.

Let's try this with our demo site and use the following formula in Google Sheets!

=IMPORTXML("https://www.pinchchinese.com/", "//a[starts-with(@href, 'mailto:')]/@href")

Once you have entered that, Google will take a few seconds to load the page and eventually return the email addresses it found on the page.

The key aspect here is the following XPath expression, which selects the href attribute of all <a> tags whose href starts with mailto:.

//a[starts-with(@href, 'mailto:')]/@href

💡 As Google Sheets only supports XPath expressions, we had to switch away from CSS selectors, but the two technologies are pretty similar. Should you want to learn more about them, please check out Practical XPath for Web Scraping. There's also XPath vs CSS selectors which elaborates on the exact differences.

And if you thought scraping with a spreadsheet was unusual, you might find the next approach even more peculiar: scraping with a spreadsheet and regular expressions.

By using the built-in REGEXEXTRACT() function, we can easily apply the regular expression from our previous examples here as well. To achieve this, we need to chain the following functions in this order:

- We use IMPORTDATA() to load the content.
- With CONCATENATE(), we create a single string from the response.
- Now, we pass the content string (along with our regex [a-zA-Z0-9+._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+) to REGEXEXTRACT() and should receive the matching email address.

Putting this all together, we'd have the following formula for our demo site:

=REGEXEXTRACT(CONCATENATE(IMPORTDATA("https://www.pinchchinese.com/")), "[a-zA-Z0-9+._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+")

Unfortunately, REGEXEXTRACT() is still a bit limited and only returns the first match, but it is certainly an interesting approach to the topic and may be useful in some scenarios.
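The limitation is comparable to the difference between re.search() and re.findall() in Python: the former stops at the first hit, much like REGEXEXTRACT(), while the latter collects every match. The sketch below loosely mimics the spreadsheet pipeline (joining the imported rows, then applying the expression) on hard-coded sample rows; it is only an analogy and says nothing about how Google Sheets works internally.

```python
import re

PATTERN = r"[a-zA-Z0-9+._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+"

# Stand-in for the rows IMPORTDATA() would return (sample data)
rows = ["<p>Email: address1@server.com</p>", "<p>Press: address2@server.com</p>"]
content = "".join(rows)  # roughly what CONCATENATE() produces

first = re.search(PATTERN, content)
print(first.group(0))                # first match only, like REGEXEXTRACT()
print(re.findall(PATTERN, content))  # every match
```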

Summary

In this article, we took a closer look at how important emails can be for your business, which steps to take to get a list of potential leads from different sources, and how to parse web content specifically for email addresses.

We highly recommend the following articles as follow-up reads:

As always, web scraping can be very straightforward, but it can also become quite tricky once a site employs anti-bot measures. Those may require the use of a proper browser environment, geo-proxy management, rotating IP addresses, and request throttling on your side.
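Request throttling, at least, is easy to handle yourself. The following is a minimal sketch of a limiter that enforces a minimum delay between consecutive requests; the Throttle class, the placeholder example.com URLs, and the short 0.1-second interval used in the demo are all our own assumptions, so pick an interval the target site tolerates. It does not address proxies, IP rotation, or browser rendering.

```python
import time


class Throttle:
    """Ensures at least `interval` seconds pass between calls to wait()."""

    def __init__(self, interval: float = 1.0):
        self.interval = interval
        self._last = None  # time.monotonic() value of the previous call

    def wait(self) -> None:
        now = time.monotonic()
        if self._last is not None:
            remaining = self.interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


throttle = Throttle(interval=0.1)
start = time.monotonic()
for url in ["https://example.com/a", "https://example.com/b", "https://example.com/c"]:
    throttle.wait()
    # A real scraper would send its request for `url` here
elapsed = time.monotonic() - start
print(f"Three requests took {elapsed:.2f}s")
```

Using time.monotonic() rather than time.time() keeps the delays correct even if the system clock is adjusted while the scraper runs.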

Our article Web Scraping without getting blocked provides more insight here. Please don't hesitate to get in touch with us if you have further questions on how to successfully complete your scraping project. As mentioned earlier, we offer a completely free trial with 1,000 API requests included.

Happy Scraping from ScrapingBee!

Alexander is a software engineer and technical writer with a passion for everything network related.